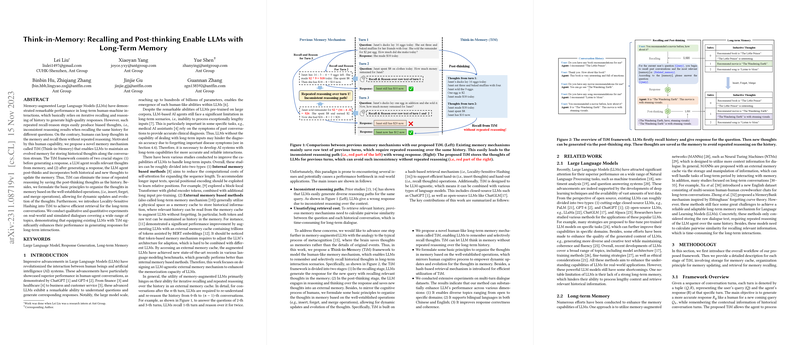

The paper introduces Think-in-Memory (TiM), a novel memory mechanism designed to augment LLMs with long-term memory capabilities by enabling them to remember and selectively recall historical thoughts in long-term interaction scenarios. The motivation stems from the limitations of existing memory-augmented LLMs, which rely on iterative recalling and repeated reasoning over the history in an external memory cache, leading to inconsistent reasoning paths and high retrieval costs. TiM addresses these issues by saving thoughts as memories, similar to the metacognition process in humans, rather than saving the details of original events.

The TiM framework consists of two stages:

- In the recalling stage, LLMs generate responses to new queries by recalling relevant thoughts from memory.

- In the post-thinking stage, the LLM reflects on the response it has just produced and saves the resulting new thoughts into an external memory.
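A minimal sketch of one recall-then-post-think turn follows. The callables `generate` and `post_think` are hypothetical stand-ins for LLM calls, and the word-overlap ranking is a deliberate simplification in place of TiM's hash-based retrieval.

```python
from typing import Callable, List


def tim_turn(
    query: str,
    memory: List[str],
    generate: Callable[[str, List[str]], str],
    post_think: Callable[[str, str], List[str]],
    top_k: int = 2,
) -> str:
    """Run one turn: recall thoughts, answer, then post-think and save new thoughts."""
    # Recalling stage: rank stored thoughts by word overlap with the query.
    # Word overlap is a placeholder; TiM itself retrieves via LSH and embeddings.
    query_words = set(query.lower().split())
    ranked = sorted(
        memory,
        key=lambda thought: len(query_words & set(thought.lower().split())),
        reverse=True,
    )
    response = generate(query, ranked[:top_k])

    # Post-thinking stage: derive new thoughts from the exchange and store them,
    # skipping thoughts that are already in memory.
    for thought in post_think(query, response):
        if thought not in memory:
            memory.append(thought)
    return response
```

The key point is the ordering: the answer is produced from recalled thoughts, and only afterwards is the exchange distilled into new thoughts, so later turns reuse conclusions instead of re-reasoning over raw history.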

To mirror the cognitive process of humans, the paper formulates basic principles to organize thoughts in memory based on well-established operations, such as insert, forget, and merge, allowing for dynamic updates and evolution of the thoughts. TiM utilizes Locality-Sensitive Hashing (LSH) to facilitate efficient hand-in (insert thoughts) and hand-out (recall thoughts) operations. TiM is designed to be LLM-agnostic, enabling its integration with both closed-source LLMs like ChatGPT and open-source LLMs like ChatGLM.

The key components of TiM are:

- Agent $\mathcal{A}$: a pre-trained LLM that conducts dynamic conversations.

- Memory Cache $\mathcal{M}$: a continually growing hash table of key-value pairs, where the key is the hash index and the value is a single thought.

- Hash-based Mapping $F(\cdot)$: LSH is used to quickly save and find relevant thoughts in $\mathcal{M}$.
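The memory cache and its hand-in/hand-out operations can be sketched as a dictionary keyed by hash index. Because similar thoughts may share an index, this sketch keeps a list of thoughts per key; the class name `MemoryCache` and the pluggable `hash_fn` (standing in for LSH) are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class MemoryCache:
    """Hash table from hash index to the thoughts stored under that index."""

    def __init__(self, hash_fn: Callable[[str], int]) -> None:
        # hash_fn is a hypothetical stand-in for TiM's LSH mapping.
        self.hash_fn = hash_fn
        self.table: Dict[int, List[str]] = defaultdict(list)

    def hand_in(self, thought: str) -> int:
        """Insert a thought under its hash index and return that index."""
        key = self.hash_fn(thought)
        self.table[key].append(thought)
        return key

    def hand_out(self, query: str) -> List[str]:
        """Recall all thoughts that share the query's hash index."""
        return list(self.table.get(self.hash_fn(query), []))
```

For example, with a toy `hash_fn` such as `lambda s: ord(s[0].lower()) % 4`, "alice likes jazz" and "anna plays piano" land in the same bucket, so a query starting with "a" recalls both.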

The paper defines an "inductive thought" as text containing the relation between two entities, satisfying a relation triple $(h, r, t)$, where $h$ is the head entity connected with the tail entity $t$ via the relation $r$.
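An inductive thought can be held as an immutable triple. The sentence template in `to_text` is a hypothetical way of turning the triple back into the text the LLM reads; the paper does not prescribe it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InductiveThought:
    """A thought expressed as a (head, relation, tail) triple."""

    head: str      # head entity
    relation: str  # relation linking head to tail
    tail: str      # tail entity

    def to_text(self) -> str:
        # Hypothetical template for rendering the triple as natural language.
        return f"The {self.relation} of {self.head} is {self.tail}."
```

For instance, `InductiveThought("Alice", "favorite food", "sushi").to_text()` returns `"The favorite food of Alice is sushi."`. Frozen instances are hashable, so identical thoughts collapse to one entry in a set.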

The paper uses a hash table as the architecture of TiM's storage system, in which similar thoughts are assigned the same hash index. The LSH method assigns each $d$-dimensional embedding vector $x \in \mathbb{R}^d$ to a hash index $F(x)$, where nearby vectors get the same hash index with higher probability. The hash function is defined as:

$$F(x) = \arg\max\left([xR;\, -xR]\right)$$

where:

- $x$ is the $d$-dimensional embedding vector

- $R$ is a random matrix of size $d \times b/2$

- $b$ is the number of groups in the memory

- $[\cdot\,;\,\cdot]$ denotes the concatenation of two vectors
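The hashing step can be sketched in pure Python. This assumes the angular LSH form $F(x) = \arg\max([xR; -xR])$ with $R$ a random $d \times b/2$ matrix; drawing $R$ from a standard normal distribution and the helper names `make_projection` and `lsh_index` are assumptions for illustration, not the paper's code.

```python
import random
from typing import List, Sequence


def make_projection(d: int, b: int, seed: int = 0) -> List[List[float]]:
    """Random d x (b/2) matrix R with standard-normal entries (assumed init)."""
    if b % 2:
        raise ValueError("b (number of groups) must be even")
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(b // 2)] for _ in range(d)]


def lsh_index(x: Sequence[float], R: List[List[float]]) -> int:
    """F(x) = argmax([xR; -xR]), an index in the range [0, b)."""
    # Project x onto the b/2 random directions (the row vector x times R).
    proj = [sum(x[i] * R[i][j] for i in range(len(x))) for j in range(len(R[0]))]
    # Concatenate xR and -xR to obtain b scores, one per group.
    scores = proj + [-p for p in proj]
    return max(range(len(scores)), key=scores.__getitem__)
```

Only the direction of `x` matters: scaling a vector by a positive constant never changes its index, and vectors separated by a small angle usually land in the same group.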

Memory retrieval is a two-stage process for finding the most relevant thoughts: LSH-based retrieval first locates the group of thoughts that shares the query's hash index, and similarity-based retrieval then ranks the thoughts within that group.
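The two stages can be sketched as follows, assuming memory is a dict from hash index to `(thought, embedding)` pairs. Cosine similarity, the default `k`, and the function names are assumptions; `hash_fn` is a placeholder for the LSH mapping.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]
Memory = Dict[int, List[Tuple[str, Vector]]]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(
    query_vec: Vector, memory: Memory, hash_fn: Callable[[Vector], int], k: int = 2
) -> List[str]:
    # Stage 1: LSH-based retrieval narrows the search to the query's group.
    candidates = memory.get(hash_fn(query_vec), [])
    # Stage 2: similarity-based retrieval ranks only those candidates.
    ranked = sorted(candidates, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]
```

Restricting stage 2 to a single group is what saves time: the query is compared with one group of thoughts rather than with the entire memory.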

TiM organizes thoughts in memory through the following operations, which support their dynamic updating and evolution:

- Insert: storing new thoughts into the memory.

- Forget: removing unnecessary thoughts from the memory, such as contradictory thoughts.

- Merge: merging similar thoughts in the memory, such as thoughts with the same head entity.
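The three operations can be sketched on a store that groups thoughts by head entity. Grouping by head, the method names, and the string-joining merge are assumptions; a full system would presumably use the LLM to decide what to forget and how to summarize merged thoughts, which this sketch leaves to the caller.

```python
from collections import defaultdict
from typing import Dict, List


class ThoughtStore:
    """Thoughts grouped by head entity (the grouping key is an assumption)."""

    def __init__(self) -> None:
        self.by_head: Dict[str, List[str]] = defaultdict(list)

    def insert(self, head: str, thought: str) -> None:
        """Insert: store a new thought, skipping exact duplicates."""
        if thought not in self.by_head[head]:
            self.by_head[head].append(thought)

    def forget(self, head: str, thought: str) -> None:
        """Forget: remove a thought judged unnecessary, e.g. a contradicted one."""
        thoughts = self.by_head.get(head, [])
        if thought in thoughts:
            thoughts.remove(thought)

    def merge(self, head: str) -> None:
        """Merge: collapse all thoughts about one head entity into a single thought.

        Joining with "; " is a placeholder for an LLM-written summary.
        """
        thoughts = self.by_head.get(head, [])
        if len(thoughts) > 1:
            self.by_head[head] = ["; ".join(thoughts)]
```

For example, after inserting "Alice lives in Paris" and "Alice likes jazz", forgetting the Paris thought once Alice moves, inserting "Alice lives in Berlin", and merging, the store holds the single thought "Alice likes jazz; Alice lives in Berlin".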

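The paper adopts Low-Rank Adaptation (LoRA) for computation-efficient fine-tuning. LoRA fine-tunes according to $h = W_0 x + \Delta W x = W_0 x + BAx$, where $W_0 \in \mathbb{R}^{d \times k}$, $B \in \mathbb{R}^{d \times r}$, $A \in \mathbb{R}^{r \times k}$, and the rank satisfies $r \ll \min(d, k)$. Here $W_0$ is the frozen pretrained weight matrix, and only $A$ and $B$ are trained.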
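The LoRA update can be illustrated with plain lists. The sketch omits LoRA's usual $\alpha/r$ scaling factor, and the toy matrices and the 4096/rank-8 sizes below are illustrative, not values from the paper. Because $B$ is conventionally initialized to zero, the adapted layer initially reproduces the pretrained output.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(M: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def lora_forward(W0: Matrix, B: Matrix, A: Matrix, x: Vector) -> Vector:
    """h = W0 x + B A x, computing A x first so the d x k product BA is never built."""
    base = matvec(W0, x)                # frozen pretrained weights, d x k
    low_rank = matvec(B, matvec(A, x))  # A is r x k, B is d x r
    return [b + l for b, l in zip(base, low_rank)]


def trainable_params(d: int, k: int, r: int) -> int:
    """Number of trainable entries in A and B: r * k + d * r."""
    return r * (d + k)
```

For a 4096 × 4096 layer with rank 8, `trainable_params(4096, 4096, 8)` gives 65,536 trainable values versus 16,777,216 for full fine-tuning, about 0.39%.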

The paper evaluates TiM on three datasets: KdConv, Generated Virtual Dataset (GVD), and Real-world Medical Dataset (RMD). The LLMs used are ChatGLM and Baichuan2. The baselines include answering questions without any memory mechanism and SiliconFriend.

The evaluation metrics include:

- Retrieval Accuracy

- Response Correctness

- Contextual Coherence

On the GVD dataset, TiM outperformed SiliconFriend on all metrics, most notably contextual coherence. On the KdConv dataset, TiM obtained the best results on all three topics (film, music, and travel). On the RMD dataset, TiM improved overall response quality in real-world medical conversations, with significant improvements in response correctness and contextual coherence. TiM also reduced retrieval time compared with calculating the pairwise similarity between the question and the whole memory.

A medical agent, TiM-LLM, was developed based on ChatGLM and TiM for patient-doctor conversations. TiM-LLM serves as an auxiliary tool that helps clinical doctors provide treatment options and medical suggestions tailored to patients' needs.