Overview of SPHINX: Advancements in Multi-modal LLMs

The paper introduces SPHINX, a multi-modal LLM (MLLM) designed to integrate visual and linguistic modalities more tightly for diverse applications. Its distinguishing feature is a joint mixing approach that combines model weights, tuning tasks, and visual embeddings in a single model aimed at multi-purpose visual instruction following.

Key Contributions

- Unfreezing and Weight Mixing:

To strengthen vision-language alignment, SPHINX unfreezes the LLM weights during pre-training, whereas many earlier MLLMs keep the LLM frozen. A notable strategy is weight mixing between LLMs trained on real-world data and on synthetic data. Linearly combining these domain-specific models lets SPHINX incorporate semantic knowledge from both domains while maintaining robustness.

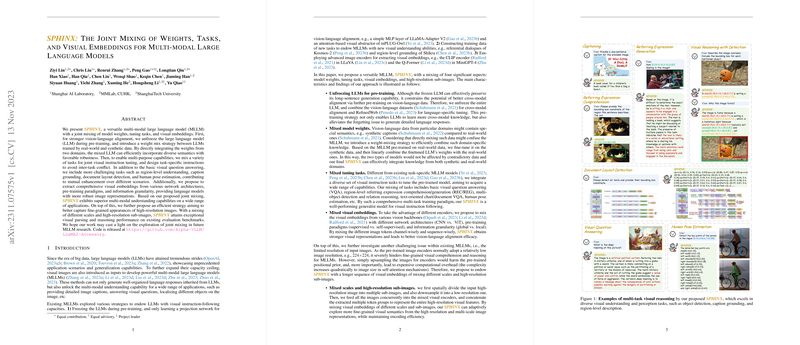
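
The linear combination described above can be sketched as a plain interpolation between two checkpoints. This is a minimal illustration, not the authors' implementation: the function name `mix_weights`, the coefficient `beta`, and the representation of a checkpoint as a dictionary of flat float lists are all assumptions made for the example.

```python
from typing import Dict, List

# A checkpoint is modelled here as {parameter name: flat list of floats}.
# This representation is an assumption chosen to keep the sketch dependency-free.
Weights = Dict[str, List[float]]


def mix_weights(real: Weights, synthetic: Weights, beta: float) -> Weights:
    """Return beta * real + (1 - beta) * synthetic, parameter by parameter."""
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    if real.keys() != synthetic.keys():
        raise ValueError("checkpoints must share the same parameter names")

    mixed: Weights = {}
    for name, real_values in real.items():
        synthetic_values = synthetic[name]
        if len(real_values) != len(synthetic_values):
            raise ValueError(f"size mismatch for parameter {name!r}")
        # Element-wise linear interpolation between the two domain-specific models.
        mixed[name] = [
            beta * r + (1.0 - beta) * s
            for r, s in zip(real_values, synthetic_values)
        ]
    return mixed
```

With `beta = 1.0` the result equals the real-world checkpoint, and with `beta = 0.0` it equals the synthetic one; intermediate values trade off the two domains without any additional training.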

- Multifaceted Task Integration:

SPHINX's robustness is further elevated by mixing various visual tasks, each with distinct instructions to mitigate inter-task conflicts. Beyond basic visual question answering, SPHINX tackles complex tasks such as region-level understanding and human pose estimation. This extensive task integration fosters mutual capability enhancement across scenarios.
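
One way to picture task mixing with distinct instructions is a merged training set in which every sample carries its task's instruction. The sketch below is purely illustrative: the task names, the instruction wording in `TASK_INSTRUCTIONS`, and the `build_mixed_dataset` helper are hypothetical and do not come from the paper.

```python
from typing import Dict, List, Tuple

# Hypothetical instruction templates, one per task. The wording is illustrative only.
TASK_INSTRUCTIONS: Dict[str, str] = {
    "vqa": "Answer the question about the image.",
    "region": "Describe the region inside the given bounding box.",
    "pose": "List the keypoints of the person in the image.",
}


def build_mixed_dataset(
    samples_by_task: Dict[str, List[Tuple[str, str]]]
) -> List[Dict[str, str]]:
    """Merge per-task (query, answer) pairs into one instruction-tagged list."""
    mixed: List[Dict[str, str]] = []
    for task, samples in samples_by_task.items():
        # Raises KeyError for a task without a registered instruction.
        instruction = TASK_INSTRUCTIONS[task]
        for query, answer in samples:
            mixed.append(
                {
                    "task": task,
                    # A distinct prefix per task keeps the tasks distinguishable
                    # once they share a single training set.
                    "prompt": f"{instruction} {query}".strip(),
                    "target": answer,
                }
            )
    return mixed
```

In practice the merged list would be shuffled before training; that step is left out so the sketch stays deterministic.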

- Rich Visual Embeddings:

The model capitalizes on embedding extraction from multiple network architectures with varying pre-training paradigms and granularity levels. This comprehensive visual representation is achieved by mixing embeddings from both global and local contexts, enhancing SPHINX's visual parsing abilities.
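
As a rough illustration of embedding mixing, the sketch below concatenates per-token features from several encoders along the channel dimension. Two assumptions are made here that the summary does not state: that the encoders emit the same number of tokens, and that the mixing is a concatenation. The `mix_embeddings` helper and the nested-list representation are hypothetical.

```python
from typing import List, Sequence

# Token embeddings modelled as [num_tokens][channels] nested lists (an assumption).
Tokens = List[List[float]]


def mix_embeddings(encoder_outputs: Sequence[Tokens]) -> Tokens:
    """Concatenate the features of each token across encoders (channel-wise)."""
    if not encoder_outputs:
        raise ValueError("at least one encoder output is required")
    num_tokens = len(encoder_outputs[0])
    if any(len(tokens) != num_tokens for tokens in encoder_outputs):
        raise ValueError("all encoders must produce the same number of tokens")

    mixed: Tokens = []
    for index in range(num_tokens):
        merged: List[float] = []
        for tokens in encoder_outputs:
            # Append this encoder's channels for the current token.
            merged.extend(tokens[index])
        mixed.append(merged)
    return mixed
```

The resulting channel width is the sum of the individual encoder widths, so a projection layer would normally follow to match the LLM's hidden size.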

Practical Implications

SPHINX's design targets stronger multi-modal understanding across diverse application areas. The model also handles high-resolution images through a novel mixing strategy that processes multiple scales and sub-images of the same input, which addresses a significant constraint of existing MLLMs.
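
The scale and sub-image mixing can be sketched as follows: a square high-resolution image is cut into encoder-sized tiles, and a downsampled copy of the whole image is added as a global view. The tile size, the square-image restriction, the subsampling method, and all function names are assumptions made for this example, not details taken from the paper.

```python
from typing import List

# Single-channel image as [height][width] nested lists, for brevity (an assumption).
Image = List[List[float]]


def split_into_tiles(image: Image, tile: int) -> List[Image]:
    """Cut the image into non-overlapping tile x tile sub-images, row by row."""
    height, width = len(image), len(image[0])
    if height % tile or width % tile:
        raise ValueError("image size must be a multiple of the tile size")
    return [
        [row[left:left + tile] for row in image[top:top + tile]]
        for top in range(0, height, tile)
        for left in range(0, width, tile)
    ]


def downsample(image: Image, factor: int) -> Image:
    """Keep every factor-th pixel in both directions (simple subsampling)."""
    return [row[::factor] for row in image[::factor]]


def multi_scale_views(image: Image, tile: int) -> List[Image]:
    """Return the local sub-images followed by one low-resolution global view."""
    height = len(image)
    if height != len(image[0]):
        raise ValueError("this sketch assumes a square image")
    if height % tile:
        raise ValueError("image size must be a multiple of the tile size")
    factor = height // tile
    return split_into_tiles(image, tile) + [downsample(image, factor)]
```

Every returned view has the same `tile x tile` size, so a fixed-resolution visual encoder could process each one; the sub-images preserve fine detail while the downsampled view keeps the overall layout.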

The proposed approach not only advances visual reasoning and parsing but also lays the groundwork for integrating with other visual foundation models, such as SAM and Stable Diffusion, for broader functional applications like language-referred segmentation and image editing.

Experimental Validation

SPHINX demonstrates impressive results on established benchmarks, outperforming state-of-the-art models in various tasks. The model's performance underscores its effectiveness in adapting to different domains and tasks, suggesting broader applicability in real-world settings.

Theoretical Implications and Future Prospects

Theoretically, SPHINX's joint mixing strategy offers a novel paradigm for synergizing different types of data and tasks within a unified model framework. This innovative approach could inspire future MLLM research to further explore domain-specific fine-tuning and task integration.

Future research may extend SPHINX's capabilities by incorporating additional modalities or expanding on task diversity, thereby pushing the boundaries of AI's interpretive and generative proficiency across modalities.

In summary, SPHINX represents a significant step forward in multi-modal AI integration, combining the mixing of weights, tasks, and visual embeddings to improve its functionality and adaptability. The paper lays groundwork that could inform future research on multi-modal LLMs.