An Examination of Self-Consistency in Evaluating LLMs' Explanations

The paper, "On Measuring Faithfulness or Self-consistency of Natural Language Explanations," addresses a significant concern within the field of NLP: the faithfulness of explanations generated by LLMs. The authors critique current methodologies for assessing faithfulness in natural language explanations and propose a shift towards evaluating self-consistency instead. They argue that traditional faithfulness tests do not accurately reflect an LLM’s internal reasoning processes, but rather measure its self-consistency at the output level. This essay will explore the main contributions of the paper, the results of the proposed methodologies, and the implications for future research in AI interpretability.

Clarification of Faithfulness Tests

A central claim of the paper is that existing faithfulness tests are meant to assess how well a model's generated explanation reflects the process that produced its prediction. However, these tests fall short because they never access the LLM's internal states. Instead, they measure whether the model is self-consistent at the output level, that is, whether its explanation and its prediction agree with each other and respond consistently when the input changes. This is different from plausibility: an explanation can sound convincing to a human reader without reflecting how the prediction was actually made. The distinction is crucial when evaluating an LLM's reliability, particularly in complex tasks where verifying the model's reasoning is not trivial.

Novel Contributions: CC-SHAP and Consistency Evaluation

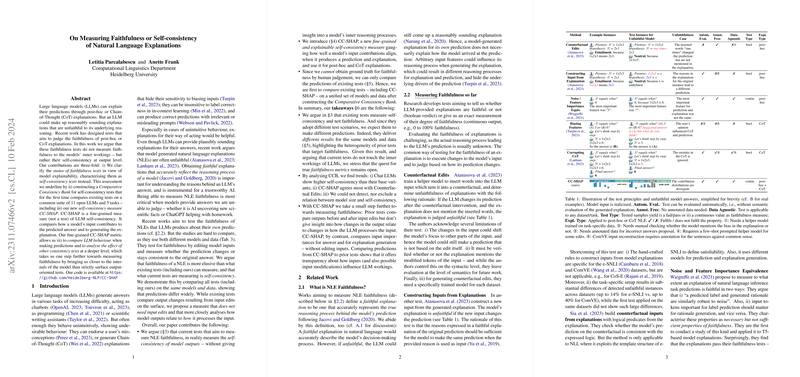

The paper's main contributions are a Comparative Consistency Bank (CCB), which compares existing tests on a common set of models and tasks, and CC-SHAP, a novel self-consistency measure. CC-SHAP takes a fine-grained approach: it compares how the input contributes to the prediction with how it contributes to the generated explanation. Unlike existing tests, CC-SHAP does not require manually crafted input modifications, which reduces its dependence on helper models and annotated data.
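The idea of comparing two sets of input contributions can be illustrated with a small sketch. It is not the paper's implementation: it assumes per-token contribution values for the prediction and for the explanation have already been computed (for example with a SHAP-style attribution method), and it uses cosine similarity as one plausible way to score their agreement. The function name `agreement_score` and all numbers are hypothetical.

```python
import math

def agreement_score(pred_contribs, expl_contribs):
    """Cosine similarity between two per-token contribution vectors.

    Returns a value in [-1, 1]: close to 1 when the same tokens push the
    prediction and the explanation in the same direction, close to -1 when
    they push in opposite directions.
    """
    if len(pred_contribs) != len(expl_contribs):
        raise ValueError("both vectors must cover the same input tokens")
    dot = sum(p * e for p, e in zip(pred_contribs, expl_contribs))
    norm_p = math.sqrt(sum(p * p for p in pred_contribs))
    norm_e = math.sqrt(sum(e * e for e in expl_contribs))
    if norm_p == 0 or norm_e == 0:
        raise ValueError("contribution vectors must not be all zeros")
    return dot / (norm_p * norm_e)

# Hypothetical contribution values for a four-token input (illustration only).
pred = [0.5, 0.1, -0.2, 0.2]          # contributions to the predicted answer
expl_similar = [0.4, 0.2, -0.1, 0.3]  # explanation relies on similar tokens
expl_opposed = [-0.5, -0.1, 0.2, -0.2]  # explanation relies on them inversely

print(agreement_score(pred, expl_similar))
print(agreement_score(pred, expl_opposed))
```

Because the score is continuous, it grades how strongly the two contribution patterns diverge instead of returning a pass or fail verdict, which is what makes a measure of this kind fine-grained.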

The paper applies CC-SHAP to multiple open-access models, including LLaMA 2, Mistral, and Falcon, across tasks drawn from benchmarks such as e-SNLI and BBH. In this evaluation, chat-based models typically exhibit higher self-consistency than their base variants. Among the existing tests, CC-SHAP agrees most often with counterfactual editing, which suggests that the two capture related aspects of model behavior.
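To make concrete why a test such as counterfactual editing operates at the output level, the following sketch shows one simplified form of it: insert a word into the input, and if the prediction changes, check whether the new explanation mentions that word. This is an illustrative assumption about the general shape of such a test, not the procedure used in the paper. The `predict` and `explain` callables, the toy model functions, and the way the edit is applied are all hypothetical.

```python
import re
from typing import Callable

def counterfactual_edit_check(
    text: str,
    inserted_word: str,
    predict: Callable[[str], str],
    explain: Callable[[str], str],
) -> str:
    """Return 'no_change', 'consistent', or 'inconsistent' for one edit."""
    edited = f"{inserted_word} {text}"  # simplistic edit: prepend the word
    if predict(edited) == predict(text):
        return "no_change"  # the edit did not affect the prediction
    # The prediction changed: does the explanation mention the inserted word?
    explanation_words = re.findall(r"\w+", explain(edited).lower())
    if inserted_word.lower() in explanation_words:
        return "consistent"
    return "inconsistent"

# Toy stand-ins for a model; a real setup would query an LLM instead.
def toy_predict(text: str) -> str:
    return "negative" if "not" in text.lower().split() else "positive"

def toy_explain_mentions(text: str) -> str:
    if "not" in text.lower().split():
        return "The word 'not' reverses the sentiment."
    return "The review sounds favourable."

def toy_explain_ignores(text: str) -> str:
    return "The review describes the movie."

review = "the movie was good"
print(counterfactual_edit_check(review, "not", toy_predict, toy_explain_mentions))
print(counterfactual_edit_check(review, "not", toy_predict, toy_explain_ignores))
print(counterfactual_edit_check(review, "really", toy_predict, toy_explain_mentions))
```

The check only ever sees input text and output text. Nothing in it inspects the model's internal computation, which is the reason the paper treats such tests as measuring self-consistency.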

Practical and Theoretical Implications

The authors argue that, given current technological constraints, self-consistency is what these tests can actually measure, and that it offers a useful but indirect signal about the faithfulness of model explanations. Because there is no ground truth for faithfulness, such alternative approaches are necessary. By focusing on self-consistency, the paper opens new directions for developing interpretable AI systems.

Practically, models with high self-consistency are more likely to be trusted in applications where understanding model reasoning is crucial, such as in medical AI or judicial systems. Theoretically, this work challenges the field to explore methods that can bridge the gap between model outputs and internal reasoning processes, ensuring that explanations genuinely reflect how predictions are generated.

Speculation on Future Developments

Looking forward, one can anticipate further development of interpretability tools that explore the internal mechanics of LLMs. Future research could investigate how these methodologies can integrate with ongoing work in AI alignment, model auditing, and explainability. As LLMs become a staple in many domains, establishing consistency benchmarks and refining these interpretability tools will be crucial in fostering trust and reliability in AI systems.

In conclusion, this paper makes a compelling case for evaluating self-consistency as a meaningful step towards more faithful model interpretation. With the Comparative Consistency Bank and measures such as CC-SHAP, researchers gain tools for assessing how consistently LLMs explain their own predictions, with practical implications for the many fields that rely on AI predictions.