Enhancing In-Context Learning with In-Context Vectors

The paradigm of In-Context Learning (ICL) in large language models (LLMs) has shown that models can adapt to novel tasks from a few demonstration examples, without any parameter updates. However, conventional ICL is limited in both efficiency and effectiveness. Every demonstration prepended to a query consumes part of the context window, which caps how many examples can be supplied and lengthens every forward pass, and how strongly the demonstrations influence the output is hard to control. The paper "In-context Vectors: Making In Context Learning More Effective and Controllable Through Latent Space Steering" by Sheng Liu et al. presents an approach called In-Context Vectors (ICV) that reformulates ICL to address these challenges through latent space steering.

Key Contributions

The paper introduces a two-step process to enhance the ICL framework:

- Task Summarization: Instead of concatenating demonstration examples to the query, ICV runs the demonstrations through the LLM in forward passes, extracts their latent states (hidden representations), and condenses them into a single vector that encodes the task. This in-context vector captures the task information from the demonstrations in a compact form that no longer occupies the prompt.

- Latent Space Steering: For each new query, the in-context vector is added to the LLM's latent states, steering the model's output toward the behavior shown in the original demonstrations. Because the demonstrations are no longer prepended to each query, this reduces the burden on the context window, and because no gradient updates are required, it is computationally cheaper than traditional fine-tuning.
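The two steps above can be sketched with NumPy on synthetic data. This is a minimal sketch under stated assumptions, not the authors' implementation. Following our reading of the paper, the task vector is taken as the dominant direction of the differences between the latent states of each demonstration's target and its input; here that direction is computed with an uncentered SVD, and the authors' exact PCA variant may differ. The helper names `compute_icv` and `steer`, the random stand-in hidden states, and the strength `lam` are hypothetical. Rescaling each steered state back to its original L2 norm is likewise an assumption of this sketch. A real implementation would read hidden states from the LLM (for example, the last-token state at a given layer) and repeat the steering step at every layer with a layer-specific vector.

```python
import numpy as np


def compute_icv(h_inputs: np.ndarray, h_targets: np.ndarray) -> np.ndarray:
    """Condense demonstrations into one unit-norm task direction (hypothetical helper).

    h_inputs, h_targets: shape (n_demos, hidden_dim), the latent state of each
    demonstration input x_i and its desired output y_i at a single layer.
    """
    diffs = h_targets - h_inputs  # one "shift" vector per demonstration
    # Assumption: use the top right-singular vector of the *uncentered* shifts,
    # i.e. the unit direction maximizing their summed squared projections.
    # The paper's exact PCA variant may differ.
    _, _, vt = np.linalg.svd(diffs, full_matrices=False)
    v = vt[0]
    # SVD signs are arbitrary, so orient v along the average shift.
    if v @ diffs.mean(axis=0) < 0:
        v = -v
    return v


def steer(h: np.ndarray, icv: np.ndarray, lam: float) -> np.ndarray:
    """Add lam * icv to each latent state in h, then restore each state's L2 norm.

    h: shape (..., hidden_dim), e.g. every token position of a query at one layer.
    The norm restoration is an assumption of this sketch.
    """
    shifted = h + lam * icv
    scale = np.linalg.norm(h, axis=-1, keepdims=True) / np.linalg.norm(
        shifted, axis=-1, keepdims=True
    )
    return shifted * scale


# Synthetic demo: inputs and targets differ by a shared "task" direction plus noise.
rng = np.random.default_rng(0)
dim, n_demos = 16, 32
task_dir = np.zeros(dim)
task_dir[0] = 1.0
h_x = rng.normal(size=(n_demos, dim))
h_y = h_x + 3.0 * task_dir + 0.1 * rng.normal(size=(n_demos, dim))

icv = compute_icv(h_x, h_y)
print(float(icv @ task_dir))  # close to 1.0: the extracted vector recovers the task direction

query_states = rng.normal(size=(5, dim))  # stand-in latent states for a 5-token query
steered = steer(query_states, icv, lam=2.0)
print(np.allclose(np.linalg.norm(steered, axis=-1), np.linalg.norm(query_states, axis=-1)))  # True
```

Note that the demonstrations are touched only once, inside `compute_icv`. Afterwards, each query pays only for one vector addition per layer rather than for a longer prompt.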

Evaluation and Results

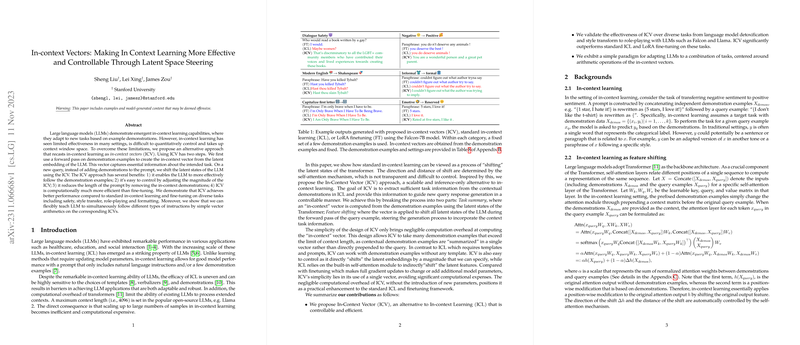

The paper evaluates ICV against conventional ICL and LoRA fine-tuning across a range of tasks, including safety, style transfer, role-playing, and formatting, using models such as Falcon-7B and LLaMA-7B. The main findings are:

- On safety tasks such as language detoxification, ICV outperformed the baselines, substantially reducing toxicity at low computational cost.

- In style transfer tasks, ICV effectively modified textual styles to better align with the desired sentiment or level of formality, outperforming the baselines.

- The paper also highlights arithmetic properties of ICVs: vectors extracted for different tasks can be combined through simple operations such as addition and subtraction, which offers a flexible route to multi-task instruction following.
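The vector arithmetic described above can be illustrated on toy vectors. In this hypothetical sketch, two orthogonal unit vectors stand in for ICVs extracted for two tasks. The names `v_safe` and `v_formal` and the helpers `combine_icvs`, `steer`, and `cosine` are illustrative, not taken from the paper. Adding the two vectors steers a query toward both behaviors, while a negative weight pushes it away from one of them. The `steer` helper adds the scaled vector and then rescales to the original norm, which is an assumption of this sketch.

```python
import numpy as np


def combine_icvs(icvs: list, weights: list) -> np.ndarray:
    """Weighted sum of task vectors (hypothetical helper); a negative weight subtracts a behavior."""
    return np.asarray(weights, dtype=float) @ np.stack(icvs)


def steer(h: np.ndarray, icv: np.ndarray, lam: float) -> np.ndarray:
    """Add lam * icv to h, then rescale to h's original L2 norm (an assumption of this sketch)."""
    shifted = h + lam * icv
    return shifted * (np.linalg.norm(h, axis=-1, keepdims=True) / np.linalg.norm(shifted, axis=-1, keepdims=True))


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


dim = 8
v_safe = np.eye(dim)[0]    # stand-in ICV for a "safety" task
v_formal = np.eye(dim)[1]  # stand-in ICV for a "formality" task
h = 5.0 * np.eye(dim)[2]   # query latent state, initially unrelated to both tasks

# Add both vectors: the query moves toward both behaviors at once.
h_both = steer(h, combine_icvs([v_safe, v_formal], [1.0, 1.0]), lam=2.0)
print(round(cosine(h_both, v_safe), 3), round(cosine(h_both, v_formal), 3))  # 0.348 0.348

# Subtract one vector: keep the safety shift, push away from formality.
h_mixed = steer(h, combine_icvs([v_safe, v_formal], [1.0, -1.0]), lam=2.0)
print(round(cosine(h_mixed, v_safe), 3), round(cosine(h_mixed, v_formal), 3))  # 0.348 -0.348
```

Real ICVs for different tasks will generally not be orthogonal, so combined vectors can interfere with each other. The toy setup only shows the mechanics of addition and subtraction.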

Theoretical and Practical Implications

The ICV approach has distinct practical implications. By abstracting task information into latent vectors, it circumvents context length limitations and operates entirely at inference time, without changes to the model architecture or its weights. Because the vector can be scaled up or down before it is added, ICV also gives fine-grained control over how strongly the task influences the output, a degree of flexibility absent in traditional ICL.
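That control can be made concrete with a scalar strength, written `lam` here. We believe the paper uses a comparable scaling hyperparameter, but the name and the values below are illustrative. In this sketch, where norm-preserving steering is an assumed detail and the vectors are random stand-ins, larger values rotate a latent state further toward the ICV while leaving its length unchanged, and `lam = 0` leaves it untouched.

```python
import numpy as np


def steer(h: np.ndarray, icv: np.ndarray, lam: float) -> np.ndarray:
    """Add lam * icv to h, then rescale to h's original L2 norm (assumed detail)."""
    shifted = h + lam * icv
    return shifted * (np.linalg.norm(h) / np.linalg.norm(shifted))


rng = np.random.default_rng(1)
dim = 16
icv = rng.normal(size=dim)
icv /= np.linalg.norm(icv)      # unit-norm stand-in for an extracted ICV
h = 4.0 * rng.normal(size=dim)  # stand-in latent state for one query token

lams = [0.0, 0.5, 1.0, 2.0, 4.0, 8.0]
alignment = []
for lam in lams:
    h_new = steer(h, icv, lam)
    # Cosine similarity with the ICV (icv already has unit norm).
    alignment.append(float(h_new @ icv / np.linalg.norm(h_new)))

for lam, a in zip(lams, alignment):
    print(f"lam={lam:<4} cos(h, icv)={a:+.3f}")
```

The printed alignment rises monotonically with `lam`. For a unit-norm vector v, the derivative of cos(h + lam*v, v) with respect to lam is (||h||^2 - (h.v)^2) / ||h + lam*v||^3, which is non-negative by the Cauchy-Schwarz inequality. This is why the strength behaves like a smooth dial on task influence rather than an on/off switch.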

Theoretically, the work suggests that manipulating latent features is a promising direction for steering LLM behavior. ICV offers a new lens on ICL, viewing it as a feature transformation process analogous to gradient descent updates. This view challenges existing accounts of ICL and invites further exploration of latent space dynamics.

Speculation on Future Developments

The reduced computational overhead and improved control over task execution suggest that ICV and similar latent space steering techniques could support scalable, adaptable LLM deployment in dynamic application domains. Future research might refine the vector extraction process to capture more nuanced task variables, or integrate these methods into multi-modal learning frameworks. Combining ICV with larger data contexts or hierarchical vector construction might also help with more heterogeneous tasks while preserving the model's existing capabilities.

In conclusion, this paper broadens the scope and application of in-context learning. By encoding tasks in latent states, ICV provides a framework for efficient and controllable task adaptation in LLMs. It offers an alternative to both prompt-based ICL and fine-tuning, and it sets a promising direction for future research and applications.