Overview of "JARVIS: Open-world Multi-task Agents with Memory-Augmented Multimodal LLMs"

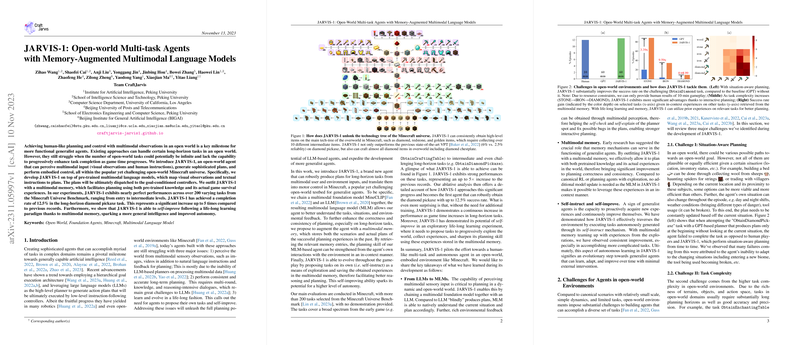

The paper "JARVIS: Open-world Multi-task Agents with Memory-Augmented Multimodal LLMs" presents an approach to building AI agents that can handle a diverse range of tasks in complex, open-world environments. Focusing on Minecraft, the authors introduce JARVIS, a system that uses pre-trained multimodal LLMs (MLMs) to interpret visual and textual inputs, generate detailed plans, and execute those plans in a human-like manner.

Main Contributions

The paper's main contributions center on integrating MLMs into a single framework that addresses the distinctive challenges of open-world environments:

- Multimodal Inputs and High-level Planning: JARVIS combines visual observations with natural-language inputs to generate action plans. This capability is critical in dynamic, complex open-world environments such as Minecraft.

- Memory-Augmented System: JARVIS incorporates a multimodal memory that lets the agent store and retrieve past experiences, improving its planning accuracy and adaptability over time without additional training.

- Improved Task Completion: JARVIS performs both short-horizon and long-horizon tasks at levels surpassing previous models. In particular, it achieves nearly perfect results on short-horizon tasks and significantly outperforms state-of-the-art models on long-horizon tasks such as obtaining a diamond pickaxe.
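
To make the memory-augmented planning idea more concrete, here is a minimal sketch of how a store-and-retrieve memory might work. It is not the authors' implementation. The class names (`MemoryEntry`, `MultimodalMemory`), the word-overlap text score, and the short float lists standing in for visual embeddings are all assumptions made for illustration. A real system would presumably use learned text and image encoders.

```python
from dataclasses import dataclass, field
from math import sqrt
from typing import List


@dataclass
class MemoryEntry:
    task: str                 # natural-language task description
    observation: List[float]  # hypothetical stand-in for a visual embedding
    plan: List[str]           # plan that succeeded in that situation


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def text_overlap(a: str, b: str) -> float:
    """Jaccard overlap of word sets (a crude proxy for text similarity)."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


@dataclass
class MultimodalMemory:
    entries: List[MemoryEntry] = field(default_factory=list)

    def store(self, task: str, observation: List[float], plan: List[str]) -> None:
        """Record a successful experience; no model weights are updated."""
        self.entries.append(MemoryEntry(task, list(observation), list(plan)))

    def retrieve(self, task: str, observation: List[float], k: int = 1) -> List[MemoryEntry]:
        """Return the k entries most similar in both text and observation."""
        ranked = sorted(
            self.entries,
            key=lambda e: text_overlap(task, e.task) + cosine(observation, e.observation),
            reverse=True,
        )
        return ranked[:k]


memory = MultimodalMemory()
memory.store("craft wooden pickaxe", [1.0, 0.0],
             ["collect logs", "craft planks", "craft sticks", "craft wooden pickaxe"])
memory.store("cook beef", [0.0, 1.0],
             ["kill cow", "craft furnace", "cook beef"])

# A new task in a similar situation retrieves the closest past plan,
# which a planner could then use as a reference when prompting the MLM.
best = memory.retrieve("craft stone pickaxe", [0.9, 0.1], k=1)[0]
print(best.task, best.plan)
```

In this sketch, the retrieved plan would be supplied to the planner as extra context. This is one way an agent could improve over time without additional training, since only the memory contents change.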

Numerical Results and Capabilities

JARVIS demonstrates its capabilities by completing over 200 tasks within Minecraft, an environment known for its complexity and vast number of possible tasks. Notably, JARVIS outperforms previous models on long-horizon tasks, achieving a success rate five times higher on the ObtainDiamondPickaxe task. This improvement highlights the potential of memory-augmented MLMs for managing complex, sequential decision-making over extended periods.

Theoretical and Practical Implications

Theoretically, this research suggests that combining multimodal inputs with adaptive memory systems can significantly enhance the problem-solving capacity of AI agents in open-world environments. Practically, such advances could inform AI models designed for real-world applications where adaptability and long-term planning are crucial.

Future Directions

The success of JARVIS opens several avenues for future research. One is further exploration and refinement of multimodal memory mechanisms to improve sequential decision-making. Another is extending JARVIS beyond Minecraft to other open-world scenarios, which could involve more complex interactions and richer environments.

In summary, the paper presents a well-rounded approach to the challenges of open-world AI and offers a blueprint for future work in this rapidly evolving field. As AI systems continue to advance, adaptable, memory-augmented models like JARVIS could become a cornerstone in developing intelligent agents that can navigate and master complex real-world environments.