Overview of "u-LLaVA: Unifying Multi-Modal Tasks via LLM"

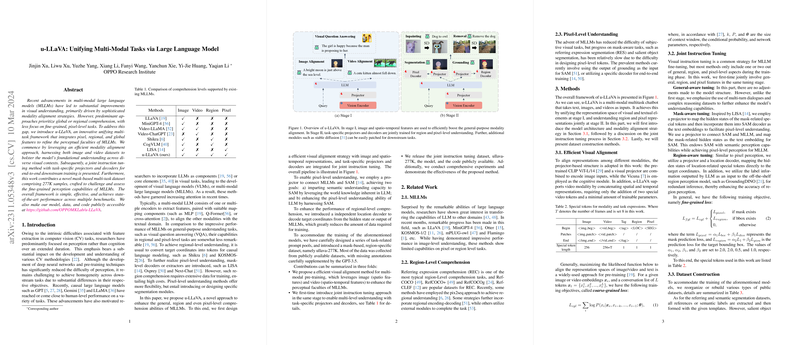

This paper introduces u-LLaVA, a framework that improves the comprehension abilities of Multi-Modal LLMs (MLLMs) by integrating pixel-level, regional, and global features. Prior MLLMs have substantially advanced visual understanding, but they mostly target global or regional comprehension and lack granularity on pixel-level tasks. u-LLaVA addresses this gap with a unified multi-task approach that combines these feature levels to strengthen the models' perceptual abilities.

Model Architecture and Methodology

u-LLaVA is trained in two stages, first aligning modalities and then calibrating fine-grained visual understanding:

- Efficient Visual Alignment: The first stage aligns visual and textual representations. u-LLaVA uses a projector-based design: a pre-trained CLIP ViT-L/14 encodes the input image, and a visual projector maps the resulting features into the LLM's input embedding space. Video is supported by concatenating spatio-temporal features, so image and video data can share the same alignment stage. Alignment is driven by a coarse-grained objective that maximizes the likelihood of the text conditioned on the image/video embeddings.

- Joint Instruction Tuning: The second stage tunes the model jointly on general, pixel-level, and regional tasks, with an emphasis on multi-turn dialogue and complex-reasoning data to foster deeper understanding. For pixel-level segmentation, a mask-aware tuning mechanism uses a projector to map the LLM's outputs into a downstream segmentation decoder. For regional comprehension, a location decoder decodes the target's coordinates, enabling precise localization.
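The efficient visual alignment stage described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the projector is assumed to be a single linear layer, the video path assumes Video-ChatGPT-style pooling (per-frame and per-patch averages concatenated into one token sequence), and the toy dimensions stand in for the real CLIP ViT-L/14 and LLM sizes. The loss is the standard next-token negative log-likelihood restricted to text positions, which is one way to read "maximizing the likelihood of text conditioned on image/video embeddings". The function names `linear_projector`, `video_tokens`, and `text_nll` are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows are tokens, columns are feature dimensions


def linear_projector(tokens: Matrix, weight: Matrix, bias: List[float]) -> Matrix:
    """Map visual tokens (width d_vis) into the LLM embedding space (width d_llm).

    Assumption: a single linear layer; weight has shape d_vis x d_llm.
    """
    columns = list(zip(*weight))  # d_llm columns, each of length d_vis
    return [
        [sum(x * w for x, w in zip(token, col)) + b for col, b in zip(columns, bias)]
        for token in tokens
    ]


def video_tokens(frames: List[Matrix]) -> Matrix:
    """Turn T frames of N patch features (T x N x D) into T + N tokens.

    Assumption (Video-ChatGPT-style pooling): averaging over patches in each
    frame gives T temporal tokens, averaging over frames for each patch gives
    N spatial tokens, and the two sets are concatenated.
    """
    num_frames, num_patches = len(frames), len(frames[0])
    dim = len(frames[0][0])
    temporal = [
        [sum(frame[n][d] for n in range(num_patches)) / num_patches for d in range(dim)]
        for frame in frames
    ]
    spatial = [
        [sum(frames[t][n][d] for t in range(num_frames)) / num_frames for d in range(dim)]
        for n in range(num_patches)
    ]
    return temporal + spatial


def text_nll(logits: Matrix, targets: List[int], is_text: List[bool]) -> float:
    """Mean next-token negative log-likelihood over text positions only.

    Positions holding visual tokens are masked out, so the loss only rewards
    predicting the text given the image/video embeddings.
    """
    total, count = 0.0, 0
    for row, target, keep in zip(logits, targets, is_text):
        if not keep:
            continue
        peak = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_z = peak + math.log(sum(math.exp(v - peak) for v in row))
        total += log_z - row[target]
        count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(linear_projector([[2.0, 3.0]], identity, [0.5, 0.5]))  # [[2.5, 3.5]]
    frames = [[[1.0], [3.0]], [[5.0], [7.0]]]  # T=2 frames, N=2 patches, D=1
    print(video_tokens(frames))  # [[2.0], [6.0], [3.0], [5.0]]
    uniform = [[0.0] * 4 for _ in range(3)]
    print(text_nll(uniform, [0, 1, 2], [False, True, True]))  # log(4), about 1.386
```

Masking the visual positions is the design choice that makes this a conditional objective: the visual embeddings shape the predictions but are never themselves prediction targets.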
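The joint instruction tuning stage described above can be illustrated with a small plain-Python sketch. Everything here is hypothetical: it assumes the LLM emits special placeholder tokens (the ids `SEG_TOKEN_ID` and `LOC_TOKEN_ID` are invented for this example), that the hidden state at each placeholder is passed through a projector to produce a prompt vector for a segmentation decoder, and that the location decoder is a small linear head whose sigmoid outputs are read as normalized box corners. The source does not specify these details.

```python
import math
from typing import List, Tuple

Vector = List[float]

# Hypothetical special-token ids; the real vocabulary may differ.
SEG_TOKEN_ID = 32000
LOC_TOKEN_ID = 32001


def gather_states(hidden: List[Vector], token_ids: List[int], special_id: int) -> List[Vector]:
    """Collect the LLM hidden states at every position holding special_id."""
    return [h for h, t in zip(hidden, token_ids) if t == special_id]


def project(vec: Vector, weight: List[Vector], bias: Vector) -> Vector:
    """Linear map (weight is len(vec) x out_dim), standing in for the mask-aware projector."""
    return [sum(v * w for v, w in zip(vec, col)) + b for col, b in zip(zip(*weight), bias)]


def decode_box(vec: Vector, weight: List[Vector], bias: Vector,
               width: int, height: int) -> Tuple[float, float, float, float]:
    """Hypothetical location head: 4 sigmoid outputs scaled to pixel corners."""
    x1, y1, x2, y2 = (1.0 / (1.0 + math.exp(-z)) for z in project(vec, weight, bias))
    # Order the corners so (x1, y1) is top-left and (x2, y2) is bottom-right.
    return (min(x1, x2) * width, min(y1, y2) * height,
            max(x1, x2) * width, max(y1, y2) * height)


if __name__ == "__main__":
    hidden = [[0.0, 0.0], [1.0, 2.0], [3.0, 4.0]]
    ids = [5, SEG_TOKEN_ID, LOC_TOKEN_ID]
    seg_state = gather_states(hidden, ids, SEG_TOKEN_ID)[0]  # [1.0, 2.0]
    print(project(seg_state, [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]], [0.0] * 3))  # [1.0, 2.0, 3.0]
    loc_state = gather_states(hidden, ids, LOC_TOKEN_ID)[0]
    zeros = [[0.0] * 4, [0.0] * 4]
    print(decode_box(loc_state, zeros, [0.0] * 4, 640, 480))  # (320.0, 240.0, 320.0, 240.0)
```

Routing through special tokens keeps the LLM's text interface unchanged: the same forward pass yields ordinary text plus the hidden states that the task-specific heads consume.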

Data and Training

A key contribution of this work is ullava-277K, a new mask-based multi-task dataset of 277K samples designed to challenge and expand the fine-grained perception capabilities of MLLMs. Training on this data combines the efficient alignment stage with task-specific joint instruction tuning.
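One reason a mask-based dataset suits multi-task training is that a single mask annotation can supervise more than one task. The sketch below assumes (this is not stated in the source) that regional samples can be derived from masks by taking the mask's tight bounding box; the record fields and prompt templates in the hypothetical `make_samples` helper do not reflect the actual ullava-277K format.

```python
from typing import Dict, List, Optional, Tuple

Mask = List[List[int]]  # binary mask, mask[y][x] in {0, 1}
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), max exclusive


def mask_to_box(mask: Mask) -> Optional[Box]:
    """Return the tight bounding box of the foreground, or None if the mask is empty."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for x in range(len(mask[0])) if any(row[x] for row in mask)] if mask else []
    if not ys:
        return None
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


def make_samples(image_id: str, expression: str, mask: Mask) -> List[Dict[str, object]]:
    """Hypothetical: turn one referring-expression mask into a pixel-level and a regional sample."""
    samples: List[Dict[str, object]] = [
        {"image": image_id, "task": "segmentation",
         "prompt": f"Please segment {expression} in the image.", "target": mask},
    ]
    box = mask_to_box(mask)
    if box is not None:  # empty masks yield no grounding sample
        samples.append({"image": image_id, "task": "grounding",
                        "prompt": f"Where is {expression}? Answer with a box.", "target": box})
    return samples


if __name__ == "__main__":
    mask = [
        [0, 0, 0, 0, 0],
        [0, 0, 1, 1, 0],
        [0, 0, 1, 0, 0],
        [0, 0, 0, 0, 0],
    ]
    print(mask_to_box(mask))  # (2, 1, 4, 3)
    print([s["task"] for s in make_samples("img_001", "the red cup", mask)])  # ['segmentation', 'grounding']
```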

Results and Implications

The empirical results show that u-LLaVA achieves state-of-the-art performance across multiple benchmarks, particularly on tasks requiring fine-grained visual comprehension. It outperforms existing MLLM approaches on benchmarks such as RefCOCO and RefCOCO+ (referring expressions) and DUT-OMRON (salient object detection), among others, indicating a clear advance on regional and pixel-level tasks.

These findings suggest that combining efficient modality alignment with joint instruction tuning can substantially improve the perceptual abilities of MLLMs. The approach also opens avenues for further research, particularly on modality fusion techniques and on efficient large-scale multi-task training frameworks.
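To make the benchmark results above more concrete, the sketch below implements metrics commonly used for these task types: cumulative IoU (cIoU) for referring segmentation on RefCOCO/RefCOCO+, accuracy at an IoU of at least 0.5 for box grounding, and mean absolute error (MAE) for salient object detection on DUT-OMRON. Which metrics and thresholds u-LLaVA actually reports should be checked against the paper; this is an illustrative implementation, not its evaluation code.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]
Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def cumulative_iou(preds: Sequence[Mask], gts: Sequence[Mask]) -> float:
    """cIoU: total intersection over total union, accumulated across the dataset."""
    inter = union = 0
    for pred, gt in zip(preds, gts):
        for p_row, g_row in zip(pred, gt):
            for p, g in zip(p_row, g_row):
                inter += int(bool(p) and bool(g))
                union += int(bool(p) or bool(g))
    return inter / union if union else 0.0


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def acc_at_iou(preds: Sequence[Box], gts: Sequence[Box], threshold: float = 0.5) -> float:
    """Fraction of predicted boxes whose IoU with the ground truth reaches the threshold."""
    hits = sum(box_iou(p, g) >= threshold for p, g in zip(preds, gts))
    return hits / len(gts) if gts else 0.0


def saliency_mae(pred: List[List[float]], gt: Mask) -> float:
    """Mean absolute error between a [0, 1] saliency map and a binary mask."""
    diffs = [abs(p - g) for p_row, g_row in zip(pred, gt) for p, g in zip(p_row, g_row)]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    print(cumulative_iou([[[1, 1], [0, 0]]], [[[1, 0], [0, 0]]]))  # 0.5
    print(box_iou((0, 0, 2, 2), (1, 0, 3, 2)))  # about 0.333
    print(acc_at_iou([(0, 0, 2, 2), (0, 0, 1, 1)], [(0, 0, 2, 2), (5, 5, 6, 6)]))  # 0.5
    print(saliency_mae([[1.0, 0.5]], [[1, 0]]))  # 0.25
```

cIoU pools pixels over the whole dataset before dividing, so large objects weigh more than in a per-image mean IoU; that difference is worth keeping in mind when comparing reported numbers.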

Future Prospects

Looking ahead, u-LLaVA provides a reference point for future work on MLLM capabilities. Possible directions include refining the alignment strategies, expanding the dataset to cover more complex visual scenarios, and better balancing general and task-specific training so that the model applies broadly across domains while continuing to improve.

In conclusion, u-LLaVA is a significant step toward more perceptive and versatile multi-modal LLMs. Its integration of pixel-level, regional, and global tasks into one cohesive framework offers a compelling model for future work in the field.