Analysis of "MFTCoder: Boosting Code LLMs with Multitask Fine-Tuning"

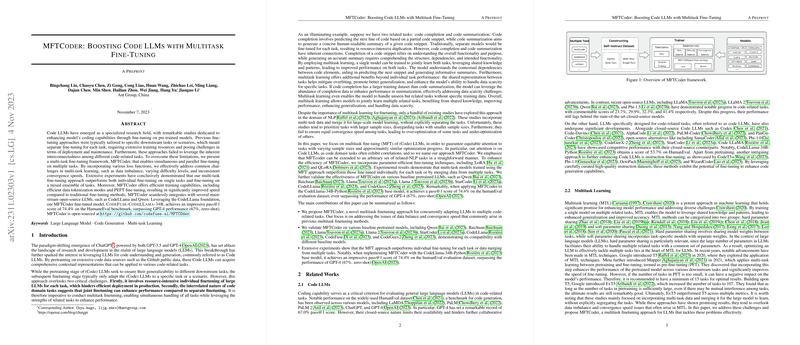

The paper "MFTCoder: Boosting Code LLMs with Multitask Fine-Tuning" presents a multitask fine-tuning framework for LLMs in the domain of code generation. The work addresses two limitations of traditional fine-tuning, which tunes a model separately for each task: the substantial computational cost of repeated tuning runs, and the failure to exploit how closely code-related tasks are connected. The authors propose MFTCoder, a framework that fine-tunes on multiple tasks concurrently, aiming for both efficient deployment and better performance.

Methodology

MFTCoder takes a multitask fine-tuning approach and incorporates several loss functions to handle three issues: data imbalance between tasks, differing task difficulties, and disparities in convergence speed. Training all tasks together in a single run gives a unified framework that aims to be both resource-efficient and strong in performance.
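To make the data-imbalance point concrete, here is a minimal sketch of one possible balancing scheme: average the per-token loss within each task first, then average across tasks, so a task with many tokens cannot dominate the objective. The function names and the toy numbers are illustrative assumptions, and this is not claimed to be the exact loss used in the paper.

```python
from collections import defaultdict


def token_averaged_loss(token_losses):
    """Baseline: average over all tokens, so large tasks dominate."""
    total = sum(sum(losses) for losses in token_losses)
    count = sum(len(losses) for losses in token_losses)
    return total / count


def task_balanced_loss(token_losses, task_ids):
    """Average per-token loss inside each task, then average over tasks.

    token_losses: one list of per-token losses for each sample
    task_ids:     one task label for each sample (hypothetical labels)
    """
    loss_sum = defaultdict(float)   # summed loss per task
    token_count = defaultdict(int)  # number of tokens per task
    for losses, task in zip(token_losses, task_ids):
        loss_sum[task] += sum(losses)
        token_count[task] += len(losses)
    per_task = {t: loss_sum[t] / token_count[t] for t in loss_sum if token_count[t]}
    if not per_task:
        raise ValueError("no tokens to average over")
    return sum(per_task.values()) / len(per_task), per_task


# Toy batch: a large, easy task and a small, hard one (made-up values).
losses = [[1.0] * 4, [1.0] * 4, [3.0] * 2]
tasks = ["completion", "completion", "unit_test"]

naive = token_averaged_loss(losses)
balanced, per_task = task_balanced_loss(losses, tasks)
print(naive, balanced, per_task)
```

In this toy batch the token-averaged loss is pulled toward the larger task, while the task-balanced loss weights both tasks equally. Handling differing difficulty or convergence speed would need additional weighting that this sketch does not attempt.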

The authors employed several techniques to enhance MFTCoder's efficiency:

- Instruction Dataset Construction: Utilizing self-instruct methodologies and agent-driven conversations to automate the creation of training datasets.

- Efficient Tokenization Modes: Implementing dynamic padding and packing strategies to minimize padding token overhead and improve training speed.

- Parameter-Efficient Fine-Tuning (PEFT): Adopting LoRA and QLoRA to reduce the number of trainable parameters and enable fine-tuning of large models on limited resources.

- Balanced Loss Functions: Designing several loss functions to keep training balanced across tasks, specifically addressing data imbalance and uneven convergence.
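The two tokenization modes in the list above can be compared by counting wasted padding tokens. The sketch below is an illustration under stated assumptions: it uses a greedy first-fit packing heuristic, hypothetical function names, and made-up sample lengths, none of which come from the paper.

```python
def dynamic_padding_cost(lengths, batch_size):
    """Padding tokens when each batch is padded to its own longest sample."""
    padding = 0
    for start in range(0, len(lengths), batch_size):
        batch = lengths[start:start + batch_size]
        longest = max(batch)
        padding += sum(longest - n for n in batch)
    return padding


def pack_samples(lengths, window):
    """Greedy first-fit: put each sample in the first window with enough room."""
    bins = []  # each bin holds the lengths of the samples packed into one window
    for n in lengths:
        if n > window:
            raise ValueError(f"sample of length {n} exceeds window {window}")
        for b in bins:
            if sum(b) + n <= window:
                b.append(n)
                break
        else:
            bins.append([n])  # no existing window had room
    return bins


def packing_cost(bins, window):
    """Padding tokens needed to fill every packed window to full length."""
    return sum(window - sum(b) for b in bins)


lengths = [6, 2, 5, 3, 4, 4]  # token counts of six samples (made up)
padded = dynamic_padding_cost(lengths, batch_size=2)
bins = pack_samples(lengths, window=8)
packed = packing_cost(bins, window=8)
print(padded, bins, packed)
```

Dynamic padding only pads up to the longest sample in each batch, whereas packing concatenates several samples into one fixed-length window. A real implementation also has to decide how to treat attention across sample boundaries inside a packed window, which this sketch ignores.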

Experimental Evaluation

The authors evaluated MFTCoder through extensive experiments on seven models and five code-related tasks: code completion, text-to-code generation, code comment generation, code translation, and unit test generation. Key findings include:

- MFTCoder outperformed individually fine-tuned models (SFT-S) and models fine-tuned on mixed tasks (SFT-Mixed).

- Notably, the MFT-trained model demonstrated superior generalization capabilities on unseen tasks, such as text-to-SQL generation.

- On the HumanEval benchmark, MFTCoder's CodeFuse-CodeLLama-34B achieved a pass@1 score of 74.4%, exceeding the performance of GPT-4 (67%, zero-shot).
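For readers unfamiliar with the metric, pass@k is commonly computed with the unbiased estimator introduced alongside HumanEval: for a problem with n generated samples of which c pass the unit tests, pass@k = 1 - C(n-c, k) / C(n, k), averaged over problems. The sketch below assumes that estimator; this summary does not state how many samples per problem were drawn for the reported scores, and the per-problem results shown are invented for illustration.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that passed the tests, k: budget.
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k):
    """Mean pass@k over a list of (n, c) pairs, one pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# With one sample per problem, pass@1 is simply the fraction of problems solved.
single_sample = [(1, 1), (1, 0), (1, 1), (1, 1)]  # made-up outcomes
score = benchmark_pass_at_k(single_sample, k=1)
print(score)
```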

Implications and Future Directions

The results indicate that multitask fine-tuning with MFTCoder boosts the performance of code LLMs while offering a scalable and efficient way to handle multiple code-oriented tasks. For both research and practical applications, this suggests that multitask frameworks can produce more flexible and robust models than task-by-task tuning.

Future developments may focus on refining task division strategies, exploring more adaptive multitask optimization techniques, and extending the framework to broader NLP and AI domains. Additionally, further investigation into the balancing of convergence speeds across diverse tasks could enhance adaptability and efficiency.

In summary, this paper lays a solid foundation for multitask learning in code LLMs and provides a detailed roadmap for improving their capabilities through balanced, multitask training.