Bias in Evaluating Profoundness: An Analysis of LLMs

The proliferation of LLMs such as GPT-4 has sparked interest in their potential to emulate human-like reasoning capacities. This paper scrutinizes the performance of GPT-4 and several other LLMs in discerning the profundity of mundane, motivational, and pseudo-profound statements, providing a nuanced understanding of their capabilities and limitations.

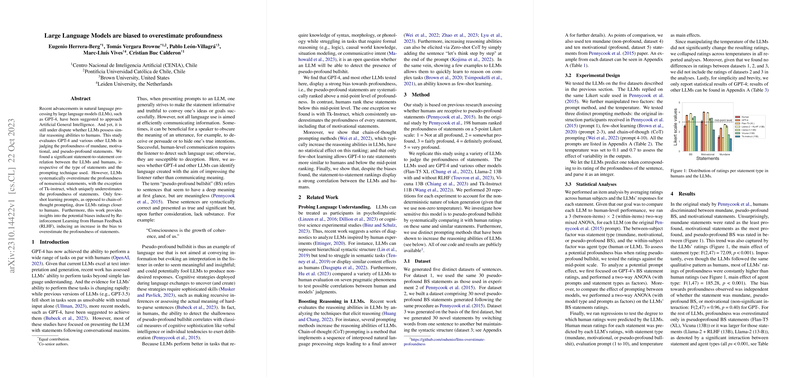

Main Findings

The research reveals a notable correlation between human and LLM judgments of statement profundity, which holds across statement types and prompt styles. It also highlights two biases. First, LLMs tend to overestimate the profoundness of nonsensical statements, whereas human evaluators typically rate such statements below the midpoint of a Likert scale. Second, Tk-Instruct is an anomaly: it consistently underestimates profoundness across all statement types, which suggests a distinct alignment behavior, possibly due to its extensive task fine-tuning.

The methodology employed few-shot learning and chain-of-thought (CoT) prompting to test whether these techniques could bring LLMs' assessments closer to human judgments. Notably, few-shot learning yielded ratings that resembled those of human evaluators more closely than other prompting techniques did, while CoT prompting had negligible impact.
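The three prompting styles can be illustrated with a minimal sketch. The instruction wording, the 1-to-5 scale, the function names, and the placeholder example statements are all assumptions made for illustration; they are not the prompts used in the paper.

```python
# Hypothetical prompt builders; the wording and the 1-5 scale are assumptions.
LIKERT_INSTRUCTION = (
    "Rate the profoundness of the following statement on a scale from "
    "1 (not at all profound) to 5 (very profound)."
)


def zero_shot_prompt(statement: str) -> str:
    """Ask for a rating with no examples and no reasoning request."""
    return f"{LIKERT_INSTRUCTION}\nStatement: {statement}\nRating:"


def few_shot_prompt(statement: str, examples: list[tuple[str, int]]) -> str:
    """Prepend already-rated example statements before the target statement."""
    shots = "\n".join(f"Statement: {s}\nRating: {r}" for s, r in examples)
    return f"{LIKERT_INSTRUCTION}\n{shots}\nStatement: {statement}\nRating:"


def cot_prompt(statement: str) -> str:
    """Ask the model to reason step by step before giving its rating."""
    return (
        f"{LIKERT_INSTRUCTION}\nStatement: {statement}\n"
        "Let's think step by step, then give the rating on the final line."
    )


# Placeholder examples, not items from the study.
examples = [
    ("Most people enjoy some sort of music.", 1),
    ("A river cuts through rock by persistence, not power.", 4),
]
target = "Hidden meaning transforms unparalleled abstract beauty."
prompt = few_shot_prompt(target, examples)
```

In this sketch the few-shot variant differs from the zero-shot one only in the rated examples placed before the target statement, which gives the model reference points for using the scale.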

Statistical Analyses

The statistical examination involved several analysis of variance (ANOVA) tests to identify biases and prompting effects. The results confirmed the initial supposition: GPT-4 and other LLMs tend to overestimate the profundity of pseudo-profound statements, diverging significantly from human evaluators. However, the strong rank-order correlation between human and LLM assessments suggests that LLMs, despite this bias, do recognize qualitative differences across statement types.
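A small sketch shows how a model can agree with humans in rank order while still overestimating in absolute terms. It computes a Spearman rank correlation and a mean rating difference using only the standard library. The ratings are made-up toy values, not data from the paper, and the helper names are hypothetical. The ANOVA tests themselves are not reproduced here.

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def mean_bias(model, human):
    """Average signed difference; positive means the model rates higher."""
    return mean(m - h for m, h in zip(model, human))


# Toy ratings for five statements (illustrative only, not study data).
human = [1.5, 2.0, 2.5, 3.5, 4.0]
model = [3.0, 3.2, 3.6, 4.2, 4.6]

rho = spearman(model, human)     # identical ordering gives a correlation of 1
bias = mean_bias(model, human)   # positive: the model rates every item higher
```

With these toy values the two rating lists are ordered identically, so the rank correlation is perfect, yet every model rating sits above the corresponding human rating. The two measures capture different things: the correlation reflects agreement on ordering, while the mean difference reflects the overestimation bias.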

Theoretical and Practical Implications

This paper underscores an essential limitation of LLMs: a susceptibility to overestimating profoundness, potentially attributable to training data characteristics or to reinforcement learning from human feedback (RLHF). This matters in practice because LLMs' propensity to misjudge nonsensical statements as meaningful may affect their deployment in domains that require nuanced communication and understanding, such as AI assistants, automated content moderation, and educational tools.

The finding that RLHF could intensify this bias raises questions about current LLM alignment methodologies and their unintended consequences. It points to the need for refined RLHF strategies that mitigate such biases while improving model interpretability and trustworthiness.

Future Directions

This analysis opens avenues for future research aimed at refining prompting techniques and learning strategies to reduce these biases. Exploring additional models and prompt configurations, including more advanced methods such as tree-of-thought prompting, could provide further insight into optimizing LLM performance. Moreover, a careful examination of the training regimens that shape model biases will be critical to advancing LLM reliability across diverse applications.

In conclusion, the paper highlights the complexity of developing truly human-like AI reasoning, which calls for ongoing scrutiny and innovation in training and operational methodologies. Understanding these models' biases and strengths is pivotal as their roles in society expand, demanding models that are not only syntactically proficient but also semantically discerning and aligned with human judgment.