Essay on "Tuna: Instruction Tuning using Feedback from LLMs"

The paper "Tuna: Instruction Tuning using Feedback from LLMs" explores how to refine instruction-tuned open-source LLMs such as LLaMA, aiming to improve response quality by using feedback from more capable models such as Instruct-GPT and GPT-4. It extends conventional instruction tuning with two ranking-based methods: probabilistic ranking and contextual ranking.

Methodology Overview

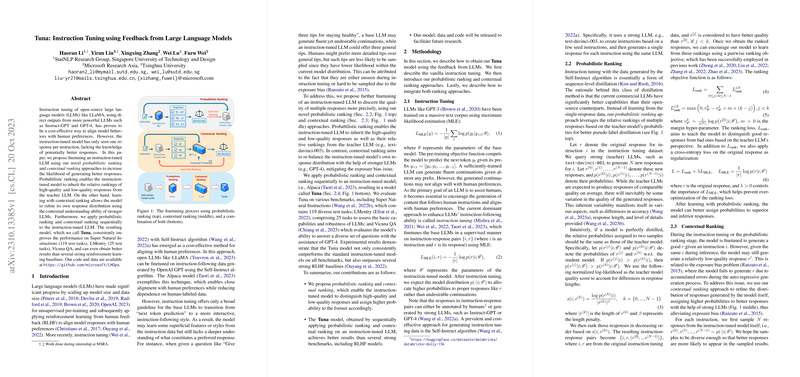

The crux of the methodology is to improve on conventional instruction tuning by adding two ranking strategies. Probabilistic ranking lets the model inherit the relative ranking of responses, as assessed for quality by a teacher LLM. Contextual ranking, by contrast, lets the model refine its own response distribution by drawing on the contextual understanding of a stronger LLM.

Probabilistic Ranking: The model is finetuned to reproduce the teacher LLM's ordering of candidate responses to the same instruction, so that it assigns higher likelihood to the responses the teacher rates as higher quality.
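
One common way to make a student model inherit a teacher's ranking is a pairwise hinge loss on length-normalized log-likelihoods. The sketch below illustrates the idea under that assumption; it is not necessarily the paper's exact objective. The function names `length_normalized_logprob` and `pairwise_rank_loss`, the `margin` parameter, and the toy numbers are all hypothetical.

```python
from typing import List, Sequence


def length_normalized_logprob(token_logprobs: Sequence[float]) -> float:
    """Average per-token log-probability of one response under the student."""
    return sum(token_logprobs) / len(token_logprobs)


def pairwise_rank_loss(scores_best_first: List[float], margin: float = 0.0) -> float:
    """Hinge loss summed over all pairs (i, j) where the teacher ranks i above j.

    scores_best_first: student scores ordered by the teacher's ranking
    (index 0 is the response the teacher rates highest).
    """
    loss = 0.0
    for i in range(len(scores_best_first)):
        for j in range(i + 1, len(scores_best_first)):
            # Penalize the student when a lower-ranked response scores
            # higher than (or within `margin` of) a higher-ranked one.
            loss += max(0.0, scores_best_first[j] - scores_best_first[i] + margin)
    return loss


# Toy example (assumed values): three responses, ordered by the teacher, best first.
student_token_logprobs = [
    [-0.5, -0.7, -0.6],  # teacher rank 1
    [-0.2, -0.4],        # teacher rank 2, but the student currently prefers it
    [-1.5, -1.1, -1.0],  # teacher rank 3
]
scores = [length_normalized_logprob(lp) for lp in student_token_logprobs]
loss = pairwise_rank_loss(scores)
```

Only the pair in which the student disagrees with the teacher (rank 2 scored above rank 1) contributes to the loss, so minimizing it pushes the student's ordering toward the teacher's. In practice such a term is usually combined with the standard language-modeling loss so the model does not drift from fluent generation.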

Contextual Ranking: The model first generates diverse responses of its own, which GPT-4 then ranks along dimensions such as accuracy, clarity, and depth. Because the model learns from a ranking of its own outputs, this mitigates exposure bias and improves its capacity to generate more desirable responses.
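
The data-preparation side of contextual ranking can be sketched as follows: sample several responses from the model, have a judge order them, and derive preference pairs for ranking-based finetuning. This is a minimal illustration, not the paper's pipeline. The helpers `rank_by_judge` and `preference_pairs` are hypothetical, and the fixed score table stands in for a real GPT-4 judgment.

```python
from typing import Callable, List, Tuple


def rank_by_judge(candidates: List[str], judge: Callable[[str], float]) -> List[str]:
    """Deduplicate candidates and return them ordered best first by judge score."""
    unique = list(dict.fromkeys(candidates))  # keeps first-seen order
    return sorted(unique, key=judge, reverse=True)


def preference_pairs(ranked: List[str]) -> List[Tuple[str, str]]:
    """All (preferred, rejected) pairs implied by a best-first ranking."""
    return [
        (ranked[i], ranked[j])
        for i in range(len(ranked))
        for j in range(i + 1, len(ranked))
    ]


# Hypothetical stand-in for a GPT-4 judgment: a fixed score table.
toy_scores = {"answer A": 7.0, "answer B": 9.0, "answer C": 4.0}

# Responses sampled from the model itself; duplicates can occur when sampling.
sampled = ["answer A", "answer B", "answer A", "answer C"]

ranked = rank_by_judge(sampled, judge=toy_scores.__getitem__)
pairs = preference_pairs(ranked)
```

Deduplicating before ranking avoids spending judge calls on identical samples and keeps a response from being paired against itself.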

Applying the two approaches sequentially produces the model named Tuna, which combines the strengths of probabilistic and contextual ranking.

Experimental Evaluation

The Tuna model was evaluated on three benchmarks, Super Natural Instructions, LMentry, and Vicuna QA, and improved consistently on all of them. It outperformed baseline instruction-tuned models and even several reinforcement learning baselines. The key findings indicate that Tuna not only generates responses that align more closely with human preferences but also maintains its performance across diverse task categories.

Theoretical and Practical Implications

From a theoretical standpoint, the paper suggests that combining ranking-based finetuning strategies improves the model's alignment with human preferences without a substantial increase in computational cost. Practically, the approach could benefit applications that require nuanced LLM interactions, such as dialogue systems or complex decision-support systems.

Future Directions

The paper opens avenues for further work on scaling the approach to larger datasets and on making the ranking mechanisms more robust through alternative evaluation metrics. Extending the approach to multilingual settings could also broaden its applicability.

In summary, Tuna refines instruction tuning by combining two complementary ranking processes, showing that open-source LLMs can be finetuned cost-effectively while narrowing the performance gap with high-capacity commercial LLMs. The work aligns with both current research trends and practical deployment needs.