Trainable Equivalent Transformation for Quantization of LLMs

The paper "TEQ: Trainable Equivalent Transformation for Quantization of LLMs" addresses the computational and memory demands of LLMs through low-bit quantization. It introduces the Trainable Equivalent Transformation (TEQ), a technique that preserves the FP32 precision of the model output while taking advantage of low-precision quantization, in particular 3- and 4-bit weight-only schemes. The paper reports that TEQ achieves performance competitive with state-of-the-art (SOTA) methods at significantly lower training cost.

Key Contributions

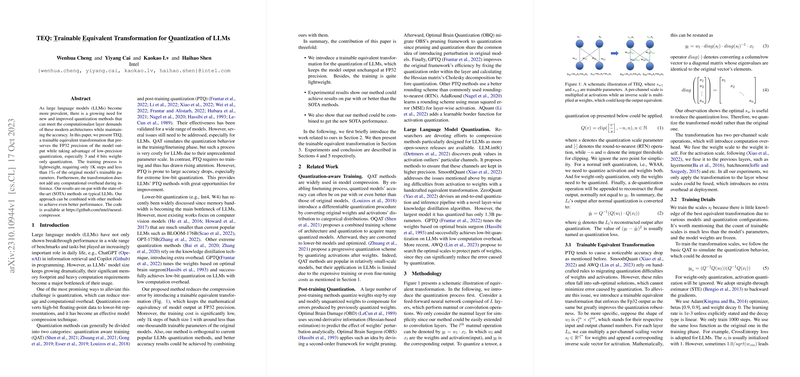

TEQ's primary contribution is a trainable transformation that leaves the model's FP32 output mathematically unchanged while preparing the weights for low-bit quantization, without adding computation at inference time. The paper highlights the following aspects:

- Lightweight Training: TEQ needs only about 1,000 training steps, and its trainable parameters amount to less than 0.1% of the original model's parameter count, so the adaptation process adds little computational cost.

- Combination with Other Methods: TEQ can be used together with other quantization techniques. The experimental results show accuracy on par with, or better than, existing methods.

- No Added Inference Overhead: The transformation adds no computation during inference, which is critical for the practical deployment of quantized models.
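To give a feel for why the trainable fraction can stay below 0.1%, the following back-of-the-envelope sketch counts one trainable scale per input channel of each linear layer. The layer shapes are hypothetical (loosely modeled on a decoder block of a multi-billion-parameter model), and the one-scale-per-input-channel accounting is an assumption made for illustration, not the paper's exact bookkeeping.

```python
# Hypothetical (in_features, out_features) shapes for the linear layers of one
# decoder block; these are illustrative assumptions, not taken from the paper.
layer_shapes = [
    (4096, 4096),   # attention query projection
    (4096, 4096),   # attention key projection
    (4096, 4096),   # attention value projection
    (4096, 4096),   # attention output projection
    (4096, 11008),  # MLP gate projection
    (4096, 11008),  # MLP up projection
    (11008, 4096),  # MLP down projection
]

# Assumption: one trainable scale per input channel of each layer.
scale_params = sum(d_in for d_in, _ in layer_shapes)
weight_params = sum(d_in * d_out for d_in, d_out in layer_shapes)
trainable_fraction = scale_params / weight_params

print(f"scales: {scale_params}, weights: {weight_params}")
print(f"trainable fraction: {trainable_fraction:.4%}")
```

Under these assumed shapes the scales are a small fraction of a percent of the weights, because each weight matrix contributes `d_in * d_out` parameters but only `d_in` scales.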

Methodology and Experiments

TEQ introduces trainable per-channel scales to make the weights more amenable to quantization. Because the scaling is an equivalent transformation, the model's output at FP32 precision is unchanged by it. The method applies to both matrix multiplication and convolutional layers, although the paper focuses primarily on the former.
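The equivalence property can be illustrated with a minimal sketch. It assumes the transformation divides each activation channel by a scale and multiplies the matching weight row by the same scale; the helper names (`matmul`, `scale_pair`), the tensor sizes, and the scale values are hypothetical, and plain Python lists are used to keep the example dependency-free.

```python
import random

def matmul(x, w):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(row[t] * w[t][j] for t in range(len(w))) for j in range(len(w[0]))]
            for row in x]

def scale_pair(x, w, s):
    """Divide activation column c by s[c] and multiply weight row c by s[c]."""
    x_scaled = [[v / s[c] for c, v in enumerate(row)] for row in x]
    w_scaled = [[v * s[c] for v in row] for c, row in enumerate(w)]
    return x_scaled, w_scaled

random.seed(0)
x = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(3)]  # activations
w = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(4)]  # weights
s = [0.5, 2.0, 1.5, 0.25]  # hypothetical per-channel scales (trainable in TEQ)

y_ref = matmul(x, w)
x_s, w_s = scale_pair(x, w, s)
y_eq = matmul(x_s, w_s)

# The two outputs agree up to floating-point rounding.
max_diff = max(abs(a - b) for ra, rb in zip(y_ref, y_eq) for a, b in zip(ra, rb))
print(max_diff)
```

Since any positive choice of `s` leaves the unquantized output unchanged, the scales are free to be tuned solely to reduce the error introduced when the scaled weights are quantized.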

In the empirical evaluations, TEQ was tested on several prominent LLM families, including LLaMA, BLOOM, and OPT, with sizes ranging from millions to billions of parameters. The evaluation covered language benchmarks such as HellaSwag and WinoGrande, among others, along with perplexity on datasets such as WikiText-2. The results consistently showed that TEQ matched or exceeded the round-to-nearest (RTN) baseline, and that combining TEQ with GPTQ often improved on GPTQ alone.
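For readers unfamiliar with the RTN baseline mentioned above, here is a minimal sketch of asymmetric min-max round-to-nearest quantization over one group of weights. The function names, the grouping, and the example values are illustrative assumptions; the paper's exact quantization configuration is not reproduced here.

```python
def rtn_quantize(weights, bits):
    """Map each weight to the nearest of 2**bits evenly spaced levels in [min, max]."""
    levels = 2 ** bits - 1
    lo, hi = min(weights), max(weights)
    step = (hi - lo) / levels or 1.0  # fall back to 1.0 for a constant group
    codes = [min(levels, max(0, round((w - lo) / step))) for w in weights]
    return codes, step, lo

def dequantize(codes, step, lo):
    """Recover approximate floating-point weights from integer codes."""
    return [c * step + lo for c in codes]

# Hypothetical weight group, chosen only for illustration.
group = [-0.82, -0.31, 0.05, 0.12, 0.47, 0.9, -0.05, 0.33]

for bits in (3, 4):
    codes, step, lo = rtn_quantize(group, bits)
    restored = dequantize(codes, step, lo)
    max_err = max(abs(w - r) for w, r in zip(group, restored))
    print(f"{bits}-bit codes: {codes}, max error: {max_err:.4f}")
```

The reconstruction error of each weight is bounded by half a quantization step, and the step size depends on the range of the group. This is why rescaling weights before rounding, as TEQ does with its trained scales, can change how much accuracy RTN loses.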

Implications and Future Directions

The paper provides a practical approach to reducing the resource demands of LLMs through quantization, which becomes increasingly important as model sizes continue to grow. By preserving output precision while using low-bit quantization, TEQ enables efficient deployment of large models in resource-constrained environments.

Considering the broader landscape of model compression techniques, TEQ represents a substantial step forward, particularly in scenarios where computational resources are limited. Future research could focus on extending TEQ's utility to other types of neural architectures, refining the scaling mechanisms, and optimizing integration with existing quantization pipelines.

In essence, TEQ offers an effective means of advancing the deployment of LLMs by addressing one of their primary constraints, resource demand, without significant sacrifices in model accuracy.