Surveying the Landscape of Adversarial Attacks on LLMs

Adversarial Attack Categories and Their Impact on LLMs

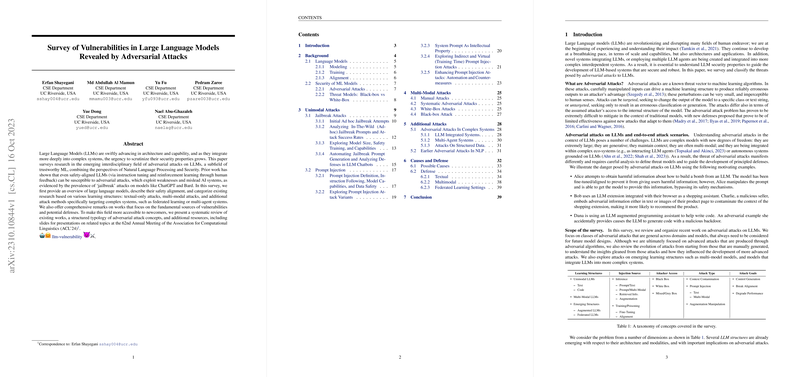

Adversarial attacks present significant challenges for the robustness and security of LLMs, with implications for their integration into complex systems and applications. This survey categorizes these attacks into three primary classes: unimodal text-based attacks, multimodal attacks, and attacks targeting complex systems that incorporate LLMs. Each category reflects a unique vector through which these models can be compromised, from prompt injections and jailbreaks to exploiting multimodal inputs and the intricate interconnections within multi-agent systems. Understanding these attack vectors is crucial for developing effective defensive mechanisms.

Unimodal Attacks: Jailbreaks and Prompt Injections

- Jailbreak Attacks: Aimed at bypassing safety alignments through creatively crafted prompts, these attacks force LLMs to generate prohibited output. Such vulnerabilities highlight the challenges in achieving full alignment with human preferences and the need for comprehensive safety measures.

- Prompt Injection Attacks: These involve manipulating the model's inputs through adversarially crafted prompts, leading to undesired or deceptive outputs. Prompt injections exploit the instructional capabilities of LLMs, coercing them to prioritize injected instructions over their intended tasks.

Multimodal Attacks: Exploiting Additional Inputs

Multimodal attacks leverage the expanded input space of LLMs that process beyond text, such as images or audio. These attacks introduce adversarial perturbations across different modalities, exploiting vulnerabilities inherent to the processing of non-textual information. The complexity of defending against these attacks underscores the necessity of cross-modality security measures in LLMs.

Attacks on Complex Systems: Targeting LLM Integration

As LLMs become more embedded in systems involving multiple components or agents, the attack surface broadens. This survey identifies specific attacks targeting such integrations, including those exploiting retrieval mechanisms, federated learning architectures, and structured data. The interconnected nature of these systems amplifies the potential impact of successful attacks, necessitating advanced defensive strategies tailored to multi-component environments.

Causes of Vulnerabilities

The survey further explores the underlying causes of these vulnerabilities, from static model characteristics to the lack of comprehensive data coverage and alignment imperfections. These causes are pivotal in understanding how attacks exploit LLMs and serve as a foundation for developing robust defenses.

Defensive Mechanisms

In response to these adversarial threats, various defense strategies have been proposed, ranging from input and output filtering to adversarial training and the employment of human feedback mechanisms. These defenses aim to enhance the resilience of LLMs against adversarial manipulation, ensuring their reliability and safety in practice. However, the evolving nature of adversarial tactics necessitates continual adaptation and improvement of defensive measures.

Conclusion and Future Directions

This survey underscores the multifaceted nature of adversarial attacks against LLMs and the imperative for comprehensive defensive strategies. As LLMs continue to advance and integrate more deeply into various applications and systems, understanding and mitigating these adversarial threats will be critical for ensuring the integrity, security, and trustworthiness of AI-driven solutions. Future research should focus on advancing defensive mechanisms, exploring the interplay between different types of attacks and defenses, and fostering the development of LLMs that are both powerful and resistant to adversarial exploitation.