An Analysis of Label-Supervised LLaMA Finetuning

This research paper offers an in-depth examination of a novel approach to finetuning large language models (LLMs): label-supervised adaptation, aimed at improving performance on sequence and token classification tasks. LLMs such as GPT-3, GPT-4, and LLaMA have shown strong language understanding and generation abilities, but their performance on sequence and token classification has been less impressive. To address this gap, the paper introduces Label Supervised LLaMA (LS-LLaMA) and a variant, Label Supervised unmasked LLaMA (LS-unLLaMA).

Finetuning LLMs typically relies on instruction-tuning, which trains a model to understand and complete tasks described through natural-language instructions. The paper argues, however, that instruction-tuning can underperform on classification tasks that require precise label prediction; prior observations indicate that instruction-tuned LLMs often fail to surpass traditional models such as BERT on these tasks. The proposed label-supervised adaptation instead finetunes LLaMA-2-7B directly against discriminative class labels.

Methodological Insights

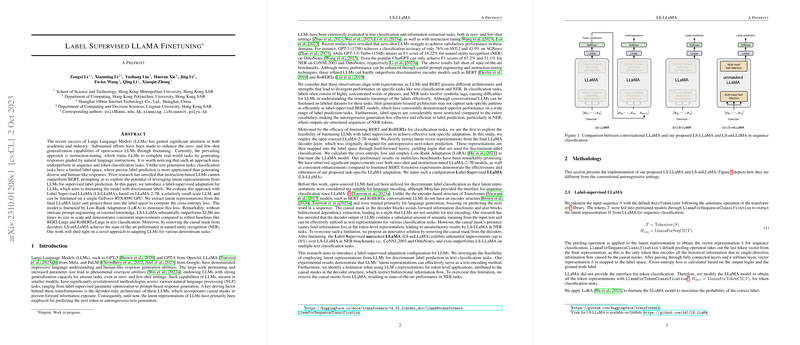

In LS-LLaMA, latent representations are extracted from the final LLaMA layer and projected into the label space, where a cross-entropy loss is computed. This loss is minimized by finetuning with Low-Rank Adaptation (LoRA), which trains small low-rank update matrices instead of the full model weights. The approach yields significant improvements on text classification tasks, outperforming LLMs an order of magnitude larger as well as strong baselines such as BERT and RoBERTa.
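The pipeline described above can be sketched in plain Python. This is a toy illustration under stated assumptions, not the authors' implementation: mean pooling over tokens is an assumed pooling choice, the helper names (`label_logits`, `cross_entropy`, `lora_weight`) are hypothetical, and the dimensions are tiny so the arithmetic can be checked by hand. A real setup would operate on LLaMA-2-7B hidden states with a tensor library. The `lora_weight` helper shows the standard LoRA reparameterization, W + (alpha / r) · B · A, in which only the low-rank factors A and B are trained.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def mean_pool(hidden_states: List[Vector]) -> Vector:
    """Average per-token final-layer vectors into one sequence vector (assumed pooling)."""
    n = len(hidden_states)
    return [sum(col) / n for col in zip(*hidden_states)]


def label_logits(hidden_states: List[Vector], W_label: Matrix, b_label: Vector) -> Vector:
    """Project the pooled representation into label space: one logit per class."""
    pooled = mean_pool(hidden_states)
    return [z + b for z, b in zip(matvec(W_label, pooled), b_label)]


def cross_entropy(logits: Vector, label: int) -> float:
    """Softmax cross-entropy for one example, using log-sum-exp for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def lora_weight(W: Matrix, A: Matrix, B: Matrix, alpha: float, r: int) -> Matrix:
    """Effective LoRA weight W + (alpha / r) * B @ A.

    W is frozen (d_out x d_in); B (d_out x r) and A (r x d_in) are the trainable
    low-rank factors. With B initialized to zeros, the adapted layer starts out
    identical to the pretrained one.
    """
    scale = alpha / r
    return [
        [W[i][j] + scale * sum(B[i][k] * A[k][j] for k in range(r)) for j in range(len(W[0]))]
        for i in range(len(W))
    ]


# Toy example: 3 tokens with 2-dimensional hidden states, 3 classes.
hidden = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_label = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b_label = [0.0, 0.0, 0.0]
logits = label_logits(hidden, W_label, b_label)
loss = cross_entropy(logits, label=0)
print(logits, loss)
```

Keeping W frozen and training only B and A is what makes the update parameter-efficient: for a d_out x d_in layer, the trainable count drops from d_out · d_in to r · (d_out + d_in).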

The paper further introduces LS-unLLaMA, which removes the causal mask from the LLM's decoder layers so that every token can attend to the entire sequence. This change strengthens the model on token-level tasks such as named entity recognition (NER). The NER results are particularly compelling: LS-unLLaMA achieves state-of-the-art performance, which points to the importance of the global token dependencies that bidirectional self-attention makes available.
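The difference between a causal and an unmasked decoder comes down to which positions each query may attend to. The single-head attention sketch below is illustrative only: it omits learned projections, multiple heads, and positional encodings, and the function names are hypothetical. Setting `causal=False` mimics the mask removal described for LS-unLLaMA.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q: List[Vector], k: List[Vector], v: List[Vector], causal: bool) -> List[Vector]:
    """Single-head scaled dot-product attention over a sequence of token vectors.

    causal=True: token i attends only to positions 0..i (standard decoder).
    causal=False: every token attends to all positions (unmasked, bidirectional).
    """
    n, d = len(q), len(q[0])
    outputs = []
    for i in range(n):
        visible = range(i + 1) if causal else range(n)
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) for j in visible]
        weights = softmax(scores)
        # Weighted sum of value vectors over the visible positions only.
        outputs.append(
            [sum(w * v[j][c] for w, j in zip(weights, visible)) for c in range(len(v[0]))]
        )
    return outputs


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy token vectors used as q, k, and v
causal_out = self_attention(x, x, x, causal=True)
bidir_out = self_attention(x, x, x, causal=False)
print(causal_out[0], bidir_out[0])
```

With the mask, the first token can only see itself, so its output is just its own value vector; without it, the first token's output already mixes in information from later tokens. The last token sees the whole sequence in both modes, which is why the mask matters most for tokens early in a sentence.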

Experimental Results and Implications

The experiments underscore the effectiveness of label-supervised adaptation over both instruction-tuning and conventional discriminative baselines. LS-LLaMA outperformed several baseline models across a range of text classification tasks, demonstrating the versatility and robustness of the method. Notably, it achieved stronger results on datasets with more demanding label requirements, such as the fine-grained five-class SST-5 sentiment benchmark and domain-specific classification tasks.

For token classification, LS-unLLaMA showed substantial gains. In autoregressive generation, the causal mask prevents each token from attending to the tokens that follow it, so its representation cannot draw on right-hand context. Removing the mask lets every token use its full bidirectional context, which produced significant performance improvements on NER tasks.
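At the token level, the same idea applies per position: each token's final-layer vector is projected to tag logits independently. The sketch below is a hypothetical illustration: the BIO tag set, the helper names, and the convention of marking ignored positions with the label -100 (borrowed from the default `ignore_index` of PyTorch's `CrossEntropyLoss`) are assumptions, not details taken from the paper. In LS-unLLaMA these hidden states would come from the unmasked decoder, so each vector already reflects context on both sides.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]

IGNORE_INDEX = -100  # assumed marker for positions excluded from the loss


def token_logits(hidden_states: List[Vector], W: Matrix, b: Vector) -> List[Vector]:
    """Project every token's hidden vector to one logit per tag."""
    return [
        [sum(w * h for w, h in zip(row, vec)) + bias for row, bias in zip(W, b)]
        for vec in hidden_states
    ]


def predict_tags(hidden_states: List[Vector], W: Matrix, b: Vector, tags: List[str]) -> List[str]:
    """Pick the highest-scoring tag for each token independently."""
    preds = []
    for logits in token_logits(hidden_states, W, b):
        best = max(range(len(logits)), key=logits.__getitem__)
        preds.append(tags[best])
    return preds


def token_loss(hidden_states: List[Vector], W: Matrix, b: Vector, labels: List[int]) -> float:
    """Mean softmax cross-entropy over tokens whose label is not IGNORE_INDEX."""
    losses = []
    for logits, label in zip(token_logits(hidden_states, W, b), labels):
        if label == IGNORE_INDEX:
            continue
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        losses.append(log_sum_exp - logits[label])
    return sum(losses) / len(losses)  # assumes at least one non-ignored token


TAGS = ["O", "B-PER", "I-PER"]  # hypothetical NER tag set
W_tag = [[0.0, 0.0], [2.0, 0.0], [0.0, 2.0]]
b_tag = [0.5, 0.0, 0.0]
hidden = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]  # toy per-token vectors
print(predict_tags(hidden, W_tag, b_tag, TAGS))
```

Predicting each tag independently keeps the head simple; whether the underlying vectors carry right-hand context is decided entirely by the attention mask upstream.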

Conclusions and Future Prospects

This research invites a reconsideration of LLM finetuning strategies, highlighting the potential of label-supervised adaptation to overcome the limitations of traditional tuning techniques. The findings also suggest promising directions for improving LLM effectiveness in applications beyond sequence and token classification.

Looking ahead, applying label-supervised adaptation to a broader range of LLM architectures could yield valuable insights into model efficiency and performance. In addition, finetuning larger LLMs under label supervision to study how the approach scales could open opportunities for more sophisticated applications in natural language processing and beyond.

Overall, the proposed LS-LLaMA and LS-unLLaMA approaches contribute meaningfully to the discourse surrounding LLM application and optimization, paving the way for more refined and task-specific model tuning methodologies in artificial intelligence.