Overview of UniAudio: A Universal Audio Foundation Model

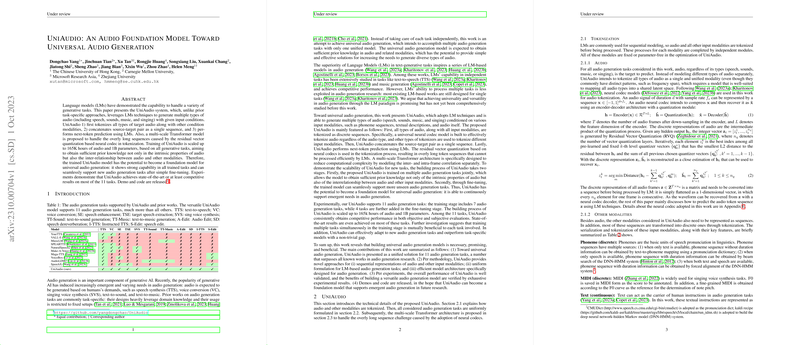

The paper presents UniAudio, a model that aims at universal audio generation by borrowing techniques from large language models (LLMs). Rather than targeting a single task, it handles the generation of speech, sounds, music, and singing within one unified framework. The model draws on generative knowledge shared across these audio types and is conditioned on inputs such as phoneme sequences, textual descriptions, and other audio.

Methodology

UniAudio's approach can be summarized through three key innovations:

- Universal Tokenization: All input modalities are tokenized into discrete sequences. A universal neural codec model maps the different audio types into a shared latent space and quantizes them with residual vector quantization. Because residual vector quantization emits several tokens per audio frame, the resulting token sequences are long, which motivates the multi-scale Transformer architecture.

- Multi-scale Transformer Architecture: To manage these lengthy token sequences, UniAudio implements a global-local Transformer mechanism. The global Transformer models inter-frame correlations, while the local Transformer models intra-frame dependencies among the tokens of a single frame. This split reduces computational cost relative to attending over the full flattened sequence.

- Unified Task Formulation: Every audio generation task is cast as the same sequence modeling problem by concatenating the tokenized source and target sequences. Because all tasks share this format, a single model can be trained and run on all of them.
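
As an illustration of how these three ideas fit together, the following Python sketch is a toy version, not the paper's implementation. The codebooks are tiny hand-written tables rather than a trained codec, the `<tts>` task tag and the phoneme tokens are hypothetical placeholders, and `attention_pairs` is only a back-of-envelope count that assumes the global Transformer sees one position per frame and the local Transformer sees the `n_q` codes of one frame.

```python
def nearest(codebook, vec):
    """Index of the codebook entry closest to vec (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)),
    )


def rvq_encode(frame, codebooks):
    """Residual VQ: each stage quantizes the residual left by the previous stage."""
    residual = list(frame)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codes, codebooks):
    """Reconstruct a frame by summing the selected entry of every stage."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


def build_sequence(task_tag, source_tokens, target_frames):
    """Concatenate a task tag, the source tokens, and the flattened target codes."""
    flat_target = [code for frame in target_frames for code in frame]
    return [task_tag] + list(source_tokens) + flat_target


def attention_pairs(num_frames, n_q):
    """Rough pairwise-interaction counts: flat sequence vs. global-local split."""
    flat = (num_frames * n_q) ** 2
    multi_scale = num_frames ** 2 + num_frames * n_q ** 2
    return flat, multi_scale


# Two quantizer stages (n_q = 2), each with two 2-dimensional entries.
codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 0.0], [0.25, -0.25]],
]
frames = [[1.2, 0.8], [0.1, -0.1]]  # stand-in for codec encoder outputs

target_codes = [rvq_encode(frame, codebooks) for frame in frames]
sequence = build_sequence("<tts>", ["HH", "AH", "L", "OW"], target_codes)

print(target_codes)
print(sequence)
print(attention_pairs(num_frames=100, n_q=3))
```

With 100 frames and 3 codes per frame, the flat count is 90,000 pairs against 10,900 for the global-local split. This conveys why splitting the sequence helps, although real costs depend on details this sketch ignores.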

Experiments and Results

UniAudio was scaled to 1 billion parameters and trained on 165,000 hours of audio data covering a wide array of tasks. The model was evaluated on 11 tasks across the training and fine-tuning stages, achieving state-of-the-art or competitive results. Notably, joint training benefited the tasks mutually: the jointly trained model outperformed task-specific models.

Key experimental outcomes:

- Text-to-Speech and Voice Conversion: UniAudio achieved superior or comparable Word Error Rate (WER) and speaker similarity scores relative to strong baselines such as VALL-E and NaturalSpeech 2.

- Speech Enhancement and Target Speaker Extraction: UniAudio achieved high DNSMOS scores, indicating strong perceptual quality. Traditional signal-level metrics are a less natural fit for LLM-based generation than for conventional methods, so the perceptual scores better reflect where the generative approach has an advantage.

- Text-to-Sound and Text-to-Music Generation: The model performed competitively on these tasks and on the tasks newly introduced during the fine-tuning phase, demonstrating its scalability and adaptability.
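
For readers unfamiliar with WER, it is the word-level edit distance between a reference transcript and a hypothesis, divided by the number of reference words. The sketch below shows that standard definition only. How the paper obtains its hypothesis transcripts (for generated speech this typically means running a speech recognizer on the output) is not covered here, and the function name and example sentences are illustrative.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

Lower is better: an exact match gives 0.0, and a hypothesis with many insertions can exceed 1.0.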

Implications and Future Research

UniAudio has implications for future audio generation models. It points toward universal foundation models that can support emerging audio generation needs through fine-tuning. The unified approach also makes broader and more efficient use of data, and it offers insight into jointly training diverse tasks under a single framework.

Looking ahead, possible extensions of UniAudio include incorporating unlabeled data to improve robustness, scaling the foundation model to more audio generation tasks, and integrating domain-specific models for application-specific refinements.

In conclusion, UniAudio is a notable advance in audio foundation models, showing that LLM techniques extend beyond text to audio. Its release, along with accompanying code and demonstrations, is intended to support further research on universal audio generation.