Adapted LLMs in Clinical Text Summarization: A Comparative Study Against Medical Experts

This paper explores the application of adapted LLMs to clinical text summarization and evaluates their performance against medical experts. The authors apply adaptation techniques to eight LLMs across four clinical summarization tasks: radiology reports, patient questions, progress notes, and doctor-patient dialogue. The main goal is to determine how well these LLMs summarize clinical text compared with summaries written by medical experts. The evaluation combines quantitative NLP metrics with a reader study in which practicing clinicians judge the quality of machine-generated summaries.

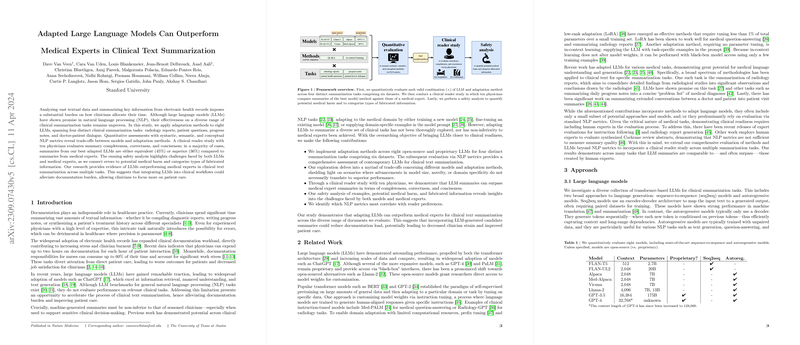

Methodology

The paper employs two primary adaptation methods for LLMs: in-context learning (ICL) and quantized low-rank adaptation (QLoRA). ICL places worked examples directly in the model's prompt without altering model weights, while QLoRA fine-tunes the LLM by training a small set of low-rank adapter weights on top of a quantized, frozen base model. The authors test these methods on both open-source and proprietary models, such as FLAN-T5, Llama-2, and GPT-4, which differ in parameter count and architecture.
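To make the ICL idea concrete, the following is a minimal sketch of how a few-shot summarization prompt might be assembled. The function name `build_icl_prompt`, the `Input:`/`Summary:` template, and the example texts are illustrative assumptions, not the prompt format used in the paper.

```python
from typing import List, Tuple


def build_icl_prompt(instruction: str,
                     examples: List[Tuple[str, str]],
                     query: str) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the new input.

    The template is hypothetical; no model weights are touched, which is
    the defining property of in-context learning.
    """
    parts = [instruction.strip(), ""]
    for source, summary in examples:
        parts.append(f"Input: {source.strip()}")
        parts.append(f"Summary: {summary.strip()}")
        parts.append("")  # blank line between examples
    parts.append(f"Input: {query.strip()}")
    parts.append("Summary:")  # left open for the model to complete
    return "\n".join(parts)


# Made-up texts for illustration only (not from any clinical dataset).
examples = [
    ("Lungs are clear. Heart size is normal. No effusion.",
     "No acute cardiopulmonary process."),
    ("Mild degenerative changes of the spine. No fracture.",
     "No acute fracture."),
]
prompt = build_icl_prompt(
    "Summarize the radiology findings into an impression.",
    examples,
    "No focal consolidation. No pneumothorax.",
)
print(prompt)
```

Varying the number of entries in `examples` is the only knob here, which is why ICL is cheap to apply but limited by the model's context length.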

For evaluation, the authors use well-established NLP metrics, such as BLEU, ROUGE-L, BERTScore, and MEDCON, to assess the syntactic, semantic, and conceptual quality of generated summaries. In addition, a clinical reader study has physician experts rate summaries on completeness, correctness, and conciseness, which ties the evaluation to real-world clinical use.
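As an illustration of what a syntactic overlap metric measures, here is a minimal sketch of a ROUGE-L style score based on the longest common subsequence (LCS) of tokens. It assumes lowercase whitespace tokenization and a plain F1 combination of precision and recall; it is a simplified stand-in, not the official ROUGE scorer or the exact configuration used in the paper.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, using a rolling DP row."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-L: F1 of LCS-based precision and recall."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)  # share of candidate tokens in the LCS
    recall = lcs / len(ref)      # share of reference tokens in the LCS
    return 2 * precision * recall / (precision + recall)


# Made-up example: the candidate drops one word from the reference.
score = rouge_l_f1("no acute process", "no acute cardiopulmonary process")
print(round(score, 3))  # 0.857
```

Because the score depends only on token order and overlap, a summary can score well while missing a clinically important concept, which is one reason to pair such metrics with semantic or concept-level measures and with expert review.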

Results

Quantitative results indicate that adapted LLMs, particularly proprietary models like GPT-4, perform competitively against medical experts when paired with an appropriate adaptation strategy. The paper's findings suggest that LLMs generally produced summaries that were more complete, correct, and concise than those written by clinicians. Fine-tuning also yielded notable improvements in model performance, especially in the accuracy and level of detail of the summaries.

The clinical reader study substantiated these quantitative metrics, showing that clinicians often preferred LLM summaries over those written by their peers. This finding highlights the potential for LLMs to alleviate the documentation burden in clinical workflows, allowing healthcare professionals to spend more of their time on patient care and less on administrative tasks.

Implications and Future Developments in AI

The implications of this research are both theoretical and practical. Theoretically, the paper further validates the adaptability and efficacy of LLMs in specialized tasks outside their original training data, demonstrating their utility in fields that require domain-specific language understanding. Practically, integrating LLM-generated summaries into clinical workflows could substantially reduce the time burden on clinicians, thereby mitigating stress and the documentation errors that can adversely affect patient care.

Looking forward, this paper opens avenues for further research into optimizing LLM adaptation methods for varied and complex clinical datasets, expanding the scope to include more intricate clinical narratives, such as multi-specialty reports and longitudinal patient data. Additionally, developments in LLM architectures with extended context windows could enhance their summarization capacity, enabling full comprehension of longer clinical texts. However, deploying such solutions in healthcare systems will require addressing data privacy concerns and ensuring the transparency and interpretability of model outputs in critical clinical decision-making contexts.

By examining these critical intersections of AI and healthcare documentation, the paper sets a robust foundation for the future application and even widespread adoption of LLMs in clinical settings.