Point-Bind & Point-LLM: Advancing 3D Multi-modal Understanding and Instruction Following

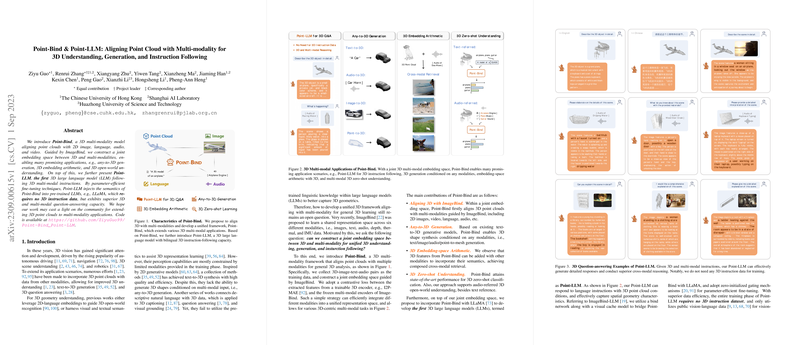

The paper, by Ziyu Guo, Renrui Zhang, and colleagues, introduces Point-Bind and Point-LLM, two models at the intersection of 3D point cloud analysis and multi-modal understanding and generation. Both align 3D data with other modalities such as images, text, and audio to support 3D understanding and multi-modal instruction following.

Point-Bind: A Multi-modal Framework for 3D Data

Point-Bind is a 3D multi-modal framework that aligns 3D point clouds with 2D images, text descriptions, and audio signals. Guided by ImageBind, it builds a shared embedding space that supports 3D generation from any modality, embedding-space arithmetic, and zero-shot 3D understanding.

Data Collection and Alignment Process

The key step in building Point-Bind is constructing a joint embedding space through contrastive learning. For this, the authors assembled a dataset of 3D-image-text-audio pairs:

- 3D-Image-Text Pairs: Taken from the ULIP dataset, which pairs 3D models from ShapeNet with rendered 2D images and text descriptions.

- 3D-Audio Pairs: Collected by mapping ESC-50's audio clips to the corresponding 3D categories from ShapeNet.

- Construction of Multi-modal Pairs: Joining the 3D-audio pairs with the corresponding 3D-image-text entries yields the 3D-image-text-audio pairs used for training.
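The pairing step can be pictured as a join on category labels. The sketch below is a minimal illustration under stated assumptions, not the authors' pipeline: the record layouts, the `Quadruple` and `build_quadruples` names, and the choice to pair every shape with every audio clip of its category are all hypothetical. Shapes whose category has no ESC-50 audio are simply dropped.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Quadruple:
    """One hypothetical training sample: a 3D shape with its image, text, and audio."""
    shape_id: str
    image: str
    text: str
    audio: str


def build_quadruples(image_text_pairs, audio_clips):
    """Join ULIP-style (shape_id, category, image, text) records with
    ESC-50-style (category, audio_path) clips on the shared category label.

    Assumption: each shape is paired with every clip of its category.
    """
    # Index audio clips by category for a hash join.
    audio_by_category = defaultdict(list)
    for category, clip in audio_clips:
        audio_by_category[category].append(clip)

    quads = []
    for shape_id, category, image, text in image_text_pairs:
        # .get() avoids inserting empty lists for categories without audio.
        for clip in audio_by_category.get(category, []):
            quads.append(Quadruple(shape_id, image, text, clip))
    return quads
```

For example, with an airplane, a chair, and a car shape but audio only for airplanes and cars, the chair produces no quadruple.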

Point-Bind then trains a 3D encoder on this dataset alongside ImageBind's pre-trained encoders for the other modalities. A contrastive loss aligns 3D point clouds with images, text, and audio in a single representation space.
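Contrastive alignment of this kind is commonly implemented with a symmetric InfoNCE loss, as in CLIP. The following sketch assumes that form: each point-cloud embedding is pulled toward the image, text, or audio embedding of the same object and pushed away from the other items in the batch. The equal weighting of the three terms, the temperature of 0.07, and the function names are assumptions for illustration; in practice the embeddings would come from the trainable 3D encoder and ImageBind's encoders, which are not shown.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce(anchors, targets, temperature=0.07):
    """Symmetric InfoNCE: anchors[i] and targets[i] form a positive pair,
    and every other targets[j] in the batch acts as a negative."""
    a = [l2_normalize(v) for v in anchors]
    t = [l2_normalize(v) for v in targets]
    n = len(a)
    # Cosine-similarity logits scaled by the temperature.
    logits = [[dot(a[i], t[j]) / temperature for j in range(n)] for i in range(n)]

    def cross_entropy(rows):
        # Mean cross-entropy where the correct class for row i is column i.
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)  # subtract the max for numerical stability
            log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_sum_exp - row[i]
        return total / len(rows)

    transposed = [list(col) for col in zip(*logits)]
    # Average the anchor->target and target->anchor directions.
    return 0.5 * (cross_entropy(logits) + cross_entropy(transposed))


def point_bind_loss(pc_emb, img_emb, txt_emb, aud_emb, temperature=0.07):
    """Hypothetical total loss: align 3D with each other modality, equally weighted."""
    return (info_nce(pc_emb, img_emb, temperature)
            + info_nce(pc_emb, txt_emb, temperature)
            + info_nce(pc_emb, aud_emb, temperature))
```

When positives are already aligned the loss approaches zero, and when the batch is mismatched it grows roughly with `1 / temperature`.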

Key Contributions and Applications

Point-Bind enables several applications:

- Any-to-3D Generation: Whereas existing models are limited to text-to-3D synthesis, Point-Bind generates 3D shapes from diverse inputs, including text, images, audio, and other 3D point clouds.

- 3D Embedding-space Arithmetic: 3D features can be added to embeddings from other modalities to form composed queries for cross-modal retrieval.

- Zero-shot 3D Understanding: Achieves state-of-the-art accuracy in zero-shot 3D classification by matching point-cloud embeddings against text embeddings of category names.
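Zero-shot classification in a shared embedding space usually reduces to nearest-neighbor matching: embed one text prompt per category (for example a template such as "a point cloud of a {category}"), embed the point cloud, and pick the category with the highest cosine similarity. The sketch below assumes the embeddings are already computed; the toy vectors stand in for encoder outputs, and the function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / den


def zero_shot_classify(point_embedding, class_text_embeddings):
    """Return the best category and all scores.

    class_text_embeddings maps category name -> text embedding of its prompt.
    """
    scores = {c: cosine(point_embedding, e) for c, e in class_text_embeddings.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Toy example: the point-cloud embedding lies closest to the "chair" prompt.
label, scores = zero_shot_classify(
    [0.9, 0.1, 0.0],
    {"chair": [1.0, 0.0, 0.0], "car": [0.0, 1.0, 0.0], "lamp": [0.0, 0.0, 1.0]},
)
```

No classifier head is trained, which is why adding a new category only requires embedding a new prompt.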

Point-LLM: 3D LLM for Multi-modal Instruction Following

Building on Point-Bind, the authors introduce Point-LLM, which they present as the first 3D LLM that follows instructions with multi-modal conditions. It uses parameter-efficient fine-tuning to inject 3D semantics into pre-trained LLMs such as LLaMA.

Training and Efficiency

Point-LLM's training emphasizes data- and parameter-efficiency:

- No Need for 3D-specific Instruction Data: Point-LLM is tuned only on public vision-language data. Because Point-Bind places 3D data in the same embedding space as images, the alignment learned from vision-language data transfers to 3D inputs.

- Efficient Fine-tuning Techniques: Uses zero-initialized gating, LoRA, and bias-norm tuning, so only a small fraction of the LLM's parameters is updated.
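To make the first two techniques concrete, here is a minimal pure-Python sketch. `ZeroGatedInjection` adds a projected 3D feature to an LLM hidden state through a scalar gate initialized to zero (the idea popularized by LLaMA-Adapter), and `LoRALinear` adds a trainable low-rank update `B @ A` to a frozen weight, with `B` initialized to zero. Both start out as exact no-ops, so fine-tuning begins from the unmodified pre-trained LLM. The class names, the small Gaussian initialization of `A`, and where Point-LLM actually places these modules are assumptions here. Bias-norm tuning, which only unfreezes bias and normalization-scale parameters, is not shown.

```python
import random


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class ZeroGatedInjection:
    """hidden' = hidden + gate * (proj @ feat_3d), with gate starting at 0.0.

    At initialization the LLM hidden state passes through unchanged; training
    gradually opens the gate to let 3D information in.
    """

    def __init__(self, proj):
        self.proj = proj  # trainable: 3D feature dim -> hidden dim
        self.gate = 0.0   # trainable scalar, zero-initialized

    def __call__(self, hidden, feat_3d):
        injected = matvec(self.proj, feat_3d)
        return [h + self.gate * v for h, v in zip(hidden, injected)]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""

    def __init__(self, W, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        out_dim, in_dim = len(W), len(W[0])
        self.W = W  # frozen pre-trained weight
        # A gets small random values; B starts at zero so the update is zero.
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(out_dim)]
        self.scale = alpha / rank

    def __call__(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

For a 4096 x 4096 projection with rank 8, LoRA trains 2 x 4096 x 8 = 65,536 values instead of about 16.8 million, which is the kind of saving the bullet above refers to.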

Practical Applications

Point-LLM produces detailed responses and can reason across modalities:

- 3D Question Answering: Gives descriptive answers to complex questions about 3D inputs, without any 3D instruction-specific training data.

- 3D and Multi-modal Reasoning: Accepts a supplementary image or audio input alongside the point cloud and conditions its response on both.

Experimental Results

The experiments report the following results for Point-Bind and Point-LLM:

- 3D Cross-modal Retrieval: Point-Bind outperforms prior models such as ULIP and PointCLIP-V2 on the reported retrieval tasks, indicating better cross-modal alignment.

- Embedding-space Arithmetic: Examples show that embeddings from different modalities (e.g., 3D point clouds and audio) can be combined into composed cross-modal queries.

- Any-to-3D Generation: Point-Bind generates high-quality 3D models from diverse modalities, showing the flexibility of the joint embedding space.

- Zero-shot 3D Classification: Point-Bind reaches state-of-the-art zero-shot accuracy, indicating strong open-world recognition.
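Composed queries like the 3D-plus-audio example can be sketched as vector addition followed by nearest-neighbor retrieval. The sketch assumes L2-normalized embeddings are summed with equal weight and renormalized; the weighting scheme, the toy gallery, and the helper names are illustrative assumptions rather than the paper's exact procedure.

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def compose(*embeddings):
    """Sum L2-normalized embeddings from different modalities, then renormalize."""
    total = [0.0] * len(embeddings[0])
    for e in embeddings:
        for i, x in enumerate(normalize(e)):
            total[i] += x
    return normalize(total)


def retrieve(query, gallery, k=1):
    """Return the top-k gallery names ranked by cosine similarity to the query."""
    q = normalize(query)

    def score(name):
        return sum(a * b for a, b in zip(q, normalize(gallery[name])))

    return sorted(gallery, key=score, reverse=True)[:k]


# Toy embeddings: axis 0 ~ "car", axis 1 ~ "rain", axis 2 ~ "indoor".
car_point_cloud = [1.0, 0.0, 0.0]
rain_audio = [0.0, 1.0, 0.0]
gallery = {
    "car_in_rain.jpg": [0.7, 0.7, 0.0],
    "car_in_garage.jpg": [1.0, 0.0, 0.2],
    "rainy_street.jpg": [0.0, 1.0, 0.0],
}
top = retrieve(compose(car_point_cloud, rain_audio), gallery)
```

With a car point cloud and a rain sound as inputs, the composed query lands closest to the gallery item that combines both concepts, which neither input would retrieve on its own.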

Conclusion and Implications

Point-Bind and Point-LLM are significant steps for multi-modal 3D understanding. Beyond improving 3D generation and retrieval, they open the way to multi-modal instruction following over 3D data, with implications for building versatile AI systems. Future work aims to adapt these models to more diverse 3D datasets and a broader range of applications.