Understanding the Role of Knowledge Neurons in Multilingual PLMs

In the paper titled "Journey to the Center of the Knowledge Neurons: Discoveries of Language-Independent Knowledge Neurons and Degenerate Knowledge Neurons," the authors investigate how multilingual pre-trained language models (PLMs) store factual knowledge. The paper introduces new methods for localizing knowledge neurons and offers insights into how these neurons are organized and what they do.

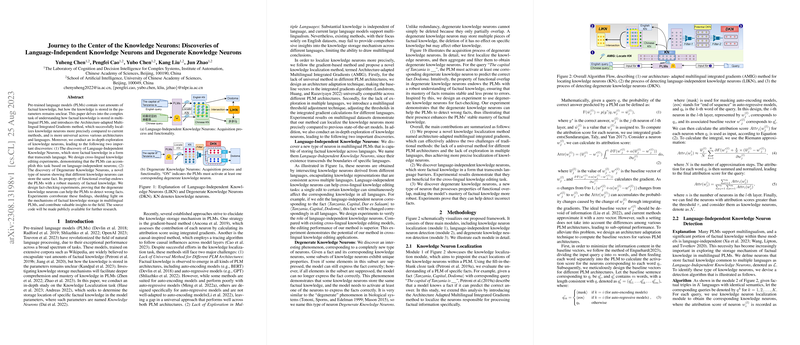

The authors propose Architecture-adapted Multilingual Integrated Gradients (AMIG), a method that addresses two central challenges in knowledge localization: existing methods do not carry over across PLM architectures, and they have not been extended to multilingual settings. AMIG builds on integrated gradients, an attribution method that scores each neuron by accumulating the gradient of the model's prediction along a path from a baseline activation to the actual activation. AMIG introduces new baseline vectors so that the method works for both auto-encoding models (e.g., BERT) and auto-regressive models (e.g., GPT). These adapted baselines are what allow AMIG to localize knowledge neurons across multiple languages, addressing a limitation of earlier methods.
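The role of the baseline can be illustrated with a toy model. The sketch below is not AMIG itself: it assumes the standard integrated-gradients attribution generalized to an arbitrary baseline, approximated with a midpoint Riemann sum. `answer_prob` is a hypothetical logistic stand-in for the model's probability of the correct answer given neuron activations `w`; the non-zero baseline `alt_base` is made up rather than the paper's baseline construction; and keeping neurons above 30% of the maximum attribution is an assumed thresholding rule.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def answer_prob(w, v):
    """Hypothetical stand-in for P(correct answer | neuron activations w):
    a logistic readout with made-up weights v, not a real PLM."""
    return sigmoid(np.dot(v, w))


def answer_prob_grad(w, v):
    """Analytic gradient of answer_prob with respect to the activations w."""
    p = answer_prob(w, v)
    return p * (1.0 - p) * v


def integrated_gradients(w, baseline, v, steps=200):
    """Attr_i = (w_i - b_i) * integral over a in [0, 1] of dP/dw_i at
    b + a * (w - b), approximated with a midpoint Riemann sum.
    By the completeness property, the attributions sum to P(w) - P(b)."""
    alphas = (np.arange(steps) + 0.5) / steps
    grad_sum = np.zeros_like(w)
    for a in alphas:
        grad_sum += answer_prob_grad(baseline + a * (w - baseline), v)
    return (w - baseline) * grad_sum / steps


def select_knowledge_neurons(attr, ratio=0.3):
    """Assumed rule: keep neurons scoring above ratio * max attribution."""
    threshold = ratio * attr.max()
    return {int(i) for i in np.flatnonzero(attr > threshold)}


w = np.array([1.0, -0.5, 2.0, 0.3])        # observed activations (toy values)
v = np.array([0.8, 0.1, -0.4, 1.2])        # toy readout weights
zero_base = np.zeros_like(w)               # classic integrated-gradients baseline
alt_base = np.array([1.0, 0.0, 1.0, 0.0])  # hypothetical non-zero baseline

attr_zero = integrated_gradients(w, zero_base, v)
attr_alt = integrated_gradients(w, alt_base, v)
print(select_knowledge_neurons(attr_zero))  # -> {0, 3}
print(select_knowledge_neurons(attr_alt))   # -> {3}
```

Changing only the baseline changes the selection: neuron 0 drops out because its activation equals its baseline value, so it receives zero attribution. This is a toy illustration of why the baseline matters when integrated gradients is adapted to a new architecture, not a reproduction of the paper's results.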

Key Discoveries

- Language-Independent Knowledge Neurons (LIKN): The paper identifies neurons that store factual knowledge regardless of the language in which a fact is expressed. They are found by intersecting the knowledge neurons localized for the same fact in different languages, which points to a shared, language-independent storage mechanism within PLMs. Cross-lingual knowledge editing experiments support this finding: editing the LIKNs of a fact changes that fact in multiple languages at once, improving the effectiveness of cross-lingual editing.

- Degenerate Knowledge Neurons (DKN): The authors introduce degenerate knowledge neurons, neurons with overlapping functions, so that several distinct neurons can store the same fact. This mirrors the degeneracy observed in biological systems. Fact-checking experiments indicate that DKNs contribute to the robust mastery of factual knowledge in PLMs, making models more stable and more accurate at recognizing erroneous facts.
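Once knowledge neurons have been localized, both discoveries reduce to simple set operations. The sketch below assumes neurons are identified by hypothetical (layer, index) pairs and uses a made-up suppression function `toy_prob` in place of a real PLM. The LIKN step is the cross-language intersection described above. The degeneracy test is one assumed way to operationalize "functional overlap" (suppressing any single neuron in a group barely changes the prediction, but suppressing the whole group does); the paper's exact DKN criterion may differ, and only pairs are searched here for brevity.

```python
from itertools import combinations


def language_independent_neurons(neurons_by_lang):
    """LIKN candidates: neurons localized for the same fact in every language."""
    sets = list(neurons_by_lang.values())
    return set.intersection(*sets) if sets else set()


def is_degenerate(group, prob_with_suppressed, drop=0.3):
    """Assumed criterion for functional overlap within `group`:
    suppressing any one neuron lowers the answer probability by less
    than `drop`, while suppressing all of them lowers it by more."""
    base = prob_with_suppressed(frozenset())
    singles_harmless = all(
        base - prob_with_suppressed(frozenset({n})) < drop for n in group
    )
    group_harmful = base - prob_with_suppressed(frozenset(group)) > drop
    return len(group) > 1 and singles_harmless and group_harmful


def find_degenerate_pairs(neurons, prob_with_suppressed, drop=0.3):
    """Check every neuron pair for degeneracy (pairs only, for brevity)."""
    return [
        set(pair)
        for pair in combinations(sorted(neurons), 2)
        if is_degenerate(set(pair), prob_with_suppressed, drop)
    ]


# Hypothetical (layer, index) knowledge neurons for one fact in two languages.
neurons_by_lang = {
    "en": {(5, 12), (7, 40), (9, 3), (11, 8)},
    "zh": {(5, 12), (7, 40), (10, 1)},
}

# Toy model: the fact is stored redundantly in (5, 12) and (7, 40);
# (9, 3) contributes on its own; (11, 8) has no effect.
REDUNDANT = {(5, 12), (7, 40)}


def toy_prob(suppressed):
    """Answer probability after zeroing the neurons in `suppressed`."""
    p = 0.9 if REDUNDANT - suppressed else 0.2
    if (9, 3) in suppressed:
        p *= 0.4
    return p


print(language_independent_neurons(neurons_by_lang))
print(find_degenerate_pairs(neurons_by_lang["en"], toy_prob))
```

In this toy setup the intersection yields the two neurons (5, 12) and (7, 40), and the same pair is the only one flagged as degenerate: removing either alone leaves the answer intact, while removing both drops its probability from 0.9 to 0.2.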

Experimental Validation and Implications

The experiments use multilingual versions of BERT and GPT and reveal notable differences between the models in where knowledge neurons are localized and how they are distributed across layers. The results indicate that LIKNs improve cross-lingual knowledge editing and DKNs improve fact-checking, offering a path toward more reliable and accurate models.

- AMIG localizes knowledge neurons more precisely, with a substantial increase in success rates on the Chinese datasets.

- The discovered LIKNs improve cross-lingual knowledge editing, outperforming existing methods while avoiding redundant per-language edits.

- DKN detection, which also works in monolingual models, highlights how robustly PLMs store factual knowledge and supports fact-checking by the model itself, without relying on external databases.

Future Prospects

This research could change how PLMs are understood and improved by revealing how their knowledge storage is organized. Future work could build on these findings to improve model interpretability and reduce biases. The methods could also inform new training schemes that target specific architectural components of PLMs, strengthening their capabilities across diverse applications.

In conclusion, by clarifying what knowledge neurons do and how they are organized, the paper makes significant theoretical contributions with clear potential for practical applications, and its findings merit further exploration by the AI research community.