Overview of Large Audio Models: A Survey and Outlook

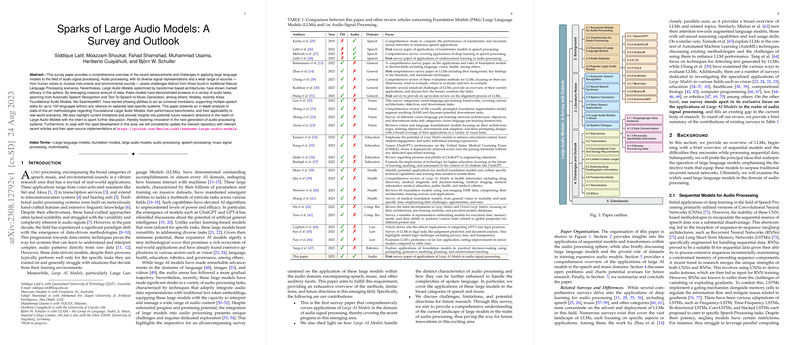

The paper "Sparks of Large Audio Models: A Survey and Outlook" surveys recent advances in applying large language models (LLMs) to audio signal processing. These models, built primarily on transformer architectures, have shown strong capabilities across a range of audio tasks, including Automatic Speech Recognition (ASR), Text-to-Speech (TTS), and music generation.

Key Contributions

- State-of-the-Art Audio Models: The paper discusses foundational audio models such as SeamlessM4T, which acts as a universal translator, performing multiple speech tasks in up to 100 languages without relying on separate task-specific systems. This represents a significant step forward in multimodal integration within AI systems.

- Performance Benchmarks and Methodologies: The authors review state-of-the-art methods and the benchmarks used to evaluate them. Drawing on these reported results, they discuss how large audio models apply to real-world scenarios and highlight their scalability and versatility.

- Identification of Challenges: The paper identifies current limitations, including handling diverse signal representations, managing variability in training data, and ensuring robustness across different audio sources. It also examines the challenges that arise when deploying these capabilities in real-world applications.

- Future Directions: The authors outline research directions for improving Large Audio Models and addressing their current limitations. These include better data handling, refined transformer architectures, and tighter integration of audio and language tasks.

Implications and Future Outlook

The integration of LLMs into audio processing opens a new frontier in AI, with significant implications for industries that rely on speech and music technologies. Because a single foundation model can handle many audio tasks, it can replace several task-specific systems, simplifying development and deployment.

Practical Implications:

- ASR and TTS: Models like SeamlessM4T could substantially improve voice assistants, transcription services, and real-time translation devices. The paper suggests that these models could have a significant impact on sectors such as telecommunications, healthcare, and virtual assistance.

- Music and Sound Generation: Models like AudioLM and MusicGen show how AI could transform music production, opening new possibilities for the digital media and entertainment industries.

Theoretical Implications:

- Multimodal Learning: As these models continue to advance, the theoretical understanding of multimodal interactions between text and audio will deepen, potentially leading to more holistic AI systems capable of seamless cross-modal understanding and generation.

- Language and Audio Interaction: A better understanding of the nuanced interactions between language and audio signals could improve generalization across AI domains and extend the capabilities of existing models.

Speculation on Future Developments:

Future developments could bring even greater integration across modalities, leading to systems that both understand and generate nuanced multimedia content. As foundation models become more sophisticated, their emergent abilities could contribute to progress toward artificial general intelligence.

Conclusion

The paper underscores the transformative potential of Large Audio Models for audio signal processing, providing a comprehensive overview of current methods, challenges, and future directions. It is a valuable resource for researchers who want to navigate and contribute to this rapidly evolving field, and it emphasizes the importance of continual updates and community engagement in sustaining progress.