Large Multilingual Models Pivot Zero-Shot Multimodal Learning across Languages

The paper "Large Multilingual Models Pivot Zero-Shot Multimodal Learning across Languages" addresses how to extend the success of multimodal learning beyond the English-centric setting that dominates current AI research. The authors propose a training paradigm called MpM, which aims to make large-scale multimodal models effective in non-English languages, with a particular focus on low-resource settings.

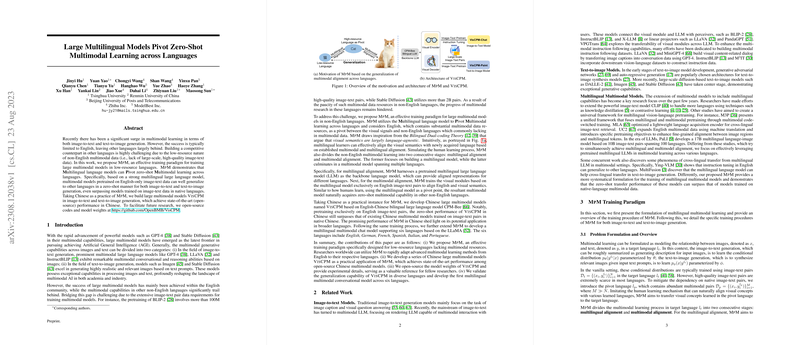

Overview of MpM

The MpM paradigm uses existing English multimodal data to enable learning in other languages, with a multilingual model acting as the pivot. The approach draws on Bilingual Dual-coding Theory and argues that visual semantics are largely language-agnostic. MpM divides learning into two stages: multilingual alignment and multimodal alignment. In the first stage, a multilingual LLM establishes the cross-lingual connections. In the second stage, the visual components of the model are trained on English image-text data, and the multilingual LLM carries the resulting visual capabilities over to other languages.
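The two stages can be illustrated with a toy sketch. This is not the authors' implementation: the embedding table, the three-number "image features", the linear projection, and every function name below are hypothetical stand-ins chosen for illustration. The table plays the role of a multilingual LLM whose cross-lingual alignment is already done (translations sit close together), and the projection plays the role of the visual module, which only ever sees English captions.

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]

# Stage 1 (assumed already done): a multilingual model places translations
# close together in one shared space. This table is a hypothetical stand-in
# and stays frozen for the rest of the sketch.
TEXT_EMBEDDING: Dict[str, Vector] = {
    "dog": [1.0, 0.0], "狗": [0.9, 0.1],
    "cat": [0.0, 1.0], "猫": [0.1, 0.9],
}

# English-only image-text pairs: (toy image features, English caption).
ENGLISH_PAIRS: List[Tuple[Vector, str]] = [
    ([2.0, 0.0, 1.0], "dog"),
    ([0.0, 2.0, 1.0], "cat"),
]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def project(weights: Matrix, image: Vector) -> Vector:
    """Map image features into the shared text space."""
    return [dot(row, image) for row in weights]


def train_visual_projection(
    pairs: List[Tuple[Vector, str]], lr: float = 0.05, epochs: int = 500
) -> Matrix:
    """Stage 2: fit the visual projection on English captions only."""
    dim_text = len(TEXT_EMBEDDING[pairs[0][1]])
    dim_image = len(pairs[0][0])
    weights = [[0.0] * dim_image for _ in range(dim_text)]
    for _ in range(epochs):
        for image, caption in pairs:
            target = TEXT_EMBEDDING[caption]  # frozen text side
            predicted = project(weights, image)
            # Plain SGD step on squared error; only the projection changes.
            for i in range(dim_text):
                error = predicted[i] - target[i]
                for j in range(dim_image):
                    weights[i][j] -= lr * error * image[j]
    return weights


def describe(weights: Matrix, image: Vector, vocabulary: Sequence[str]) -> str:
    """Pick the word whose embedding best matches the projected image."""
    predicted = project(weights, image)
    return max(vocabulary, key=lambda word: dot(predicted, TEXT_EMBEDDING[word]))


if __name__ == "__main__":
    w = train_visual_projection(ENGLISH_PAIRS)
    # Zero-shot: Chinese words never appeared in the image-text training data.
    print(describe(w, [2.0, 0.0, 1.0], ["狗", "猫"]))
```

Because the text side is frozen and shared across languages, the projection learned from English captions can be queried with a Chinese vocabulary without any Chinese image-text pairs. A real system replaces the table with an LLM and the linear map with a trained visual encoder, so this sketch only conveys the direction of transfer.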

The paper lays out a training recipe for multilingual multimodal models and reports that models trained this way can surpass those trained directly on native-language multimodal data. This result matters because it offers an efficient way to transfer visual learning across languages.

VisCPM: A Practical Implementation

In practice, the researchers develop VisCPM, a series of large-scale multilingual models built with MpM, using Chinese as the target language. The experiments cover both image-to-text and text-to-image tasks. The results indicate state-of-the-art performance in Chinese, even in comparison to models trained on Chinese-specific multimodal datasets.

- Image-to-Text: The paper details a training setup in which the VisCPM model is pre-trained on English image-text pairs and fine-tuned on bilingual instruction tuning datasets. The architecture connects a visual module to a multilingual LLM, so that visual semantics learned from English data can be expressed in the other languages the LLM already handles.

- Text-to-Image: Using a UNet-structured decoder, VisCPM is trained to generate images from text prompts. The model generates images competitively across languages without requiring language-specific fine-tuning data.
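The text-to-image direction can be sketched in the same spirit. The code below is a minimal illustration under strong assumptions, not VisCPM itself: a small linear decoder stands in for the UNet-structured decoder, a hand-written vector table stands in for the frozen multilingual text encoder, and a three-number list stands in for an image. All names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]

# Hypothetical frozen multilingual text encoder: translations receive
# nearby (not identical) vectors.
WORD_VECTORS: Dict[str, Vector] = {
    "sun": [1.0, 0.0], "太阳": [0.95, 0.05],
    "sea": [0.0, 1.0], "海": [0.05, 0.95],
}

# English-only training data: prompt tokens -> tiny 3-"pixel" image.
ENGLISH_DATA: List[Tuple[List[str], Vector]] = [
    (["sun"], [1.0, 1.0, 0.0]),
    (["sea"], [0.0, 0.0, 1.0]),
]


def encode_prompt(tokens: Sequence[str]) -> Vector:
    """Mean-pool token vectors into one conditioning vector."""
    dim = len(WORD_VECTORS[tokens[0]])
    return [sum(WORD_VECTORS[t][k] for t in tokens) / len(tokens) for k in range(dim)]


def train_decoder(
    data: List[Tuple[List[str], Vector]], lr: float = 0.1, epochs: int = 300
) -> List[Vector]:
    """Fit a linear decoder (a stand-in for the UNet) on English prompts only."""
    n_pixels = len(data[0][1])
    dim = len(encode_prompt(data[0][0]))
    decoder = [[0.0] * dim for _ in range(n_pixels)]
    for _ in range(epochs):
        for tokens, image in data:
            cond = encode_prompt(tokens)  # text encoder is never updated
            for p in range(n_pixels):
                error = sum(decoder[p][k] * cond[k] for k in range(dim)) - image[p]
                for k in range(dim):
                    decoder[p][k] -= lr * error * cond[k]
    return decoder


def generate(decoder: List[Vector], tokens: Sequence[str]) -> Vector:
    """Produce an image from a prompt in any language the encoder covers."""
    cond = encode_prompt(tokens)
    return [sum(row[k] * cond[k] for k in range(len(cond))) for row in decoder]


if __name__ == "__main__":
    d = train_decoder(ENGLISH_DATA)
    print(generate(d, ["sun"]))   # English prompt seen in training
    print(generate(d, ["太阳"]))  # Chinese prompt, never seen in training
```

The decoder is conditioned only on the encoder output, so a Chinese prompt whose vector lies near its English translation yields a nearly identical image. How close real outputs are depends on how well the multilingual model aligns the two languages, which this toy table simply assumes.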

Strong Numerical Results

The empirical evaluations of VisCPM support its effectiveness. The models are competitive with language-specific models on several benchmarks, including the LLaVA Test Set and UniMM-Bench. VisCPM-Chat, for instance, outperforms several existing multilingual chat models across different tasks in both English and Chinese.

Theoretical Implications

The authors suggest that the language-agnostic nature of visual semantics plays a significant role in model generalization. This finding could reshape multilingual multimodal research, which has traditionally relied heavily on language-specific datasets and models.

Future Directions

MpM has significant potential to extend to additional languages. The researchers demonstrate that swapping in different multilingual LLMs broadens language support, with German, French, Spanish, Italian, and Portuguese among the initial targets beyond Chinese. This flexibility opens the way to multimodal models that cover many languages.

Conclusion

In summary, this paper presents a methodologically sound and practically viable approach to multilingual multimodal learning. By leveraging multilingual LLMs as a pivot point, MpM provides a compelling framework to extend AI's reach beyond English. This research holds promise for accelerating the adoption and adaptation of AI technologies across diverse linguistic landscapes, fostering a more inclusive AI ecosystem.