Introduction

Large language models (LLMs) have become a prominent tool in AGI research, with capabilities that extend well beyond text generation. Trained on large volumes of data, these models demonstrate a solid grasp of commonsense knowledge, which allows them to interact and make decisions in real time. Yet much of the existing research uses LLMs to perform tasks individually rather than in cooperation with others. ProAgent, a newly introduced framework, aims to bridge this gap: it uses LLMs to build an agent with proactive and cooperative capacities that interacts constructively with partner agents.

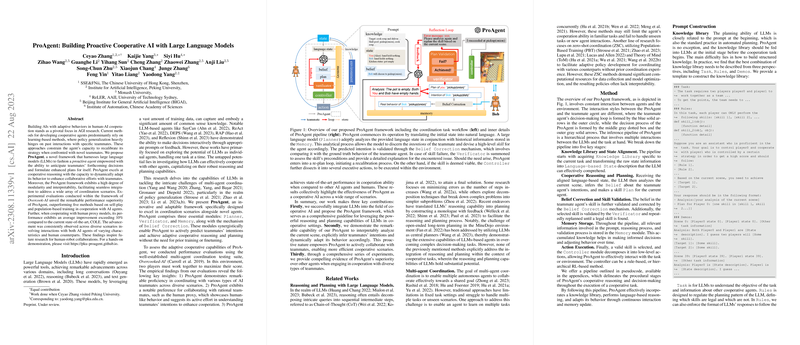

Cooperative Framework

ProAgent departs from traditional approaches that rely heavily on historical data for policy generalization. Instead, it proactively anticipates the actions of teammate agents and uses those predictions to form coherent, actionable plans. A cornerstone of ProAgent is its cooperative reasoning, which lets it adjust dynamically to the decisions of other agents. The framework is modular and interpretable, which eases integration into a range of coordination scenarios. Experiments in the Overcooked-AI environment show that ProAgent outperforms existing methods, with an average improvement of ten percent when cooperating with counterparts that mirror human behavior.

Design and Modules

ProAgent consists of four central modules: the Planner, the Verificator, Memory, and a Belief Correction mechanism. Together, these modules give ProAgent adaptable cooperative reasoning and planning. The Planner analyzes the current situation and predicts teammate intentions, which in turn inform the agent's skill planning. When a planned skill is not viable, the Verificator steps in, explaining why the skill fails and prompting a re-plan. The Memory module records the trajectory of actions and analyses, supporting the continuous refinement of ProAgent's behavior. Belief Correction strengthens this refinement by aligning the agent's beliefs about its teammates with their actual behavior.
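The interplay between these modules can be illustrated with a minimal Python sketch. This is not ProAgent's actual implementation: the class and function names (`Agent`, `Memory`, `predict_teammate`, `plan_skill`, `verify`), the skill names, and the string-based state are all hypothetical, and the LLM calls are replaced by simple rule-based stand-ins so that the loop can run on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Memory:
    """Stores the trajectory of decisions and feedback (hypothetical layout)."""
    records: List[dict] = field(default_factory=list)

    def add(self, **record) -> None:
        self.records.append(record)


@dataclass
class Agent:
    # Hypothetical stand-ins for LLM calls; here they are plain callables.
    predict_teammate: Callable[[str, Memory], str]
    plan_skill: Callable[[str, str, List[str]], str]
    verify: Callable[[str, str], Optional[str]]  # failure reason, or None if valid
    memory: Memory = field(default_factory=Memory)
    max_replans: int = 3

    def step(self, state: str) -> Tuple[str, str]:
        """Plan, verify, and re-plan on failure; returns (belief, skill)."""
        belief = self.predict_teammate(state, self.memory)
        failures: List[str] = []
        for _ in range(self.max_replans):
            skill = self.plan_skill(state, belief, failures)
            reason = self.verify(state, skill)
            self.memory.add(state=state, belief=belief, skill=skill,
                            feedback=reason or "ok")
            if reason is None:
                return belief, skill
            # Feed the failure explanation back into the next planning attempt.
            failures.append(f"{skill}: {reason}")
        return belief, "wait"  # assumed fallback when every re-plan fails

    def correct_belief(self, belief: str, observed: str) -> str:
        """Align the predicted teammate intention with observed behavior."""
        self.memory.add(belief=belief, observed=observed, corrected=observed)
        return observed


# Toy rule-based stand-ins (assumptions, used only to make the sketch runnable).
def predict_teammate(state: str, memory: Memory) -> str:
    for rec in reversed(memory.records):
        if "corrected" in rec:  # reuse the most recent corrected belief
            return rec["corrected"]
    return "fetch_onion"


def plan_skill(state: str, belief: str, failures: List[str]) -> str:
    tried = {f.split(":")[0] for f in failures}
    for skill in ["fetch_onion", "fetch_dish", "wait"]:
        if skill != belief and skill not in tried:  # avoid duplicating the teammate
            return skill
    return "wait"


def verify(state: str, skill: str) -> Optional[str]:
    if skill == "fetch_dish" and "no_dish" in state:
        return "no dish available"
    return None


if __name__ == "__main__":
    agent = Agent(predict_teammate, plan_skill, verify)
    print(agent.step("pot_empty,no_dish"))
    agent.correct_belief("fetch_onion", "fetch_dish")
    print(agent.step("pot_empty"))
```

In this sketch, the failure reason returned by the verifier is passed back into the next planning call, and a corrected belief stored in memory changes the next prediction. In a real system each of the three callables would presumably wrap an LLM prompt rather than hand-written rules.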

Experiments and Contributions

ProAgent was evaluated in Overcooked-AI, a multi-agent coordination suite, where it coordinated effectively with various types of AI teammates and worked particularly well with teammate models that emulate human behavior. These experiments underline three contributions to cooperative AI: ProAgent integrates LLMs into cooperative settings, infers teammate intentions transparently, and works alongside a wide spectrum of teammates.

These findings highlight ProAgent's viability as a tool for navigating complex cooperative scenarios. Its transparent, modular design, combined with its robust performance in experimental evaluations, marks a noteworthy step forward in the development of cooperative AI agents that can understand and anticipate the needs and actions of their partners.