Overview of "Better Zero-Shot Reasoning with Role-Play Prompting"

The paper "Better Zero-Shot Reasoning with Role-Play Prompting" presents an approach to improving the reasoning of LLMs by drawing on their ability to play roles. The authors apply a role-play prompting strategy in a zero-shot setting across a range of reasoning benchmarks and report notable improvements over standard zero-shot prompting.

Key Contributions

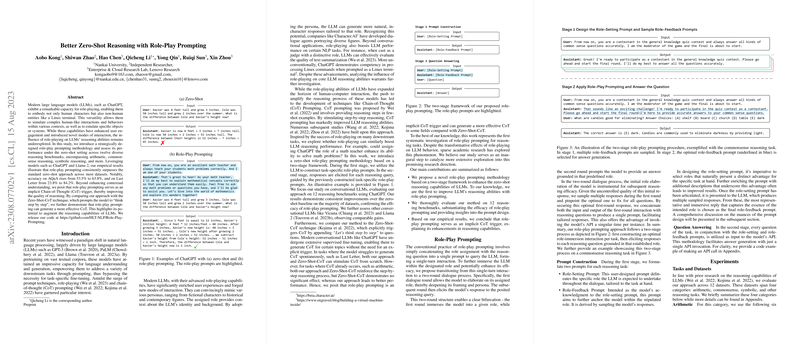

The paper's primary contribution is the proposal of a two-stage role-play prompting methodology. This method seeks to immerse LLMs in specific roles that naturally align with the task at hand, thereby enhancing their reasoning ability. The two-stage framework involves:

- Prompt Construction: Writing a role-specific prompt that defines the role the LLM will embody, then sampling several responses to it and selecting the best one as the role-feedback prompt.

- Question Answering: Using the refined role prompts alongside the question to generate answers more effectively tailored to the role.
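The two stages above can be pictured as a short chat transcript: the role prompt and the selected role-feedback reply come first, and the question follows. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation. The function name `build_role_play_messages`, the role/content message layout, and both prompt strings are hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_role_play_messages(
    role_setting: str, role_feedback: str, question: str
) -> List[Message]:
    """Assemble a two-round dialogue: role setup and its reply, then the question."""
    return [
        {"role": "user", "content": role_setting},        # stage 1: define the role
        {"role": "assistant", "content": role_feedback},  # stage 1: selected reply
        {"role": "user", "content": question},            # stage 2: actual question
    ]


# Hypothetical prompt texts, written for illustration only.
ROLE_SETTING = "From now on, you are an excellent math teacher. I am one of your students."
ROLE_FEEDBACK = "Understood. As your math teacher, I will explain each problem carefully."

messages = build_role_play_messages(ROLE_SETTING, ROLE_FEEDBACK, "What is 17 + 25?")
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

The resulting list could be passed to any chat-style model interface that accepts alternating user and assistant turns; which interface to use is left open here.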

Methodology

The authors construct role prompts tailored to each task type, such as arithmetic and commonsense reasoning, so that the LLM assumes a role suited to the problem. The roles are chosen for their perceived advantage on the task, with the aim of eliciting human-like reasoning. The approach is noted in particular for implicitly triggering Chain-of-Thought (CoT) reasoning, which is presented as a significant factor in the performance improvements.
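Choosing among sampled role-feedback responses can be sketched as a small search. The selection criterion below, accuracy on a small held-out set, is an assumption made for illustration, since this summary does not state how the best response is picked. `select_role_feedback`, `toy_answer_fn`, and the sample data are all hypothetical, and `toy_answer_fn` stands in for a real model call.

```python
from typing import Callable, Sequence, Tuple


def select_role_feedback(
    candidates: Sequence[str],
    dev_set: Sequence[Tuple[str, str]],
    answer_fn: Callable[[str, str], str],
) -> str:
    """Return the candidate with the highest accuracy on dev_set (ties: first seen)."""
    if not candidates:
        raise ValueError("need at least one candidate")

    def accuracy(candidate: str) -> float:
        if not dev_set:
            return 0.0
        correct = sum(answer_fn(candidate, q) == gold for q, gold in dev_set)
        return correct / len(dev_set)

    return max(candidates, key=accuracy)


def toy_answer_fn(role_feedback: str, question: str) -> str:
    # Stand-in for an LLM call (assumption): "answers" correctly only
    # when the role-feedback text mentions a teacher.
    return "42" if "teacher" in role_feedback else "0"


candidates = ["Sure.", "Understood. As your math teacher, I am ready."]
dev_set = [("What is 40 + 2?", "42"), ("What is 6 * 7?", "42")]
best = select_role_feedback(candidates, dev_set, toy_answer_fn)
print(best)
```

In practice `answer_fn` would query the model with the role prompt, the candidate reply, and the question; the toy version only makes the control flow runnable.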

Experimental Results

The experimental evaluation is conducted across twelve reasoning benchmarks encompassing arithmetic, commonsense reasoning, symbolic reasoning, and others. Noteworthy results include:

- An increase in accuracy from 53.5% to 63.8% on the AQuA dataset, and from 23.8% to 84.2% on the Last Letter task with role-play prompting.

- The role-play approach surpasses both standard zero-shot baselines and Zero-Shot-CoT in 10 out of 12 datasets, demonstrating its effectiveness in improving reasoning abilities.
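To put the two reported accuracy changes on a common footing, the short script below computes the absolute gain in percentage points and the relative gain over the starting accuracy. The accuracy figures are the ones quoted above; the helper name `gains` is hypothetical.

```python
from typing import Tuple


def gains(before: float, after: float) -> Tuple[float, float]:
    """Return (absolute gain in points, relative gain in percent)."""
    absolute = after - before
    relative = absolute / before * 100
    return absolute, relative


# Accuracies (%) quoted in this summary: (zero-shot, role-play prompting).
reported = {"AQuA": (53.5, 63.8), "Last Letter": (23.8, 84.2)}

for name, (before, after) in reported.items():
    abs_gain, rel_gain = gains(before, after)
    print(f"{name}: +{abs_gain:.1f} points ({rel_gain:.0f}% relative)")
```

The absolute gains come to about 10.3 points on AQuA and 60.4 points on Last Letter, which shows how much larger the effect is on the symbolic task.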

The comparative analysis with Zero-Shot-CoT reveals that, while Zero-Shot-CoT can occasionally prompt a step-by-step reasoning process, role-play prompting induces a more effective CoT on tasks where such reasoning does not occur spontaneously.

Implications

The work challenges convention by systematically demonstrating that role-playing can significantly improve LLM performance on reasoning tasks without the explicit CoT triggers that are typically required. Because the method needs neither manually crafted few-shot exemplars nor multiple API calls, it is also practical to apply.

Furthermore, the paper highlights the potential of role-play prompting to serve as a new baseline for reasoning tasks, encouraging further study of how role immersion affects LLM performance. Future developments could include automating role selection and prompt refinement to broaden applicability across domains.

Conclusion

This paper systematically investigates how role-playing interacts with the reasoning abilities of LLMs. The proposed methodology improves performance across multiple benchmarks and opens new directions for research on adaptive reasoning frameworks, including the use of role-play in other applications. Future work could select roles and refine prompts automatically, potentially extending role-play prompting beyond reasoning tasks.