Overview of "OctoPack: Instruction Tuning Code LLMs"

The paper "OctoPack: Instruction Tuning Code LLMs" presents a novel approach to improving the performance of LLMs in the domain of code generation and interpretation. The authors focus on the technique of instruction tuning, leveraging code commits as a unique source of training data.

Key Contributions

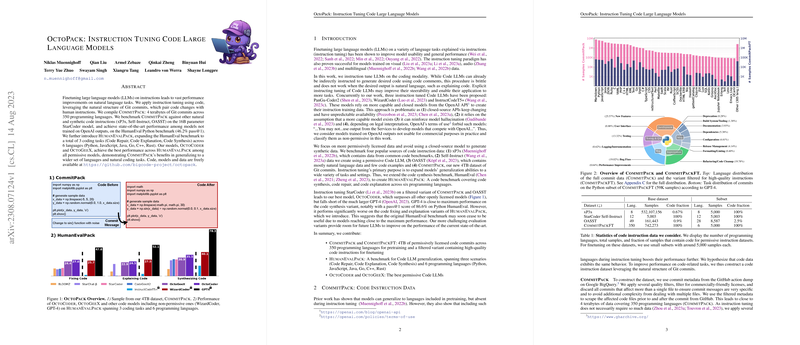

The central contribution of this work is the creation of "CommitPack," a comprehensive dataset comprising 4 terabytes of Git commits across 350 programming languages. This dataset is curated to enhance the capability of LLMs in understanding and generating code by using the intrinsic pairing of code changes with human-authored commit messages. By instruction tuning the 16B parameter StarCoder model with this dataset, the paper demonstrates significant performance improvements across various evaluation benchmarks.

Evaluation and Benchmarks

The authors introduce "HumanEvalPack," an expanded version of the HumanEval benchmark, to test the generalization abilities of instruction-tuned models across three coding tasks: Code Synthesis, Code Repair, and Code Explanation. HumanEvalPack encompasses six programming languages, providing a robust framework for assessing the versatility of code models.

OctoCoder and OctoGeeX, the models trained on CommitPack and evaluated against HumanEvalPack, outperform other open, non-OpenAI models, achieving a pass@$1$ score of 46.2% on the HumanEval Python benchmark. This result positions these models as the top performers in code generation among models trained without access to OpenAI's outputs.

Detailed Analysis

The dataset preparation involves meticulous filtering to ensure high quality. The paper describes CommitPackFT, a filtered version of the original dataset, containing only high-quality commitment instructions, which is pivotal in improving model performance in code-related tasks.

Instruction tuning with code data benefits from explicit pairing with natural language, enhancing capabilities in both code generation and natural responses. The paper shows that mixing code and natural language data during instruction tuning is crucial for achieving balanced performance across different programming tasks.

Theoretical and Practical Implications

Technically, the paper extends the paradigm of instruction tuning to the domain of code generation, showcasing that structured data like Git commits can provide substantial learning signals for LLMs. Practically, these findings suggest potential applications in software development, where automated code synthesis, repair, and explanation can be deployed to improve productivity and accuracy.

Future Directions

The work opens avenues for further exploration in instruction tuning, particularly in exploring alternative data sources and refining data filtering techniques. Future research might involve integrating multiple coding languages and exploring more complex code tasks, considering the rapidly evolving landscape of LLM capabilities.

While OctoCoder and OctoGeeX demonstrate promising results, further research is needed to surpass the performance of closed-source models like GPT-4. Enhancing base model capabilities through larger-scale pretraining and integrating multi-language support remain challenging yet promising directions.

Conclusion

Overall, the paper presents a significant advancement in leveraging code commits for instruction tuning LLMs. By introducing robust datasets and benchmarks, the authors provide a valuable resource for the AI community and set a precedent for future work in the intersection of code and natural language processing.