An Expert Survey on Multimodal LLMs

The paper "A Survey on Multimodal Large Language Models" by Shukang Yin et al. offers a comprehensive review of recent advances and methods in the development of Multimodal Large Language Models (MLLMs). These models use an LLM as the core reasoning component to handle multimodal tasks that involve both vision and language. The survey covers the emergent capabilities, underlying methods, key challenges, and promising directions of MLLM research.

Overview of MLLM Capabilities

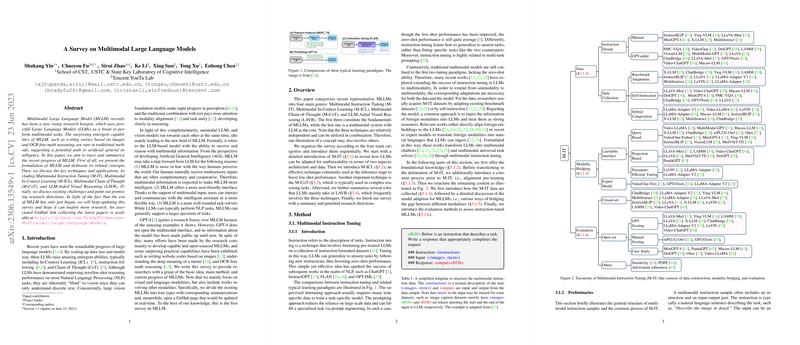

MLLMs depart from traditional unimodal models by accepting multimodal inputs, which lets them tackle more complex tasks. The authors organize MLLM research around four key techniques: Multimodal Instruction Tuning (M-IT), Multimodal In-Context Learning (M-ICL), Multimodal Chain of Thought (M-CoT), and LLM-Aided Visual Reasoning (LAVR).

Multimodal Instruction Tuning (M-IT)

M-IT extends instruction tuning, first validated on text-only LLMs, to the multimodal setting. Instruction tuning finetunes a pretrained model on a collection of tasks phrased as natural-language instructions so that it learns to follow unseen instructions zero-shot; unlike ICL or CoT prompting, which adapt a model at inference time, it updates the model's weights. Bringing this recipe to multimodal inputs requires both data and architectural adaptations:

- Data Collection: The survey describes three ways to build multimodal instruction datasets: adapting existing benchmarks into instruction format, self-instruction (prompting an LLM to generate instruction data from a few hand-annotated samples), and hybrid composition, which mixes multimodal and language-only instruction data.

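- Modality Bridging: Visual inputs are connected to the LLM either through a learnable interface or through expert models. Learnable interfaces are typically projection-based (visual features are mapped directly into the LLM's token-embedding space) or query-based (a small set of learnable query tokens extracts visual information). Expert models instead convert the image into text, such as a caption, that the LLM reads.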

- Evaluation: MLLMs are evaluated in both closed-set and open-set settings. Closed-set evaluation measures performance on task-specific benchmarks whose answers come from a predefined set, while open-set evaluation scores free-form responses through manual rating or GPT-based scoring to gauge how well the model generalizes to new or unseen tasks.
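The two learnable bridging styles differ mainly in how many visual tokens reach the LLM. The toy sketch below illustrates this in pure Python under stated assumptions: a vision encoder is assumed to have already produced patch feature vectors, `project_features` applies one linear map per patch (projection-based), and `query_pool` lets a fixed number of query vectors attend over the patches (a heavily simplified stand-in for query-based modules such as BLIP-2's Q-Former). All function names, dimensions, and weights are hypothetical, and nothing is trained.

```python
import math
import random

def matvec(weight, vec):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weight]

def project_features(patches, weight):
    """Projection-based bridging: one linear map per patch, so the number of
    visual tokens handed to the LLM equals the number of patches."""
    return [matvec(weight, p) for p in patches]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def query_pool(patches, queries):
    """Query-based bridging (simplified): each query attends over all patches,
    so the output length equals len(queries), whatever the patch count."""
    dim = len(patches[0])
    pooled = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, p)) / math.sqrt(dim) for p in patches]
        attn = softmax(scores)
        pooled.append([sum(w * p[i] for w, p in zip(attn, patches)) for i in range(dim)])
    return pooled

rng = random.Random(0)
VIS_DIM, LLM_DIM, N_PATCHES, N_QUERIES = 4, 6, 16, 3  # toy sizes (assumed)

patches = [[rng.gauss(0, 1) for _ in range(VIS_DIM)] for _ in range(N_PATCHES)]
proj = [[rng.gauss(0, 0.1) for _ in range(VIS_DIM)] for _ in range(LLM_DIM)]  # LLM_DIM x VIS_DIM
queries = [[rng.gauss(0, 1) for _ in range(VIS_DIM)] for _ in range(N_QUERIES)]  # learnable in a real model

visual_tokens = project_features(patches, proj)                       # one token per patch
pooled_tokens = project_features(query_pool(patches, queries), proj)  # one token per query
print(len(visual_tokens), len(visual_tokens[0]))  # 16 6
print(len(pooled_tokens), len(pooled_tokens[0]))  # 3 6
```

Projection keeps one token per patch (16 here), while query pooling fixes the token count (3 here), which shortens the LLM's input at the cost of compressing visual detail.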

Multimodal In-Context Learning (M-ICL)

Building on the emergent ICL abilities of LLMs, M-ICL lets multimodal models generalize from a few demonstrations placed in the context alongside a new query, without updating any weights. This is especially useful for visual reasoning tasks and for teaching models to use external tools, where the demonstrations show step-by-step reasoning or action plans to imitate.
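A few-shot M-ICL prompt is essentially an interleaved sequence of images and text: a handful of solved demonstrations followed by the new query. The sketch below assembles such a sequence. The `<image:...>` placeholder syntax, the `Demo` record, and the file names are hypothetical, since each MLLM defines its own way of marking where image tokens are inserted.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Demo:
    image: str     # path or ID of the demonstration image (hypothetical)
    question: str
    answer: str

def build_micl_prompt(instruction: str, demos: List[Demo],
                      query_image: str, query_question: str) -> str:
    """Interleave image placeholders and text: instruction, k solved examples, then the query."""
    parts = [instruction]
    for d in demos:
        parts.append(f"<image:{d.image}>\nQ: {d.question}\nA: {d.answer}")
    # The final answer slot is left open for the model to complete.
    parts.append(f"<image:{query_image}>\nQ: {query_question}\nA:")
    return "\n\n".join(parts)

demos = [
    Demo("cat.jpg", "How many animals are in the image?", "1"),
    Demo("dogs.jpg", "How many animals are in the image?", "3"),
]
prompt = build_micl_prompt(
    "Answer each question about the image with a number.",
    demos, "birds.jpg", "How many animals are in the image?",
)
print(prompt)
```

Changing the task only means swapping the demonstrations; the model itself is untouched, which is what distinguishes M-ICL from M-IT.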

Multimodal Chain of Thought (M-CoT)

M-CoT adapts the CoT mechanism to the multimodal context, prompting or training the model to generate intermediate reasoning steps before its final answer. This improves performance on complex tasks and makes the model's reasoning more interpretable. Key aspects of M-CoT include:

- Modality Bridging: As in M-IT, modality bridging in M-CoT either integrates visual features directly or relies on expert models that generate descriptive text from visual inputs.

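- Learning Paradigms: M-CoT is acquired either through finetuning or in a training-free zero-shot or few-shot setting. Finetuning relies on datasets curated specifically for CoT learning, while zero-shot setups elicit reasoning with a trigger phrase such as "Let's think step by step" and few-shot setups supply hand-crafted in-context examples.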

- Chain Configuration: Chains can be either pre-defined or adaptively determined during reasoning. In the adaptive case the model decides for itself when to stop, which gives it more flexibility on complex problems.
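The contrast between pre-defined and adaptive chains can be sketched as a loop. In this sketch a pre-defined chain is modeled as a fixed number of steps (one simple form of pre-defined configuration), while in adaptive mode the model signals completion, assumed here to be a step starting with "Answer:". `generate_step` is a hypothetical stand-in for an MLLM call, and the scripted model ignores its inputs and only replays canned steps for illustration.

```python
from typing import Callable, List

StepFn = Callable[[str, List[str]], str]

def run_chain(question: str, generate_step: StepFn, adaptive: bool,
              fixed_len: int = 3, max_steps: int = 8) -> List[str]:
    """Collect intermediate reasoning steps from a (hypothetical) step generator."""
    steps: List[str] = []
    limit = max_steps if adaptive else fixed_len
    for _ in range(limit):
        step = generate_step(question, steps)
        steps.append(step)
        # Adaptive configuration: the model itself decides when the chain is done.
        if adaptive and step.startswith("Answer:"):
            break
    return steps

# Scripted stand-in for an MLLM that emits canned steps in order.
SCRIPT = [
    "The image shows two apples and one banana.",
    "Apples and bananas are both fruit.",
    "Answer: 3",
]

def scripted_model(question: str, steps: List[str]) -> str:
    return SCRIPT[min(len(steps), len(SCRIPT) - 1)]

q = "How many pieces of fruit are in the image?"
adaptive_steps = run_chain(q, scripted_model, adaptive=True)
fixed_steps = run_chain(q, scripted_model, adaptive=False, fixed_len=2)
print(adaptive_steps[-1])  # Answer: 3
print(len(fixed_steps))    # 2
```

With `fixed_len=2` the fixed chain halts before an answer appears, while the adaptive run stops as soon as the model emits one; the `max_steps` cap keeps an adaptive chain from running indefinitely.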

LLM-Aided Visual Reasoning (LAVR)

In LAVR, LLMs enhance visual reasoning systems by acting as controllers, decision makers, or semantics refiners. The survey characterizes these systems by their training paradigm, the functions the LLM performs, and how they are evaluated:

- Training Paradigms: Most LAVR systems operate in a training-free manner, leveraging pre-trained models for zero/few-shot learning. A notable exception is GPT4Tools, which uses finetuning with an M-IT dataset.

- Functions:

  - Controller: LLMs decompose complex tasks into simpler sub-tasks, assigning them to appropriate tools or modules.

  - Decision Maker: In multi-round setups, LLMs continuously evaluate and refine responses based on iterative feedback.

  - Semantics Refiner: LLMs use their linguistic knowledge to generate or refine textual information.

- Evaluation: Performance is assessed using benchmark metrics or manual evaluation, depending on the task's nature and the model's capabilities.
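The controller role can be sketched as plan-then-dispatch: the LLM turns a request into an ordered list of sub-tasks, each naming a tool, and each tool's output can feed the next step. In this sketch the planner and both tools (`plan`, `detect_objects`, `count`, registered in `TOOLS`) are hypothetical stubs; in a real system the plan would come from prompting an LLM with the request and tool descriptions, and the tools would wrap vision models.

```python
from typing import Any, Callable, Dict, List, Tuple

PREV = "$prev"  # marks an argument filled with the previous step's output

# Hypothetical tools; a real system would wrap vision models here.
def detect_objects(image: str) -> List[str]:
    fake_detections = {"street.jpg": ["car", "person", "car", "bicycle"]}
    return fake_detections.get(image, [])

def count(items: List[str], label: str) -> int:
    return sum(1 for item in items if item == label)

TOOLS: Dict[str, Callable[..., Any]] = {"detect_objects": detect_objects, "count": count}

Plan = List[Tuple[str, Dict[str, Any]]]

def plan(request: str, image: str) -> Plan:
    """Stand-in for the LLM controller. A real controller would prompt an LLM with
    the request and the tool descriptions; here one plan is hard-coded."""
    canned: Dict[str, Plan] = {
        "How many cars are in the image?": [
            ("detect_objects", {"image": image}),
            ("count", {"items": PREV, "label": "car"}),
        ],
    }
    return canned[request]

def execute(steps: Plan) -> Any:
    """Run sub-tasks in order, piping each result into the next step where requested."""
    result: Any = None
    for tool_name, args in steps:
        resolved = {k: (result if v == PREV else v) for k, v in args.items()}
        result = TOOLS[tool_name](**resolved)
    return result

answer = execute(plan("How many cars are in the image?", "street.jpg"))
print(answer)  # 2
```

Keeping the plan as plain data separates the LLM's decision (which tools, in what order) from execution, which also makes each sub-task's output easy to inspect.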

Challenges and Future Directions

The paper emphasizes that MLLM research is nascent and identifies several key challenges:

- The perception capabilities of MLLMs are still limited by information bottlenecks in modality integration.

- The robustness of MLLM reasoning chains needs further investigation, especially for complex queries.

- Enhancing instruction following and alleviating object hallucination are crucial for practical reliability.

- New parameter-efficient training techniques are needed to realize the full potential of MLLMs.

Conclusion

The authors provide a comprehensive review of MLLM advances, methods, challenges, and research directions. By organizing the field around M-IT, M-ICL, M-CoT, and LAVR, the survey gives researchers working with multimodal data a structured view of current progress and open opportunities in this fast-growing area, and it points toward systems capable of more nuanced and complex multimodal reasoning.