Insights on RETA-LLM: A Retrieval-Augmented LLM Toolkit

The paper "RETA-LLM: A Retrieval-Augmented LLM Toolkit" presents a methodology and toolkit for improving the factual reliability of LLMs by integrating them with Information Retrieval (IR) systems. This integration targets a core limitation of LLMs: hallucination, the generation of plausible-sounding but incorrect information. The paper stresses that complementing LLMs with external knowledge bases improves their ability to produce accurate, domain-specific responses.

Core Contributions and Methodology

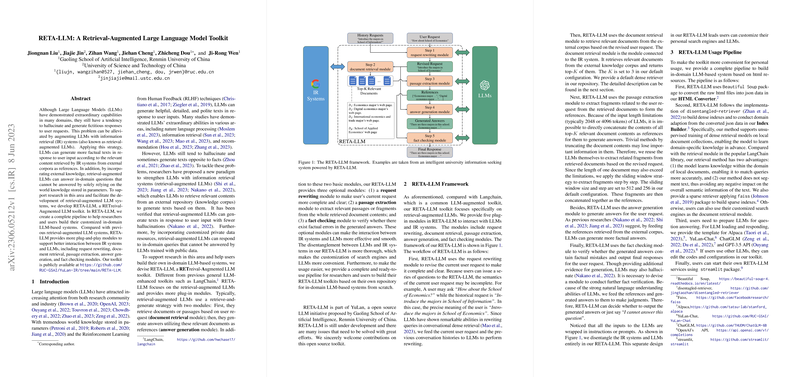

The paper introduces RETA-LLM, a toolkit for developing retrieval-augmented LLM systems. It is structured as a modular pipeline that supports customized in-domain LLM applications. According to the authors, it goes beyond existing frameworks by providing a more complete set of plug-and-play modules.

The toolkit’s modular architecture offers:

- Request Rewriting: Enhances user queries to make them contextually complete.

- Document Retrieval: Uses an IR system to fetch relevant documents from an external corpus.

- Passage Extraction: Identifies and isolates the pertinent fragments of the retrieved documents so that the input stays within the LLM's length limit.

- Answer Generation: Uses the selected passages to generate a factual response.

- Fact Checking: Uses LLMs to verify the generated content against the referenced documents.

RETA-LLM keeps the LLM and the IR system clearly separated, so users can tailor the search engine and the LLM to their domain-specific needs. This flexibility contrasts with more tightly coupled designs and makes it easier to build bespoke systems.
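The five modules and the LLM/IR separation described above can be sketched as a small pipeline. This is an illustrative sketch only, not RETA-LLM's actual API: the `Pipeline` class, its method names, the prompt strings, `keyword_retriever`, and `stub_llm` are all hypothetical, and the LLM and retriever are modeled as plain callables so that either can be swapped independently.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical interfaces: an LLM maps a prompt to text, and a retriever
# maps (query, k) to the k best-matching documents.
LLM = Callable[[str], str]
Retriever = Callable[[str, int], List[str]]

FALLBACK = "I cannot answer this reliably from the available documents."


@dataclass
class Pipeline:
    llm: LLM
    retriever: Retriever
    top_k: int = 3

    def rewrite_request(self, history: List[str], request: str) -> str:
        # Request rewriting: fold the conversation history into a standalone query.
        if not history:
            return request
        return self.llm("REWRITE\n" + "\n".join(history) + "\n" + request)

    def extract_passages(self, query: str, docs: List[str]) -> List[str]:
        # Passage extraction: keep only the fragment of each document relevant to the query.
        return [self.llm(f"EXTRACT for: {query}\n{doc}") for doc in docs]

    def generate_answer(self, query: str, passages: List[str]) -> str:
        # Answer generation: condition the LLM on the extracted passages.
        return self.llm(f"ANSWER the request: {query}\n" + "\n".join(passages))

    def check_facts(self, answer: str, passages: List[str]) -> bool:
        # Fact checking: ask the LLM whether the passages support the answer.
        verdict = self.llm("FACT-CHECK\n" + answer + "\n" + "\n".join(passages))
        return verdict.strip().lower().startswith("yes")

    def run(self, history: List[str], request: str) -> str:
        query = self.rewrite_request(history, request)
        docs = self.retriever(query, self.top_k)  # document retrieval (IR side)
        passages = self.extract_passages(query, docs)
        answer = self.generate_answer(query, passages)
        return answer if self.check_facts(answer, passages) else FALLBACK


def keyword_retriever(corpus: List[str]) -> Retriever:
    # Toy IR system: rank documents by the number of words shared with the query.
    def retrieve(query: str, k: int) -> List[str]:
        terms = set(query.lower().split())
        ranked = sorted(corpus, key=lambda d: -len(terms & set(d.lower().split())))
        return ranked[:k]
    return retrieve


def stub_llm(prompt: str) -> str:
    # Stand-in for a real model call: approves every fact check and
    # otherwise echoes the last line of the prompt.
    if prompt.startswith("FACT-CHECK"):
        return "yes"
    return prompt.splitlines()[-1]


corpus = ["The library opens at 9 am.", "Tuition is due in September."]
pipeline = Pipeline(llm=stub_llm, retriever=keyword_retriever(corpus), top_k=1)
print(pipeline.run([], "when is tuition due"))
```

Because `Pipeline` depends only on the two callable types, replacing `keyword_retriever` with a dense retriever or `stub_llm` with a hosted model would not require touching the pipeline logic, which is the kind of separation the toolkit is described as providing.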

Implications and Future Prospects

The implications of this research are both practical and theoretical. Practically, RETA-LLM provides a structured way to mitigate the factual unreliability of LLMs, making them more usable in specialized domains such as technical support, legal advisory, and education. Theoretically, the toolkit offers a framework for studying how IR and LLMs interact, in particular how retrieval and synthesis can be combined within a single AI system.

Directions for further research and development include integrating more sophisticated retrieval strategies, such as active retrieval augmented generation, to make these systems more interactive and adaptable. Continued work on modularity and configurability will also help keep the toolkit relevant as IR and LLM technologies advance.

Conclusion

RETA-LLM is a significant contribution to ongoing work on retrieval-augmented generation frameworks. By offering modular, customizable components for domain-specific applications, it supports more accurate and reliable LLM outputs and helps researchers and practitioners mitigate the limitations of LLMs. The paper presents RETA-LLM both as a research artifact and as a practical tool for integrating LLMs with IR systems.