Activation-aware Weight Quantization: Enhancing LLM Compression and Deployment Efficiency

Overview of Activation-aware Weight Quantization (AWQ) Approach

Activation-aware Weight Quantization (AWQ) represents a significant advancement in the quantization of large language models (LLMs), which are central to natural language understanding and generation tasks. The core innovation of AWQ is a low-bit, weight-only quantization method that decides which weights to protect, and how strongly to scale them, based on activation magnitudes rather than on the weight values themselves. The approach rests on the observation that a small subset of salient weights, when appropriately scaled, can substantially reduce the accuracy loss caused by quantization.
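The effect of scaling can be summarized with the quantizer notation used in the AWQ paper, where $w$ is a weight, $x$ its input activation, $N$ the bit width and $\Delta$ the quantization step. This is a condensed restatement rather than the paper's full derivation:

$$
Q(w) = \Delta \cdot \mathrm{Round}\!\left(\frac{w}{\Delta}\right), \qquad \Delta = \frac{\max(|w|)}{2^{N-1}}
$$

Multiplying a weight by $s > 1$ and dividing its input by $s$ leaves the full-precision product $wx$ unchanged, but changes the quantized product:

$$
Q(w \cdot s)\cdot \frac{x}{s} = \Delta' \cdot \mathrm{Round}\!\left(\frac{w s}{\Delta'}\right) \cdot x \cdot \frac{1}{s}
$$

The rounding error itself does not depend on $s$, and scaling a single channel usually leaves the group maximum nearly unchanged, so $\Delta' \approx \Delta$ and the error on that channel shrinks by the ratio

$$
\frac{\Delta'}{\Delta} \cdot \frac{1}{s} \approx \frac{1}{s}.
$$

The per-channel scales are then chosen on calibration activations $\mathbf{X}$ by minimizing the output error, with $\mathbf{s}_{\mathbf{X}}$ the average activation magnitude per input channel and $\alpha \in [0, 1]$ found by grid search:

$$
\mathbf{s}^{*} = \arg\min_{\mathbf{s}} \left\| Q\big(\mathbf{W} \cdot \mathrm{diag}(\mathbf{s})\big)\big(\mathrm{diag}(\mathbf{s})^{-1} \cdot \mathbf{X}\big) - \mathbf{W}\mathbf{X} \right\|, \qquad \mathbf{s} = \mathbf{s}_{\mathbf{X}}^{\alpha}
$$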

Key Findings and Methodological Insights

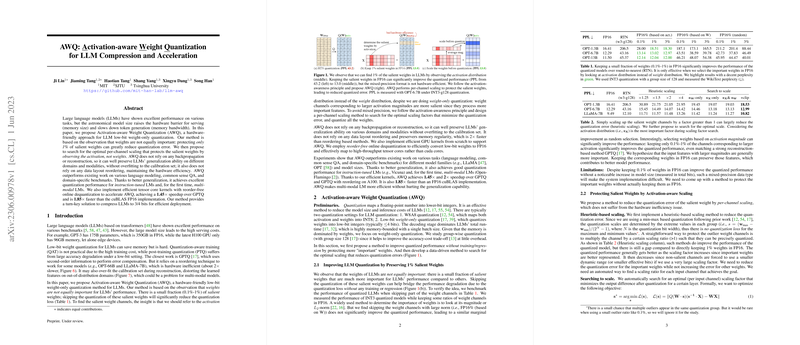

- AWQ rests on the observation that weights differ widely in their impact on model performance: protecting as little as 0.1% to 1% of salient weights, selected according to activation magnitude, can substantially reduce quantization error.

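- Instead of keeping those salient weights in higher precision, which would require less hardware-efficient mixed-precision formats, AWQ introduces per-channel scaling: it scales up the salient weight channels before quantization and divides the corresponding activations by the same factor.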

- Unlike prior methods that rely on backpropagation or reconstruction, AWQ requires neither, so it does not overfit the calibration set and preserves generalization across diverse domains and modalities.
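The calibration procedure above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the official AWQ implementation: it uses asymmetric round-to-nearest (RTN) INT4 quantization with an assumed group size of 128, measures the output error of a single linear layer, and grid-searches an exponent $\alpha$ for per-channel scales $s = s_x^{\alpha}$, where $s_x$ is the mean absolute activation of each input channel. Only forward passes are used, with no gradients. The helper names, synthetic data and "salient" channel indices are hypothetical and exist only for demonstration.

```python
import numpy as np


def quantize_rtn(w, n_bits=4, group_size=128):
    """Asymmetric round-to-nearest fake quantization (assumed scheme).

    w has shape (out_features, in_features). Each row is split into groups of
    `group_size` input channels, each with its own step size and zero point.
    Returns dequantized weights so the error can be measured in floating point.
    """
    out_f, in_f = w.shape
    assert in_f % group_size == 0, "in_features must be divisible by group_size"
    g = w.reshape(out_f, in_f // group_size, group_size)
    w_min = g.min(axis=-1, keepdims=True)
    w_max = g.max(axis=-1, keepdims=True)
    q_max = 2**n_bits - 1
    step = np.maximum(w_max - w_min, 1e-5) / q_max
    zero = np.round(-w_min / step)
    q = np.clip(np.round(g / step) + zero, 0, q_max)
    return ((q - zero) * step).reshape(out_f, in_f)


def output_error(w, w_eff, x):
    """Mean squared error between the FP output x @ w.T and the quantized output."""
    return float(np.mean((x @ w.T - x @ w_eff.T) ** 2))


def awq_search(w, x, n_grid=20, n_bits=4, group_size=128):
    """Grid-search alpha in {0, 1/n_grid, ...} for scales s = s_x ** alpha.

    Returns (best_error, best_alpha, best_scales). Hypothetical helper.
    """
    s_x = np.abs(x).mean(axis=0)  # average activation magnitude per input channel
    best = (float("inf"), 0.0, None)
    for i in range(n_grid):
        alpha = i / n_grid
        s = np.maximum(s_x**alpha, 1e-4)  # avoid zero scales
        s = s / np.sqrt(s.max() * s.min())  # keep scales centred around 1
        # Q(W * s) applied to x / s equals x @ (Q(W * s) / s).T, so fold 1/s back in.
        w_eff = quantize_rtn(w * s, n_bits, group_size) / s
        err = output_error(w, w_eff, x)
        if err < best[0]:
            best = (err, alpha, s)
    return best


rng = np.random.default_rng(0)
w = rng.normal(size=(256, 512))  # synthetic layer weights
x = rng.normal(size=(64, 512))  # synthetic calibration activations
salient = [3, 200, 300, 450]  # hypothetical salient input channels
x[:, salient] *= 20.0  # these channels carry much larger activations

rtn_err = output_error(w, quantize_rtn(w), x)
best_err, best_alpha, _ = awq_search(w, x)
print(f"RTN error: {rtn_err:.4f}, scaled error: {best_err:.4f} (alpha={best_alpha:.2f})")
```

With $\alpha = 0$ every scale equals 1, so the search can never do worse than plain RTN on the calibration data. Larger $\alpha$ protects high-activation channels more strongly but also widens the quantization step for the other weights in the same group; the grid search balances these two effects.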

Comparative Performance and Results

- Empirical evaluations show that AWQ outperforms existing quantization approaches on a variety of benchmarks, including language modeling and domain-specific tasks such as coding and math.

- Served with its accompanying inference framework, AWQ-quantized models run more than 3× faster than the Hugging Face FP16 implementation across various LLMs, and the smaller weight footprint makes it possible to run larger models on hardware with limited memory capacity.

- The method’s strengths extend to instruction-tuned LMs and, notably, to multi-modal LMs, which the authors report as the first successful low-bit weight quantization of such models.

Implications and Future Directions

AWQ has several implications for AI and LLM research:

- It addresses a critical challenge in the deployment of LLMs, making them more accessible and efficient for real-world applications, especially on edge devices.

- The method’s hardware efficiency and broad applicability suggest wider adoption in serving solutions and platforms, potentially making it a standard approach to LLM quantization.

- Future research may explore further optimizations in scaling techniques and extend the approach to a wider array of model architectures and tasks.

System Implementation and Deployment

AWQ is accompanied by TinyChat, an efficient and flexible inference framework that turns the theoretical benefits of 4-bit weight quantization into practical speedups. TinyChat uses kernel fusion and other optimizations to accelerate LLM inference. Deploying the 70B-parameter Llama-2 model on a resource-constrained device such as the NVIDIA Jetson Orin illustrates the real-world applicability of AWQ.
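A back-of-the-envelope estimate shows why 4-bit weights matter for this kind of deployment. The sketch below counts weight storage only (no KV cache, activations or runtime overhead) and treats the model as a nominal 70 billion parameters. It assumes, for illustration, group-wise quantization with a group size of 128 and one FP16 scale plus one FP16 zero point per group; the 64 GB target corresponds to the Jetson AGX Orin 64GB module and is likewise an assumption, since the exact device configuration is not stated above. The function name is hypothetical.

```python
# Rough weight-storage estimate for a nominal 70B-parameter model.
# Assumptions (illustrative): group size 128, FP16 scale + FP16 zero point per
# group, weights only (KV cache and activations are ignored).


def weight_memory_gb(n_params, bits_per_weight, group_size=None, overhead_bits_per_group=0):
    """Return approximate weight storage in GB (1 GB = 1e9 bytes)."""
    total_bits = n_params * bits_per_weight
    if group_size:
        # Per-group metadata such as quantization scales and zero points.
        total_bits += (n_params / group_size) * overhead_bits_per_group
    return total_bits / 8 / 1e9


N_PARAMS = 70e9
DEVICE_MEMORY_GB = 64  # assumed target: Jetson AGX Orin 64GB module

fp16_gb = weight_memory_gb(N_PARAMS, 16)
int4_gb = weight_memory_gb(N_PARAMS, 4, group_size=128, overhead_bits_per_group=32)

print(f"FP16 weights: {fp16_gb:.1f} GB, fits: {fp16_gb < DEVICE_MEMORY_GB}")
print(f"INT4 weights: {int4_gb:.1f} GB, fits: {int4_gb < DEVICE_MEMORY_GB}")
```

Under these assumptions the script reports 140.0 GB for FP16 weights, which does not fit, and 37.2 GB for INT4 weights, which leaves room for the KV cache and runtime buffers the estimate ignores.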

Conclusion

Activation-aware Weight Quantization (AWQ) represents a significant leap forward in the efficient and effective quantization of LLMs. By focusing on weight saliency and applying per-channel scaling, AWQ preserves model accuracy after quantization better than prior approaches. Its applicability across a variety of LLMs and tasks, together with substantial speed and memory savings, positions AWQ as a key advancement in making powerful AI models more accessible and practical for a wide range of applications.