- The paper introduces Code Prompting, a neural symbolic method that uses Python code as an intermediate reasoning step to enhance LLM problem-solving.

- It uses a two-stage process, code generation followed by execution, which reduces ambiguity and can outperform traditional Chain-of-Thought prompting.

- The method improves performance on arithmetic and symbolic reasoning tasks and incorporates self-debugging and ensemble techniques for further accuracy gains.

Code Prompting for Complex Reasoning in LLMs

Code Prompting is a neural symbolic method for enhancing complex reasoning in LLMs. Instead of natural language rationales, it prompts the model to generate code, which then serves as the intermediate reasoning step. The aim is to mitigate the limitations of earlier natural-language prompting strategies.

Introduction to Code Prompting

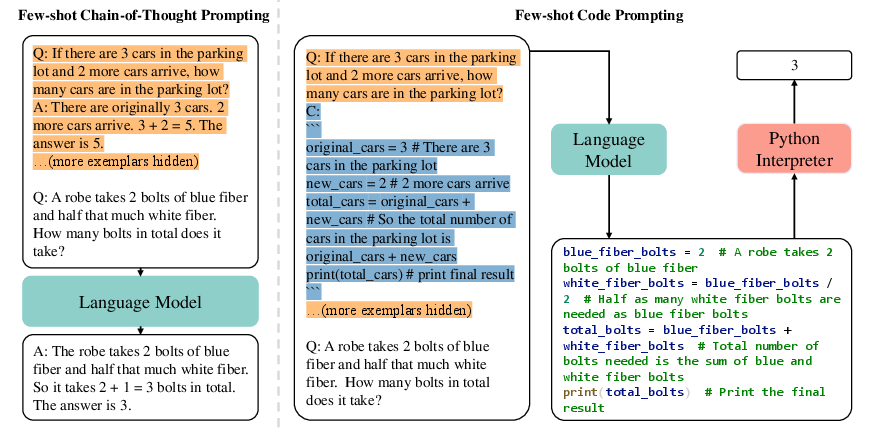

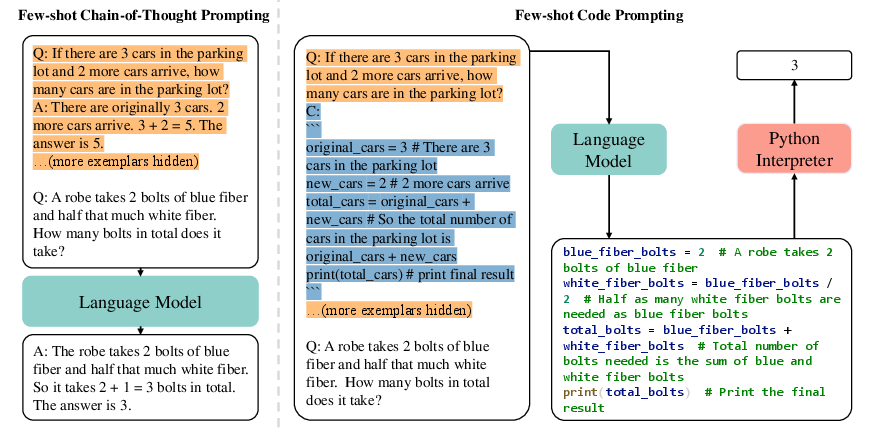

The paper presents Code Prompting as an efficient method that leverages structured symbolic representations to improve LLM reasoning. Unlike Chain-of-Thought (CoT) prompting, which produces rationales in natural language, Code Prompting produces code that the LLM can either interpret itself or hand to a Python interpreter for execution.

Figure 1: The pipelines of zero-shot CoT prompting and zero-shot code prompting.

Code Prompting unfolds in two distinct stages:

- Code Generation: The LLM generates Python code based on the task description.

- Solution Execution: The generated code is either interpreted directly by the LLM for reasoning or executed externally via a Python interpreter.
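The two stages can be sketched in Python as follows. This is a minimal illustration, not the paper's implementation: the prompt template `CODE_PROMPT`, the helper names, and the convention that the generated program stores its result in a variable named `answer` are all hypothetical, and `stub_llm` stands in for a real model call.

```python
from typing import Callable

# Hypothetical prompt template; the paper's exact wording is not reproduced here.
CODE_PROMPT = (
    "Let's think step by step. Write Python code that solves the task "
    "and stores the final result in a variable named `answer`.\n\nTask: {task}"
)


def generate_code(task: str, llm: Callable[[str], str]) -> str:
    """Stage 1: ask the model for a Python program that solves the task."""
    return llm(CODE_PROMPT.format(task=task))


def execute_code(code: str) -> object:
    """Stage 2 (external variant): run the program and read back `answer`."""
    namespace: dict = {}
    exec(code, namespace)  # model output is untrusted: sandbox this in practice
    return namespace["answer"]


def stub_llm(prompt: str) -> str:
    # Stand-in for a real model call; returns a fixed program for illustration.
    return (
        "apples = 5\n"
        "eaten = 2\n"
        "bought = 4\n"
        "answer = apples - eaten + bought\n"
    )


task = "Tom has 5 apples, eats 2, then buys 4 more. How many apples does he have?"
code = generate_code(task, stub_llm)
print(execute_code(code))  # 7
```

Only the external-execution variant is shown. In the other variant, the generated code would instead be placed in a second prompt and the LLM asked to reason through it and state the answer itself.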

Symbolic Reasoning with Code Prompting

The study evaluated Code Prompting on symbolic reasoning tasks such as last letter concatenation and coin flipping, where it substantially outperformed CoT prompting. The structured nature of code enables precise task decomposition and removes much of the ambiguity that often burdens natural language prompts.
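For illustration, the functions below show the kind of code a model might generate for the two symbolic tasks. They are hypothetical examples written for this summary, not outputs reported in the paper; the function names and the encoding of the coin-flip problem as a list of booleans (one per person, True if that person flips the coin) are assumptions.

```python
def last_letter_concatenation(words: list[str]) -> str:
    # Take the final character of each word and join them in order.
    return "".join(word[-1] for word in words)


def coin_flip(initially_heads: bool, flips: list[bool]) -> bool:
    # Each True in `flips` means one person flipped the coin, toggling its state.
    heads = initially_heads
    for flipped in flips:
        if flipped:
            heads = not heads
    return heads


print(last_letter_concatenation(["Elon", "Musk"]))  # nk
print(coin_flip(True, [True, False, True]))  # True (two flips cancel out)
```

Once a task is written this way, the answer follows mechanically from the code rather than from a free-form rationale.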

Advantages:

Arithmetic Reasoning and Code Prompting

For arithmetic reasoning, Code Prompting was evaluated on SingleEq, AddSub, MultiArith, SVAMP, and GSM8K. It achieved accuracy competitive with few-shot methods and showed particular benefits in the zero-shot setting.
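As a sketch of what code prompting might produce for an arithmetic word problem, consider the hypothetical problem in the comments below (it is not taken from any of the listed datasets). Each quantity gets a named variable, and the final result is stored in `answer`, an assumed convention.

```python
# Hypothetical word problem (not from any of the datasets above):
# "A baker bakes 4 trays of 12 muffins, sells 18, and packs the rest
#  into boxes of 6. How many boxes does she fill?"

trays = 4
muffins_per_tray = 12
sold = 18
box_size = 6

baked = trays * muffins_per_tray  # 48 muffins baked
remaining = baked - sold          # 30 muffins left
answer = remaining // box_size    # 5 full boxes

print(answer)  # 5
```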

Error Analysis:

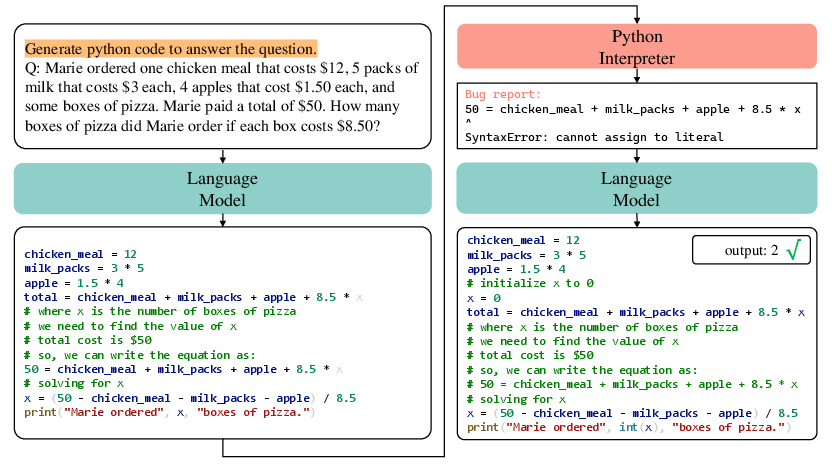

The experiments showed that Code Prompting excels at tasks involving straightforward calculations. Conversely, it struggled with problems that require solving equations, as errors on datasets such as GSM8K revealed; addressing this required adding instructions that direct the model to use sympy.

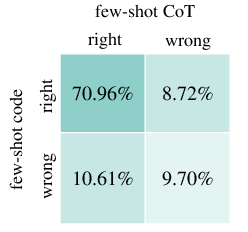

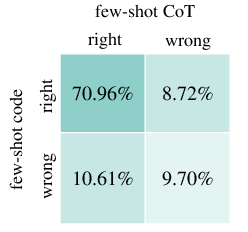

Figure 3: Error distribution of few-shot code prompting and few-shot CoT prompting regarding dataset GSM8K.
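The sympy-based remedy can be sketched on a hypothetical problem that requires solving a system of equations. The problem and variable names are invented for illustration; `symbols`, `Eq`, and `solve` are real sympy functions, but the exact instruction text the paper uses is not reproduced here. The example needs sympy installed (`pip install sympy`).

```python
from sympy import Eq, solve, symbols

# Hypothetical problem: "Two numbers add up to 30 and differ by 8.
# What is the larger number?"
x, y = symbols("x y")
equations = [Eq(x + y, 30), Eq(x - y, 8)]

# For a linear system given with a list of symbols, solve() returns a
# dict mapping each symbol to its value.
solution = solve(equations, [x, y])
answer = max(int(solution[x]), int(solution[y]))

print(answer)  # 19
```

Delegating the algebra to a solver means the model only has to translate the problem into equations correctly, rather than also carry out the elimination steps itself.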

Augmentation Techniques

Various augmentation strategies were developed:

- Self-Debugging: The generated code is checked for errors, and the LLM is prompted to debug and correct it.

- Irrelevant Information (irr) Handling: Instructions to disregard non-essential data within problem statements.

- Equation Instruction (equ): Guidance on using specialized packages, such as sympy, for complex mathematical operations like equation solving.
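A self-debugging loop can be sketched as below. This is a hypothetical minimal version, not the paper's procedure: the helper names, the feedback prompt, the `answer` convention, and the cap of two repair attempts are assumptions, and `stub_llm` stands in for a real model that returns corrected code.

```python
from typing import Callable, Optional


def run_program(code: str) -> object:
    """Execute generated code and return the value it assigns to `answer`."""
    namespace: dict = {}
    exec(code, namespace)  # model output is untrusted: sandbox this in practice
    return namespace["answer"]


def self_debug(
    code: str, llm: Callable[[str], str], max_fixes: int = 2
) -> Optional[object]:
    """Run `code`; on an error, ask the model to repair it, up to `max_fixes` times."""
    for attempt in range(max_fixes + 1):
        try:
            return run_program(code)
        except Exception as exc:
            if attempt == max_fixes:
                break  # out of repair attempts
            # Feed the failing code and its error message back to the model.
            prompt = (
                "The following Python code raised an error.\n\n"
                f"{code}\n\n"
                f"Error: {type(exc).__name__}: {exc}\n\n"
                "Return a corrected version that stores the result in `answer`."
            )
            code = llm(prompt)
    return None


def stub_llm(prompt: str) -> str:
    # Stand-in for a real model call: always returns a corrected program.
    return "total = 10 + 5\nanswer = total\n"


buggy = "total = 10 + 5\nanswer = totl\n"  # NameError: misspelled variable
print(self_debug(buggy, stub_llm))  # 15
```

Returning `None` after the last failed attempt lets the caller fall back to another strategy, for example a CoT answer.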

Ensemble Technique

The paper explored combining CoT and Code Prompting through ensemble methods. This combination achieved higher accuracy by leveraging the complementary strengths of both approaches.

Results:

- Ensemble Voting: Voting over the answers from both methods improved performance over either method alone, because the two approaches handle different facets of the reasoning process.
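One simple way to realize ensemble voting is a majority vote over answers pooled from both methods. The sketch below is an assumption about how such a vote might work, not the paper's exact procedure; in particular, the tie-breaking rule that favors an answer produced by code prompting is invented for illustration.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def ensemble_vote(
    cot_answers: Sequence[Hashable], code_answers: Sequence[Hashable]
) -> Hashable:
    """Majority vote over answers pooled from CoT and code prompting runs.

    Tie-breaking (an assumption, not from the paper): among tied answers,
    prefer one that code prompting produced; otherwise take the first seen.
    """
    votes = Counter(list(cot_answers) + list(code_answers))
    top = max(votes.values())
    tied = [ans for ans, count in votes.items() if count == top]
    for ans in tied:
        if ans in code_answers:
            return ans
    return tied[0]


print(ensemble_vote([18, 20, 18], [20, 20]))  # 20 (3 votes vs. 2)
print(ensemble_vote([18, 18], [20, 20]))      # 20 (tie broken toward code)
```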

Conclusion

Code Prompting offers a robust framework for enhancing LLM reasoning capabilities through structured code-based prompts. Its systematic approach to task decomposition, along with error analysis and augmentation strategies, paves the way for more accurate and efficient LLM-driven problem-solving. Future work could expand on integrating additional symbolic languages and exploring cross-domain applicability.

Overall, Code Prompting highlights a promising shift toward neural-symbolic integration in LLM prompt engineering and underscores the role code-based strategies can play in advancing AI reasoning.