Better Zero-Shot Reasoning with Self-Adaptive Prompting

The paper "Better Zero-Shot Reasoning with Self-Adaptive Prompting" by Xingchen Wan et al. explores how to improve the zero-shot reasoning of large language models (LLMs) with an approach termed Consistency-based Self-adaptive Prompting (COSP). It addresses the limitations of zero-shot and few-shot reasoning setups and proposes a systematic way to improve performance without relying on ground-truth labels or extensive human annotation.

Key Issues in Current Approaches

The advent of LLMs has significantly advanced the state of the art in NLP tasks. With techniques such as chain-of-thought (CoT) prompting, LLMs have demonstrated strong performance on tasks that require step-by-step reasoning. However, existing methods have clear limitations:

- Few-shot CoT, where models are prompted with examples, is highly sensitive to the choice of these examples. The example selection process is labor-intensive and requires domain-specific expertise.

- Zero-shot CoT alleviates the need for labeled examples by using trigger phrases to elicit reasoning. However, it often underperforms few-shot methods due to the lack of tailored guidance for diverse tasks.
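The difference between the two setups is easiest to see in how the prompt is built. The following is a minimal sketch, not the paper's code: the `Q:`/`A:` layout and the function names are assumptions made for illustration, and the trigger phrase is the commonly used "Let's think step by step."

```python
from typing import List, Tuple

# Commonly used zero-shot CoT trigger phrase.
ZERO_SHOT_TRIGGER = "Let's think step by step."


def zero_shot_cot_prompt(question: str) -> str:
    """Zero-shot CoT: no examples, only a trigger phrase after the question."""
    return f"Q: {question}\nA: {ZERO_SHOT_TRIGGER}"


def few_shot_cot_prompt(question: str, demos: List[Tuple[str, str]]) -> str:
    """Few-shot CoT: worked (question, rationale) pairs precede the question.

    The Q:/A: layout is an illustrative assumption, not a fixed standard.
    """
    blocks = [f"Q: {q}\nA: {rationale}" for q, rationale in demos]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)
```

In the few-shot case, the quality of `demos` is exactly what the model is sensitive to; COSP aims to fill that argument automatically rather than by hand.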

Proposed Method: COSP

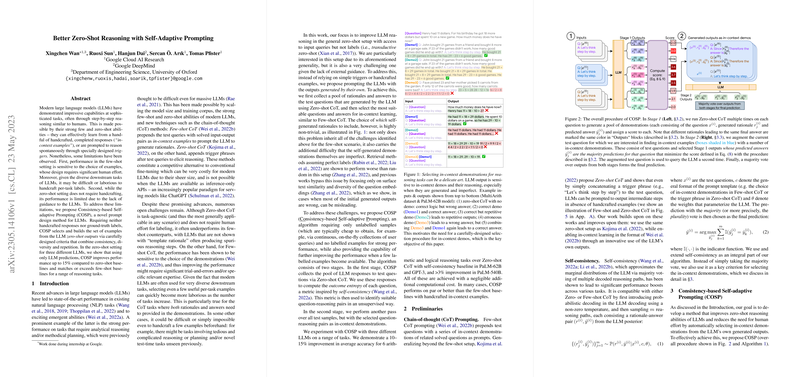

COSP addresses these limitations by employing a two-stage algorithm that automatically selects high-quality in-context examples from the model's own zero-shot outputs:

- Stage 1 (Candidate Generation): The model generates multiple reasoning paths for each test query via Zero-shot CoT, and these paths form a pool of candidate demonstrations. Candidates are scored on the consistency of the final answers across paths (using entropy as a proxy for model confidence, with lower entropy indicating more agreement) and on the diversity of reasoning and responses.

- Stage 2 (Demonstration Utilization): The selected candidate examples from the first stage are used as in-context demonstrations for the LLM. The model is queried again, incorporating these demonstrations to improve the reasoning process.
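The two stages can be sketched end to end as follows. This is a simplified illustration under stated assumptions, not the paper's implementation: `sample` is a hypothetical wrapper that returns `m` (rationale, answer) pairs from an LLM for a given prompt, the prompt layout is invented for the example, and candidates are ranked by plain majority agreement as a stand-in for the paper's entropy-and-diversity score.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

Path = Tuple[str, str]                      # (rationale, final answer)
Sampler = Callable[[str, int], List[Path]]  # hypothetical LLM wrapper

TRIGGER = "Let's think step by step."


def majority(paths: List[Path]) -> Tuple[str, float]:
    """Return the most frequent answer and the fraction of paths that agree."""
    answer, count = Counter(a for _, a in paths).most_common(1)[0]
    return answer, count / len(paths)


def cosp_sketch(questions: List[str], sample: Sampler,
                m: int = 5, k: int = 2) -> Dict[str, str]:
    # Stage 1: sample m zero-shot CoT paths per question and build a pool.
    pool = []
    for q in questions:
        paths = sample(f"Q: {q}\nA: {TRIGGER}", m)
        answer, agreement = majority(paths)
        # Keep one rationale that leads to the majority answer.
        rationale = next(r for r, a in paths if a == answer)
        pool.append((agreement, q, rationale, answer))

    # Select the k most self-consistent candidates (simplified criterion).
    pool.sort(key=lambda item: item[0], reverse=True)
    demos = "\n\n".join(
        f"Q: {q}\nA: {r} The answer is {a}." for _, q, r, a in pool[:k]
    )

    # Stage 2: query again with the selected demonstrations prepended.
    results = {}
    for q in questions:
        paths = sample(f"{demos}\n\nQ: {q}\nA: {TRIGGER}", m)
        results[q] = majority(paths)[0]
    return results
```

No labels enter the loop at any point: the demonstrations are the model's own outputs, filtered only by how consistently the model arrived at them.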

Novel Contributions

- The use of outcome entropy to score and select candidate demonstrations is critical to COSP. This metric assesses the reliability of self-generated answers by the model, fostering the selection of confident and consistent responses.

- A penalty for repetitiveness ensures the diversity of examples, enhancing the robustness of the in-context learning process.

- COSP leverages self-consistency to automatically curate effective demonstrations without human intervention or reliance on labeled data, thus significantly reducing the cost and effort involved in model guidance.
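A scoring function in the spirit of the first two contributions might look like the sketch below. Several parts are assumptions made for illustration: the entropy is normalized by `log(m)` so it lies in [0, 1], repetitiveness is approximated by word-overlap (Jaccard) similarity between sentences rather than any embedding-based measure, and the weight `lam` and its default are hypothetical. Lower scores indicate better candidates.

```python
import math
from collections import Counter
from itertools import combinations
from typing import List


def normalized_entropy(answers: List[str]) -> float:
    """Entropy of the sampled answers, scaled to [0, 1] by log(m).

    0 means every path agreed; 1 means every path gave a different answer.
    """
    m = len(answers)
    if m < 2:
        return 0.0
    counts = Counter(answers)
    h = -sum((c / m) * math.log(c / m) for c in counts.values())
    return h / math.log(m)


def repetitiveness(rationale: str) -> float:
    """Mean pairwise Jaccard overlap between sentences of a rationale.

    A word-overlap stand-in (assumption) for a semantic similarity measure.
    """
    steps = [set(s.lower().split()) for s in rationale.split(".") if s.strip()]
    if len(steps) < 2:
        return 0.0
    sims = [len(a & b) / len(a | b) for a, b in combinations(steps, 2)]
    return sum(sims) / len(sims)


def demo_score(answers: List[str], rationale: str, lam: float = 0.2) -> float:
    """Combined score: answer inconsistency plus a weighted repetition penalty."""
    return normalized_entropy(answers) + lam * repetitiveness(rationale)
```

A rationale that reaches a unanimous answer but repeats the same step over and over is still penalized, which keeps degenerate, looping outputs out of the demonstration set.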

Empirical Results

The paper validates COSP across three LLMs (PaLM-62B, PaLM-540B, and GPT-3) and a variety of arithmetic and commonsense or logical reasoning tasks (e.g., MultiArith, GSM8K, CSQA). The results demonstrate that:

- COSP improves zero-shot performance by up to 15% compared to zero-shot baselines.

- COSP matches or exceeds few-shot baselines that use manually selected examples, highlighting the efficacy of the method.

- The method is particularly advantageous for smaller models (e.g., PaLM-62B), where it significantly reduces the performance gap with larger, more resource-intensive models (e.g., PaLM-540B).

The paper provides an extensive comparison with other adaptive zero-shot techniques like Auto-CoT, showing that COSP's sophisticated selection criteria yield more reliable and higher-quality demonstrations, leading to consistent performance improvements.

Implications and Future Directions

COSP's ability to enhance zero-shot reasoning has both practical and theoretical implications:

- Practical Implications: COSP reduces the dependency on human-crafted examples and annotations, making it feasible to leverage LLMs for a wider range of tasks in a cost-effective manner. This approach democratizes access to advanced reasoning capabilities by enabling the use of smaller models efficiently.

- Theoretical Implications: The method underscores the importance of model introspection, that is, using a model's own uncertainty measures to choose the demonstrations that guide it. This approach paves the way for future research in self-aware and adaptive AI systems.

Speculations on Future Developments

Looking forward, the principles underpinning COSP could be extended to other types of NLP tasks beyond logical and arithmetic reasoning. Additionally, combining COSP with continual learning paradigms where models dynamically adapt to new data during deployment could further enhance zero-shot learning capabilities. Moreover, extending COSP to interact with external tools and datasets might improve its application scope, making it more versatile in real-world scenarios.

In conclusion, COSP presents a compelling step forward in zero-shot reasoning for LLMs, effectively balancing the trade-off between model performance and the need for human supervision. The two-stage, self-adaptive framework detailed in this paper offers a scalable way to improve LLM reasoning without labeled data or hand-written demonstrations.