Deepfake Text Detection in the Wild: A Comprehensive Evaluation

The paper "Deepfake Text Detection in the Wild" addresses the problem of identifying machine-generated text, which has become harder as the output of LLMs has grown more sophisticated. The task matters in domains such as fake news and plagiarism detection. To study it, the authors construct a diverse, comprehensive benchmark dataset for evaluating deepfake text detection across varying contexts and generating LLMs.

Study Setup and Dataset Construction

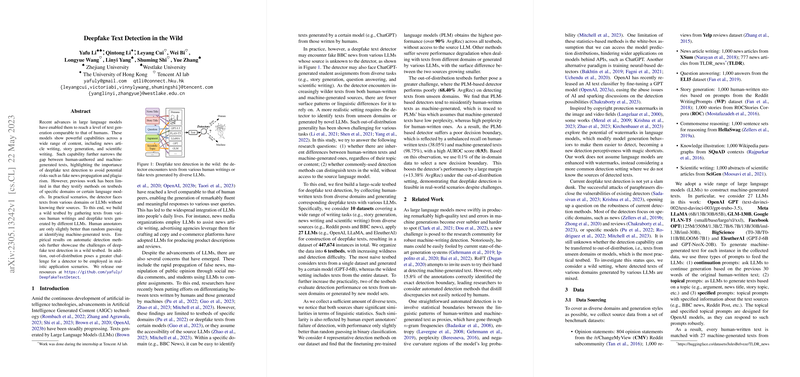

The researchers aim to bridge the gap between domain-specific deepfake text detection and the real-world scenario in which texts from many sources and of many kinds coexist. They compile a large-scale testbed of both human-written and machine-generated texts. The dataset contains over 447,000 instances spanning ten diverse writing tasks, with source texts drawn from platforms such as Reddit, BBC, and scientific literature. The generating LLMs include OpenAI's GPT variants, Meta's LLaMA, and several models from Google and EleutherAI, covering a broad range of generation styles and model architectures.

The dataset is organized into multiple testbeds of increasing detection difficulty. Testing ranges from domain-specific scenarios to cross-domain and cross-model settings, and extends to out-of-distribution generalization. This arrangement benchmarks existing detectors and also provides a template for future evaluation in this area.
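The cross-domain and cross-model settings can be pictured as leave-one-out splits over a metadata field. The following is a minimal sketch under that assumption, not the authors' construction code; the record fields (`domain`, `model`) and the toy values are hypothetical.

```python
from typing import Dict, Iterator, List, Tuple

Record = Dict[str, str]


def leave_one_out_splits(
    records: List[Record], key: str
) -> Iterator[Tuple[str, List[Record], List[Record]]]:
    """Yield (held_out_value, train, test) for each distinct value of `key`."""
    for held_out in sorted({r[key] for r in records}):
        # Train on every other domain (or model), test on the held-out one.
        train = [r for r in records if r[key] != held_out]
        test = [r for r in records if r[key] == held_out]
        yield held_out, train, test


# Toy records; field names and values are illustrative only.
records = [
    {"text": "sample a", "domain": "news", "model": "human"},
    {"text": "sample b", "domain": "news", "model": "gpt"},
    {"text": "sample c", "domain": "reddit", "model": "human"},
    {"text": "sample d", "domain": "reddit", "model": "llama"},
    {"text": "sample e", "domain": "science", "model": "gpt"},
]

for held_out, train, test in leave_one_out_splits(records, "domain"):
    print(held_out, len(train), len(test))
```

Passing `"model"` instead of `"domain"` as the key gives the analogous cross-model split, in which the detector never sees text from the held-out generator during training.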

Detection Methods and Evaluation Metrics

Four detection methods are examined: a fine-tuned pre-trained language model (Longformer), the feature-based classifiers GLTR and FastText, and the zero-shot approach DetectGPT. Notably, the Longformer detector, which builds on a pre-trained language model through fine-tuning, consistently performs best. Performance is measured with AvgRec (average recall) and AUROC, which show how detection quality varies across the testbeds.
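The two metrics named above can be computed in a few lines. The sketch below assumes that AvgRec is the mean of the recall on human-written texts and the recall on machine-generated texts, and it uses the pairwise-comparison definition of AUROC; the label convention (1 = machine, 0 = human) and the toy scores are assumptions made for illustration.

```python
from typing import Sequence


def avg_recall(labels: Sequence[int], preds: Sequence[int]) -> float:
    """Mean of human-class recall and machine-class recall (0 = human, 1 = machine)."""
    recalls = []
    for cls in (0, 1):
        total = sum(1 for y in labels if y == cls)
        if total == 0:
            raise ValueError(f"no examples of class {cls}")
        hits = sum(1 for y, p in zip(labels, preds) if y == cls and p == cls)
        recalls.append(hits / total)
    return sum(recalls) / 2


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Chance that a random machine text outscores a random human text (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one example of each class")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy detector scores: higher means "more likely machine-generated".
labels = [0, 0, 0, 1, 1, 1]
scores = [0.1, 0.4, 0.6, 0.35, 0.8, 0.9]
preds = [1 if s >= 0.5 else 0 for s in scores]

print(round(avg_recall(labels, preds), 3))  # 0.667
print(round(auroc(labels, scores), 3))      # 0.778
```

AvgRec depends on the chosen decision threshold, while AUROC does not, which is why the two are reported together. For real experiments, scikit-learn's `balanced_accuracy_score` and `roc_auc_score` compute the same quantities for binary labels.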

Key Findings and Implications

Unsurprisingly, human annotators and models like ChatGPT struggle to identify machine-generated texts reliably, reflecting the sophistication of modern LLM output. A significant finding is how closely machine text now resembles human text in linguistic structure: both the failed human detection and the statistical analysis point to only a small divergence between the two. The Longformer detector nevertheless maintains notable performance as the testbeds grow harder, indicating the usefulness of pre-trained models for this task.

The out-of-distribution experiments address generalization directly. The paper exposes a bias in PLM-based detectors, which rely on perplexity as a deciding factor, and underscores the importance of optimizing the decision boundary to improve detection accuracy.
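One simple form of decision boundary optimization is to re-select the score cutoff on a small labeled sample from the new distribution. The sketch below illustrates that idea and is not the paper's exact procedure; the function names, the choice of average recall as the selection criterion, and the toy scores are all assumptions.

```python
from typing import Sequence, Tuple


def avg_recall(labels: Sequence[int], preds: Sequence[int]) -> float:
    """Mean of human-class recall and machine-class recall (0 = human, 1 = machine)."""
    recalls = []
    for cls in (0, 1):
        total = sum(1 for y in labels if y == cls)
        hits = sum(1 for y, p in zip(labels, preds) if y == cls and p == cls)
        recalls.append(hits / total)
    return sum(recalls) / 2


def pick_threshold(labels: Sequence[int], scores: Sequence[float]) -> Tuple[float, float]:
    """Return (cutoff, avg_recall) for the cutoff that maximizes average recall.

    A text is predicted machine-generated when its score is >= cutoff.
    Candidates are the observed scores; the lowest best cutoff wins ties.
    """
    best_t, best_val = 0.0, -1.0
    for t in sorted(set(scores)):
        preds = [1 if s >= t else 0 for s in scores]
        val = avg_recall(labels, preds)
        if val > best_val:
            best_t, best_val = t, val
    return best_t, best_val


# Toy out-of-distribution sample: the detector scores human texts too high,
# so the default cutoff of 0.5 labels everything as machine-generated.
labels = [0, 0, 0, 0, 1, 1, 1, 1]
scores = [0.55, 0.6, 0.7, 0.8, 0.75, 0.85, 0.9, 0.95]

default_preds = [1 if s >= 0.5 else 0 for s in scores]
print(avg_recall(labels, default_preds))  # 0.5
print(pick_threshold(labels, scores))     # (0.75, 0.875)
```

In this toy case the detector's ranking of texts is still informative, and only the cutoff is misplaced, so moving the boundary recovers most of the lost recall without retraining.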

Theoretical and Practical Implications

The differences the paper identifies in sentiment, grammatical correctness, and moderation suggest directions for future research. Sentiment polarity differs only negligibly between human and machine text, while machine-generated text is often more formal; such characteristics could guide detector training and fine-tuning strategies.

This work paves the way for future research on robust, generalizable models that can identify artificial text across diverse and evolving kinds of writing. It reinforces the need for continued advances in detection methods, with practical implications for media platforms, educational institutions, and other settings where deepfake texts might undermine authenticity and integrity.

In summary, the paper offers a comprehensive evaluation of deepfake text detection and provides a valuable resource and benchmark for ongoing research in machine-generated text identification. As LLMs continue to evolve, its findings and methodology will remain important for mitigating the risks of artificially generated content.