Improving Autoregressive Image Generation with Dynamic Vector Quantization

The paper "Towards Accurate Image Coding: Improved Autoregressive Image Generation with Dynamic Vector Quantization" targets autoregressive image generation built on vector quantization (VQ). The authors address limitations of existing VQ-based methods and propose a two-stage framework that dynamically adapts the granularity at which each image region is encoded, according to that region's information density.

Background and Motivation

Deep generative models have significantly improved the quality of image generation. VQ is widely adopted because it represents images as discrete codes, as in generative models like DALL-E and latent diffusion models. Typically, these models use fixed-length coding: every image region is encoded with the same number of codes. This ignores the fact that information density varies across an image. As a result, information-dense regions may be under-represented, while sparse regions are encoded redundantly. This mismatch hurts both generation quality and speed.

The authors instead advocate a variable-length representation in which the number of codes assigned to a region depends on its information density, in the spirit of classical information theory and adaptive coding. They also depart from the conventional raster-scan generation order and propose a coarse-to-fine autoregressive strategy, which they argue is a more natural order for generating visual content.

Methodology

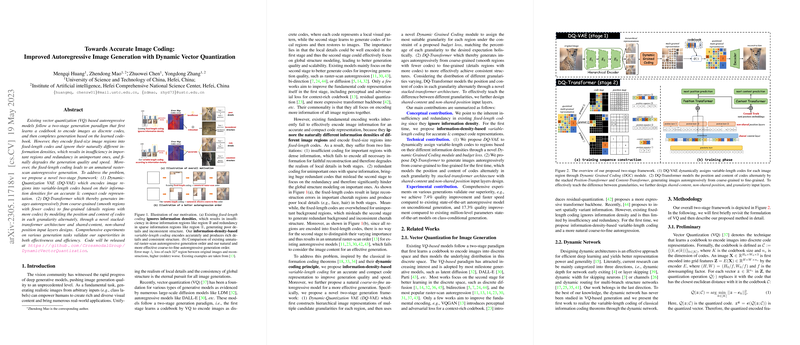

The proposed framework, encapsulated in Dynamic-Quantization VAE (DQ-VAE) and DQ-Transformer, operates in two stages:

- DQ-VAE: This component uses variable-length coding, dynamically assigning codes to image regions based on their information density. It builds a hierarchical image representation and uses a Dynamic Grained Coding module to choose, for each region, the granularity (and therefore the number of codes) that suits it. A budget loss keeps the overall share of each granularity in line with a desired target, so the total code length stays controlled.

- DQ-Transformer: This architecture carries out the coarse-to-fine generation process. It consists of two main modules, the Position-Transformer and the Content-Transformer, which predict the position and the content of the next code, respectively. Dedicated input layers help the model distinguish codes of different granularities, and the autoregressive model synthesizes images from coarse-grained codes (simple regions) to fine-grained codes (detailed regions).
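The two stages above can be illustrated with a toy sketch. It is not the authors' implementation: the summary does not say how the Dynamic Grained Coding module makes its decision or how the budget loss is defined, so this sketch assumes pixel variance as a stand-in for information density, fixed thresholds in place of a learned decision, and a squared gap as the budget penalty. All function names, the thresholds, and the 1/4/16 codes-per-region values are hypothetical.

```python
from statistics import pvariance

# Hypothetical codes spent on one region at each granularity (coarse -> fine).
CODES_PER_GRANULARITY = (1, 4, 16)


def region_scores(image, region):
    """Pixel variance of each region x region tile, used as a stand-in for
    information density (an assumption, not the paper's learned module)."""
    scores = {}
    for top in range(0, len(image), region):
        for left in range(0, len(image[0]), region):
            tile = [image[y][x]
                    for y in range(top, top + region)
                    for x in range(left, left + region)]
            scores[(top // region, left // region)] = pvariance(tile)
    return scores


def assign_granularity(scores, thresholds):
    """Level 0 (coarsest) below thresholds[0]; one level finer per threshold passed."""
    return {pos: sum(score >= t for t in thresholds)
            for pos, score in scores.items()}


def budget_penalty(assignment, target_ratio):
    """Squared gap between the realised share of each granularity and its target
    (an assumed form of a budget-style loss)."""
    levels = list(assignment.values())
    return sum((levels.count(k) / len(levels) - r) ** 2
               for k, r in enumerate(target_ratio))


def coarse_to_fine_order(assignment):
    """Generation order: coarse regions first, raster order within a level."""
    return sorted(assignment, key=lambda pos: (assignment[pos], pos))


# A 4x4 toy image split into four 2x2 regions.
image = [
    [5, 5, 0, 9],
    [5, 5, 9, 0],
    [1, 2, 5, 5],
    [2, 1, 5, 6],
]
scores = region_scores(image, region=2)
assignment = assign_granularity(scores, thresholds=(0.2, 1.0))
total_codes = sum(CODES_PER_GRANULARITY[level] for level in assignment.values())
order = coarse_to_fine_order(assignment)

print(assignment)
print(total_codes, budget_penalty(assignment, (0.25, 0.5, 0.25)))
print(order)
```

In this toy case the flat regions receive a single code each and only the high-variance region receives sixteen, for 22 codes in total instead of the 64 that a uniform finest-granularity encoding would use. The penalty is zero when the realised shares match the target and grows as they drift apart, which is the role the budget loss plays in keeping the allocation in check.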

Experiments and Findings

The paper reports substantial improvements over prior models in extensive experiments on the FFHQ and ImageNet datasets. The authors highlight a marked reduction in Fréchet Inception Distance (FID), a standard metric for assessing image generation quality in which lower values are better. Specifically:

- On unconditional FFHQ generation, the proposed model achieves an FID of 4.91, outperforming the previous methods it is compared against.

- For class-conditional generation on ImageNet, the method improves both FID and Inception Score (IS), indicating that the approach is effective in more than one generation setting.
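For reference, the standard definition of FID is given below. It is the commonly used formula rather than something specific to this paper; the subscripts r and g (real and generated images) are notation chosen here.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

Here \mu and \Sigma are the mean and covariance of Inception network features computed over each image set. Identical feature statistics give an FID of 0, so a lower score means the generated images are statistically closer to the real ones.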

The comparison with other models, such as VQGAN, indicates that dynamically assigning codes improves both reconstruction efficiency and quality. The experiments show a favorable trade-off between code length and quality, consistent with the adaptive approach reducing redundancy in sparse regions while preserving detail in dense ones.
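The code-length side of this trade-off can be made concrete with a small worked calculation. The numbers are hypothetical and chosen only for illustration: N regions, granularity k spending n_k codes per region, and a fraction r_k of regions assigned to granularity k.

```latex
L = N \sum_{k} r_k \, n_k ,
\qquad
N = 64,\; (n_1, n_2, n_3) = (1, 4, 16),\; (r_1, r_2, r_3) = (0.5, 0.25, 0.25)
\;\Rightarrow\;
L = 64 \,(0.5 + 1 + 4) = 352
```

Under these assumed values, a fixed-length encoding at the finest granularity would need 64 x 16 = 1024 codes, while the dynamic allocation uses 352 and still spends 16 codes on each of the quarter of regions judged most detailed.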

Implications and Future Directions

This research is a step towards efficient, high-quality autoregressive image generation. Variable-length coding may also benefit approaches beyond autoregression, particularly models based on discrete diffusion and bidirectional generation. The demonstrated improvements in speed and quality are relevant to applications that require diverse, large-scale image generation or reconstruction.

Future work could extend dynamic coding to other kinds of generative models, evaluate how well the framework adapts to them, and look for further efficiency gains that benefit later image models. Adaptive coding strategies may also prove useful for pretraining vision transformers and in related fields.