Disentangling Task Recognition and Task Learning in LLMs

Introduction to In-Context Learning in LLMs

In-context learning (ICL) lets LLMs adapt to a new task from a few example pairs placed in the prompt. This paper, led by researchers from Princeton University, examines how LLMs perform ICL by separating two mechanisms: task recognition (TR) and task learning (TL). TR is the ability to identify the task and apply pre-trained priors without explicit label guidance. TL is the ability to learn new input-label mappings from the demonstrations.

Experimental Approach and Findings

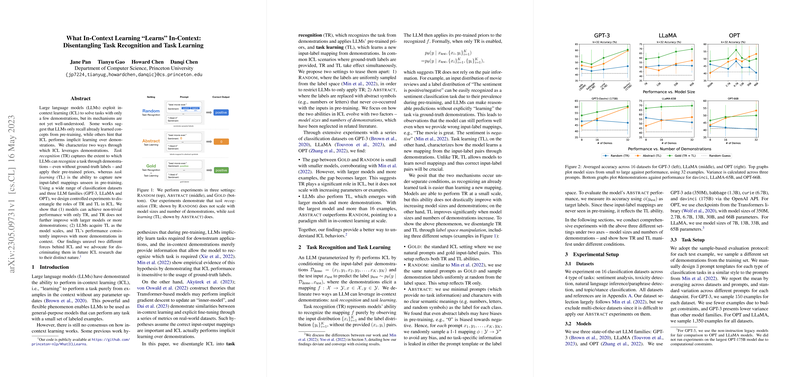

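The team designed controlled experiments that manipulate the label space of classification datasets, and compared models from three LLM families: GPT-3, LLaMA, and OPT. Two settings separate the mechanisms. Demonstrations with random labels isolate TR, because the labels no longer carry a usable input-label mapping and the model can only rely on recognizing the task. Demonstrations with abstract, semantically void labels isolate TL, because the labels give no hint about the task and the mapping has to be learned from the examples. The team then analyzed how each mechanism changes with model size and with the number of demonstrations.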
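The label manipulations described above can be sketched in a few lines. This is a minimal illustration, not the paper's code: the function names `relabel` and `format_prompt`, the `Review:`/`Sentiment:` template, the digit symbols in `ABSTRACT_SYMBOLS`, and the sentiment examples are all assumptions made for the example. It also includes an unmodified "gold" setting as a reference point, and it assumes the abstract setting maps each original label to one symbol consistently across demonstrations.

```python
import random

# Hypothetical pool of semantically void symbols (an assumption for this sketch).
ABSTRACT_SYMBOLS = ["0", "1", "2", "3", "4", "5"]


def relabel(demos, label_space, setting, rng):
    """Return a copy of demos (list of (text, label)) relabeled for one setting."""
    if setting == "gold":
        # Reference setting: keep the correct natural-language labels.
        return list(demos)
    if setting == "random":
        # TR setting: each label is drawn uniformly from the label space,
        # so the demonstrations carry no usable input-label mapping.
        return [(text, rng.choice(label_space)) for text, _ in demos]
    if setting == "abstract":
        # TL setting: each original label is consistently replaced by a
        # symbol with no semantic link to the task.
        symbols = rng.sample(ABSTRACT_SYMBOLS, len(label_space))
        mapping = dict(zip(label_space, symbols))
        return [(text, mapping[label]) for text, label in demos]
    raise ValueError(f"unknown setting: {setting}")


def format_prompt(demos, query):
    """Join demonstrations and the query using a made-up prompt template."""
    blocks = [f"Review: {text}\nSentiment: {label}\n" for text, label in demos]
    blocks.append(f"Review: {query}\nSentiment:")
    return "\n".join(blocks)


if __name__ == "__main__":
    demos = [
        ("great film", "positive"),
        ("dull plot", "negative"),
        ("loved it", "positive"),
    ]
    label_space = ["positive", "negative"]
    rng = random.Random(0)  # fixed seed so the relabeling is reproducible
    for setting in ("gold", "random", "abstract"):
        print(f"--- {setting} ---")
        print(format_prompt(relabel(demos, label_space, setting, rng), "not bad"))
```

Only the labels change between settings; the inputs and the prompt format stay fixed, so any difference in accuracy can be attributed to what the labels convey.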

The results show that TR accounts for a substantial share of LLM performance at every scale, but it does not improve with larger models or additional demonstrations. TL, by contrast, emerges with larger models and larger demonstration sets. In the abstract-label setting, larger models given more examples significantly outperform smaller ones, which indicates that they learn the task from the demonstrations rather than merely recognizing it.

Theoretical and Practical Implications

This analysis shows that LLMs adapt to new tasks through two distinct mechanisms. On one side, TR draws on pre-training to recognize a task and apply known patterns, which reflects how much LLMs rely on prior knowledge. On the other, the emergence of TL at larger scales shows that these models can also take in new information from the prompt and go beyond what they acquired during pre-training.

Practically, this distinction informs how LLMs are chosen and deployed for specific tasks. Smaller models may suffice for tasks closely aligned with pre-trained capabilities, whereas novel tasks, which require genuine learning from examples, call for larger models and more demonstrations.

Forward Look

The findings argue for interpreting ICL with both model size and the number of demonstrations in mind. Future research could investigate the threshold at which TL becomes prominent, the role of domain specificity in the effectiveness of TL and TR, and whether the analysis extends beyond classification to a broader range of tasks. The paper thus clarifies what ICL actually learns from demonstrations and offers guidance for using these models efficiently and effectively in diverse settings.