C-Eval: Assessing Foundation Models in a Chinese Context

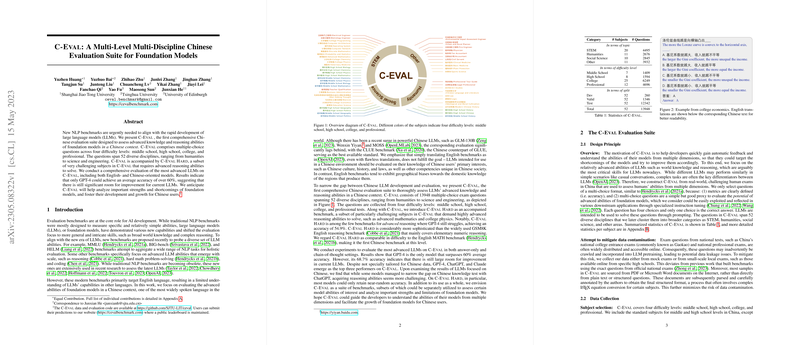

The paper presents C-Eval, the first comprehensive Chinese evaluation suite for large language models (LLMs). Developed in response to the rapid growth in LLM capabilities, C-Eval evaluates advanced knowledge and reasoning skills within a Chinese context. Its questions span 52 disciplines across four difficulty levels: middle school, high school, college, and professional. The suite also includes C-Eval Hard, a subset of particularly challenging subjects intended to test the limits of advanced reasoning abilities.

Benchmark Composition and Creation

C-Eval is notable for its breadth: it consists of 13,948 multiple-choice questions drawn from a wide array of domains, including humanities, science, and engineering. The benchmark aims to reflect the real-world complexity and depth of Chinese culture and society, something that simply translating existing English benchmarks does not adequately capture. C-Eval therefore emphasizes LLM performance on topics particularly relevant to Chinese users, such as local history, culture, and societal issues.
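To make the multiple-choice format concrete, here is a minimal sketch of how one question might be represented and rendered as a prompt. The class name `MultipleChoiceQuestion`, its field names, the `format_prompt` helper, and the prompt layout are all hypothetical illustrations, not the format used by the C-Eval authors, and the sample question is invented rather than taken from the benchmark.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class MultipleChoiceQuestion:
    # All field names are assumptions made for this sketch.
    subject: str             # e.g. a discipline name
    level: str               # e.g. "middle school", "high school", "college", "professional"
    question: str            # the question text
    choices: Dict[str, str]  # option label -> option text
    answer: str              # label of the correct option


def format_prompt(item: MultipleChoiceQuestion) -> str:
    """Render a question as plain text with labelled options (hypothetical layout)."""
    lines = [item.question]
    for label in sorted(item.choices):
        lines.append(f"{label}. {item.choices[label]}")
    lines.append("Answer:")
    return "\n".join(lines)


# Invented example item, used only to show the structure.
example = MultipleChoiceQuestion(
    subject="geography",
    level="middle school",
    question="Which city is the capital of China?",
    choices={"A": "Shanghai", "B": "Beijing", "C": "Guangzhou", "D": "Shenzhen"},
    answer="B",
)

print(format_prompt(example))
```

Keeping the subject and level on each item makes it straightforward to slice results later by discipline or by educational level.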

A critical aspect of development was choosing data sources that mitigate data contamination. The creators gathered questions from mock exams and local assessments, avoiding publicly available national exam questions that models might have been exposed to during training. The intent is an evaluation setting that measures genuine model competence rather than recall of previously seen questions.

Evaluation of LLMs

The authors evaluated several state-of-the-art LLMs, including GPT-4, ChatGPT, and various Chinese-oriented models. Only GPT-4 surpassed 60% average accuracy, which highlights both the benchmark's difficulty and the room for improvement in current models. The results also show that although some Chinese-oriented models, such as GLM-130B, perform competitively on tasks related to Chinese knowledge, a significant gap remains on more general reasoning tasks, as seen in their weaker performance in STEM disciplines.
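As an illustration of how accuracy figures like these can be computed, the following sketch scores predicted option labels against gold labels, both per category and overall. The function names, the record layout, and the toy records are assumptions made for this example; the overall figure here is a simple question-level average, which may differ from the averaging scheme used in the paper.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record is (category, predicted_label, gold_label); this layout is hypothetical.
Record = Tuple[str, str, str]


def accuracy_by_category(records: Iterable[Record]) -> Dict[str, float]:
    """Return the fraction of correct predictions within each category."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for category, predicted, gold in records:
        total[category] += 1
        if predicted == gold:
            correct[category] += 1
    return {category: correct[category] / total[category] for category in total}


def average_accuracy(records: Iterable[Record]) -> float:
    """Return the fraction of correct predictions over all questions."""
    records = list(records)
    if not records:
        raise ValueError("no records to score")
    hits = sum(1 for _, predicted, gold in records if predicted == gold)
    return hits / len(records)


# Toy records, invented for illustration only.
toy_records = [
    ("STEM", "A", "A"),
    ("STEM", "B", "C"),
    ("Humanities", "D", "D"),
    ("Humanities", "A", "A"),
]

per_category = accuracy_by_category(toy_records)
overall = average_accuracy(toy_records)
print(per_category, overall)
```

Reporting per-category scores alongside the overall average is what makes a gap such as weaker STEM performance visible.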

Implications and Future Directions

C-Eval has two main implications for AI and natural language processing. First, it provides a rigorous tool for evaluating LLM capabilities in a non-English language, an area that has been comparatively overlooked because evaluation has largely focused on English-language performance. Second, by exposing deficiencies across domains, C-Eval helps researchers and developers understand where current models fall short and prioritize future improvements.

Looking forward, C-Eval serves as a call to action for the development and refinement of foundation models in diverse linguistic contexts. This benchmark not only aids in identifying the strengths and weaknesses of current LLMs but also encourages a contextual understanding of AI capabilities. Future iterations of such benchmarks may further explore additional languages and dialects or incorporate evaluation metrics beyond accuracy, such as robustness and ethical considerations, thereby broadening the scope and utility of these assessment tools.

The C-Eval initiative is a significant step toward inclusive AI development and toward LLMs capable of serving global audiences. The paper lays a foundation for further region-specific evaluations, promoting more equitable advances in AI technologies worldwide.