Enhancing Relation Extraction with GPT-RE: In-context Learning and Task-Aware Demonstrations

Introduction to GPT-RE for Relation Extraction

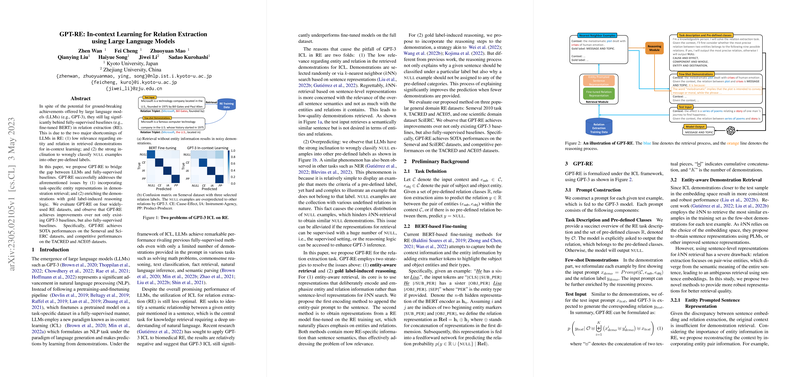

The research presents GPT-RE, an approach to the challenges that large language models (LLMs) such as GPT-3 face in relation extraction (RE). Although LLMs perform well on many NLP tasks through in-context learning (ICL), their RE performance has remained suboptimal for two main reasons: the retrieved demonstrations have low relevance to the entities and relation in question, and the demonstrations offer no explanation of their input-label mappings.

Key Innovations in GPT-RE

GPT-RE introduces two significant improvements to overcome the limitations mentioned above:

- Task-Aware Demonstration Retrieval: Rather than retrieving demonstrations by overall sentence similarity alone, this method retrieves demonstrations that are relevant to the specific entities and relation of interest, so the examples used for ICL are better matched to the query.

- Gold Label-Induced Reasoning: Each demonstration is enriched with reasoning logic that is consistent with its gold label, so the model sees why the input maps to that label, not only the label itself, which supports more accurate relation predictions.
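The two ideas above can be illustrated with a minimal sketch. It is not the authors' implementation: the prompt templates, the function names, the toy bag-of-words similarity (a stand-in for a real sentence encoder), and the example sentences and reasoning strings are all assumptions made for illustration. No LLM is called; the code only shows how demonstrations might be selected and assembled.

```python
import math
import re
from collections import Counter

def entity_prompt(sentence, head, tail):
    """Make the entity pair explicit before embedding (assumed template)."""
    return f"The relation between '{head}' and '{tail}' in the context: {sentence}"

def embed(text):
    """Toy bag-of-words vector; a stand-in for a real sentence encoder."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, pool, k=2):
    """Return the k pool examples most similar to the entity-aware query."""
    q = embed(entity_prompt(query["sentence"], query["head"], query["tail"]))
    scored = [
        (cosine(q, embed(entity_prompt(d["sentence"], d["head"], d["tail"]))), d)
        for d in pool
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]

def reasoning_request(demo):
    """Prompt asking an LLM to explain a known gold label (assumed wording)."""
    return (
        f"What are the clues that lead to the relation between '{demo['head']}' "
        f"and '{demo['tail']}' being {demo['label']} in the sentence: {demo['sentence']}"
    )

def build_prompt(query, demos):
    """Assemble demonstrations (with label and reasoning) followed by the query."""
    blocks = [
        f"Context: {d['sentence']}\n"
        f"Given the context, the relation between '{d['head']}' and '{d['tail']}' is {d['label']}.\n"
        f"Reasoning: {d['reasoning']}"
        for d in demos
    ]
    blocks.append(
        f"Context: {query['sentence']}\n"
        f"Given the context, the relation between '{query['head']}' and '{query['tail']}' is"
    )
    return "\n\n".join(blocks)

# Toy labeled pool; sentences and reasoning strings are invented for illustration.
POOL = [
    {"sentence": "The engine is part of the car.", "head": "engine", "tail": "car",
     "label": "Component-Whole",
     "reasoning": "The phrase 'is part of' marks the engine as a component of the car."},
    {"sentence": "The flood was caused by heavy rain.", "head": "flood", "tail": "heavy rain",
     "label": "Cause-Effect",
     "reasoning": "The phrase 'was caused by' marks heavy rain as the cause of the flood."},
    {"sentence": "The letter was placed in the envelope.", "head": "letter", "tail": "envelope",
     "label": "Content-Container",
     "reasoning": "The phrase 'placed in' marks the envelope as holding the letter."},
]
QUERY = {"sentence": "The wheel is part of the bicycle.", "head": "wheel", "tail": "bicycle"}

if __name__ == "__main__":
    print(build_prompt(QUERY, retrieve(QUERY, POOL, k=2)))
```

In this sketch the `reasoning` field is filled in by hand. In a real pipeline, a string like the one returned by `reasoning_request` would presumably be sent to the LLM once per retrieved demonstration, and the returned explanation stored alongside the gold label before the final prompt is built.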

Empirical Results

GPT-RE is evaluated on four widely used RE datasets: SemEval, SciERC, TACRED, and ACE05. It improves over existing GPT-3 baselines and over fully supervised baselines, achieving state-of-the-art (SOTA) performance on SemEval and SciERC and competitive results on TACRED and ACE05.

Theoretical and Practical Implications

Task-aware demonstration retrieval and gold label-induced reasoning represent a significant step forward in applying LLMs like GPT-3 to RE. These strategies address the specific shortcomings of ICL for RE, and they also suggest a more general framework that could inform future work on applying LLMs to other NLP tasks. From a practical standpoint, improving LLM performance on specialized tasks like RE without extensive dataset-specific fine-tuning of the LLM itself offers an efficient path toward more versatile and robust NLP systems.

Future Directions

The success of GPT-RE opens several avenues for future research, including exploration into additional mechanisms for improving the alignment between demonstrations and target tasks, and further examination of the limitations of LLMs in tasks requiring deep domain knowledge or complex reasoning. Moreover, the approach of integrating task-specific knowledge and reasoning into ICL could be adapted and extended to other areas beyond RE, potentially unlocking new capabilities in LLMs.

Conclusion

GPT-RE showcases a novel and effective approach to leveraging the strengths of LLMs in the domain of relation extraction, through innovations in demonstration retrieval and the incorporation of reasoning logic. Its successes invite further integration of task-specific knowledge into the in-context learning paradigm, promising advancements in both the theory and practice of generative AI in NLP.