Enhancing Chain-of-Thoughts Prompting with Iterative Bootstrapping in LLMs

The paper "Enhancing Chain-of-Thoughts Prompting with Iterative Bootstrapping in LLMs" by Jiashuo Sun et al. introduces Iterative Chain-of-Thought (Iter-CoT) prompting, an approach aimed at improving reasoning in large language models (LLMs). The work addresses two challenges in existing CoT prompting techniques: errors in the generated reasoning chains, and the selection of exemplars that vary widely in difficulty. Both can impede LLM performance on reasoning tasks.

Overview of the Iter-CoT Approach

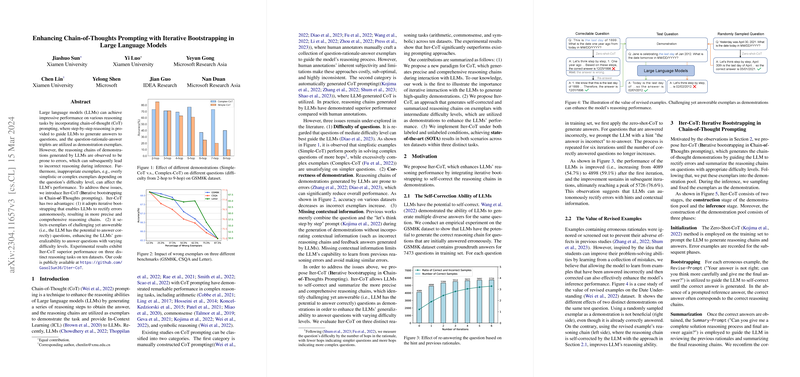

Iter-CoT is designed with two primary enhancements: iterative error correction (bootstrapping) and selection of appropriate exemplars. The bootstrapping aspect enables LLMs to autonomously rectify mistakes in reasoning chains, enhancing accuracy and consistency. This process leverages hints and contextual information from previous errors to guide the model towards better conclusions.
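The bootstrapping loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: `generate` and `revise` are hypothetical stand-ins for LLM calls, the hint wording and the `max_revisions` cap are invented for the example, and only the setting where a reference answer is available is covered.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# (reasoning chain, extracted answer). The callables that return this are
# hypothetical placeholders for real LLM calls.
Generation = Tuple[str, str]


@dataclass
class BootstrapResult:
    chain: str       # final reasoning chain
    answer: str      # answer extracted from the final chain
    revisions: int   # number of revision rounds that were needed
    correct: bool    # whether the final answer matches the reference


def bootstrap(
    question: str,
    reference: str,
    generate: Callable[[str], Generation],
    revise: Callable[[str, str, str], Generation],
    max_revisions: int = 3,  # assumed cap, not taken from the paper
) -> BootstrapResult:
    """Generate a chain, then revise it with hints until it is correct."""
    chain, answer = generate(question)
    revisions = 0
    while answer != reference and revisions < max_revisions:
        # Assumed hint wording: tell the model its answer was wrong and
        # pass the previous chain back as context for the next attempt.
        hint = f"The answer {answer} is incorrect. Re-check each step and try again."
        chain, answer = revise(question, chain, hint)
        revisions += 1
    return BootstrapResult(chain, answer, revisions, answer == reference)


# Toy stand-ins so the sketch runs without a model.
def toy_generate(question: str) -> Generation:
    return "3 + 4 = 8, so the answer is 8.", "8"


def toy_revise(question: str, previous_chain: str, hint: str) -> Generation:
    return "3 + 4 = 7, so the answer is 7.", "7"


result = bootstrap("What is 3 + 4?", "7", toy_generate, toy_revise)
print(result.revisions, result.correct)  # prints: 1 True
```

The number of revisions a question needed is kept in the result because it gives a rough, model-specific signal of how hard that question was.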

The second enhancement focuses on exemplar selection. Iter-CoT deliberately selects questions that are challenging yet answerable, with the aim of improving generalization across questions of various difficulty levels. The underlying assumption is that exemplars of mid-range difficulty make the most useful demonstrations, since overly simple or overly complex ones contribute less to the model's in-context reasoning.
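One way to make "challenging yet answerable" concrete is sketched below. It assumes, for illustration only, that each candidate question comes with a record of whether the model eventually answered it correctly and how many correction rounds that took; the `Record` type, the revision thresholds, and the random sampling are all hypothetical choices rather than details confirmed by this summary.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Record:
    question: str
    chain: str       # final reasoning chain for the question
    revisions: int   # correction rounds needed (0 = right on first try)
    correct: bool    # whether the final answer was correct


def select_exemplars(
    records: List[Record],
    k: int,
    min_revisions: int = 1,  # assumed: 0 revisions means "too easy"
    max_revisions: int = 3,  # assumed upper bound on difficulty
    seed: int = 0,
) -> List[Record]:
    """Keep questions that were hard at first but ended up answered correctly."""
    pool = [
        r for r in records
        if r.correct and min_revisions <= r.revisions <= max_revisions
    ]
    rng = random.Random(seed)
    return rng.sample(pool, min(k, len(pool)))


records = [
    Record("q1", "chain 1", revisions=0, correct=True),   # too easy
    Record("q2", "chain 2", revisions=1, correct=True),   # kept
    Record("q3", "chain 3", revisions=3, correct=False),  # never solved
    Record("q4", "chain 4", revisions=2, correct=True),   # kept
]
chosen = select_exemplars(records, k=2)
print(sorted(r.question for r in chosen))  # prints: ['q2', 'q4']
```

The selected chains would then be placed in the prompt as demonstrations ahead of the test question.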

Key Results and Contributions

The experimental results support the efficacy of Iter-CoT. Applied to ten datasets spanning arithmetic, commonsense, and symbolic reasoning tasks, Iter-CoT outperformed existing methods. The paper reports state-of-the-art results for Iter-CoT both with and without access to labels. Even without relying on annotations or labels, the Iter-CoT(w/o label) variant exhibited competitive performance, highlighting its robustness.

One significant observation was Iter-CoT's superior performance compared to approaches requiring manual annotations. Across multiple reasoning datasets, including GSM8K and Letter Concatenation, Iter-CoT demonstrated notable accuracy improvements, particularly when combined with Self-Consistency (SC). SC samples several reasoning chains for the same question and then applies majority voting over their final answers, so the answer reached most often is the one returned.
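The majority-voting step of Self-Consistency is simple enough to show directly. The sketch below is illustrative: `sample_answer` is a hypothetical stand-in for one sampled LLM generation reduced to its final answer, and the canned answers exist only to make the example runnable.

```python
from collections import Counter
from typing import Callable, Sequence


def majority_vote(answers: Sequence[str]) -> str:
    """Return the most frequent answer; ties go to the one seen first."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    return Counter(answers).most_common(1)[0][0]


def self_consistent_answer(
    question: str,
    sample_answer: Callable[[str], str],  # hypothetical: one sampled answer
    n_samples: int = 5,
) -> str:
    """Sample several answers for the same question and vote on them."""
    return majority_vote([sample_answer(question) for _ in range(n_samples)])


# Toy sampler that replays canned answers instead of calling a model.
_canned = iter(["18", "20", "18", "17", "18"])


def toy_sampler(question: str) -> str:
    return next(_canned)


final = self_consistent_answer("toy question", toy_sampler, n_samples=5)
print(final)  # prints: 18
```

In practice the answers would need to be normalized (for example, stripping units or whitespace) before counting, since the vote compares strings exactly.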

Implications and Speculations

The practical implications of Iter-CoT are substantial, especially in domains where accurate reasoning is crucial, such as automated theorem proving, scientific research, and educational tools. By enhancing LLM resilience to errors and refining exemplar selection, Iter-CoT fosters more reliable reasoning capabilities, which could be pivotal in formalizing AI's role in knowledge processing and discovery.

Theoretically, Iter-CoT underscores the importance of iterative learning and contextual adaptation in AI. The approach aligns with the broader AI trend towards self-improvement mechanisms, prompting speculation on future advancements wherein LLMs might leverage iterative refinement to overcome evolving complexities in dynamic reasoning environments.

Conclusion

Overall, the paper contributes valuable insights into CoT prompting techniques, advancing LLM reasoning through systematic error correction and exemplar selection. Iter-CoT represents a forward-looking strategy that may guide future research towards increasingly autonomous improvement methods. Continued exploration in this direction could yield further gains in AI's ability to perform complex reasoning tasks reliably and efficiently.