Analyzing "LaMP: When LLMs Meet Personalization"

The paper "LaMP: When LLMs Meet Personalization" introduces a novel benchmark for evaluating the personalization capabilities of large language models (LLMs). The benchmark, LaMP, is significant because it targets an essential and challenging capability: tailoring generated text to an individual user's needs. Many existing benchmarks overlook this because they measure generic, user-agnostic model performance.

Benchmark Overview

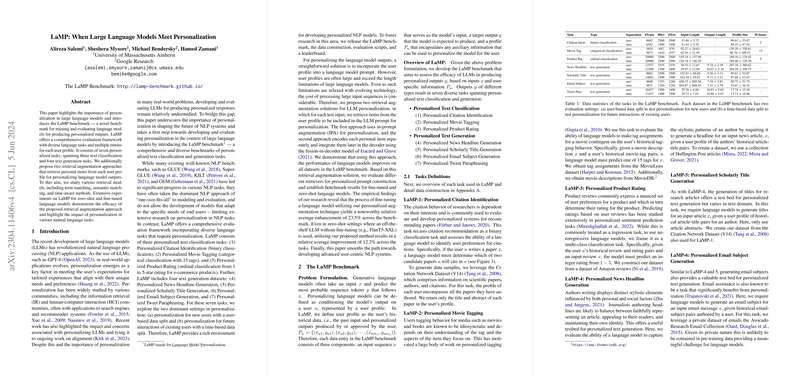

LaMP comprises seven personalized tasks, split into text classification and text generation. The classification tasks are personalized citation identification, movie tagging, and product rating. The generation tasks are personalized news headline generation, scholarly title generation, email subject generation, and tweet paraphrasing. Each example pairs a task input with a profile of that user's past documents, so the tasks measure how well an LLM can tailor its output to user-specific data and preferences. This departs from traditional NLP benchmarks, which are predominantly generic and rarely account for user-specific adaptation.
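To make the per-user structure concrete, here is a minimal Python sketch of how a single personalized example might be represented. The class and field names (`ProfileItem`, `PersonalizedExample`, `task_input`, `target`, `profile`) are hypothetical. They illustrate the idea of "input plus user profile" and do not reproduce the schema of the released LaMP files.

```python
from dataclasses import dataclass, field


@dataclass
class ProfileItem:
    text: str       # one past document by this user, e.g. a paper title or a product review
    date: str = ""  # optional ISO-8601 date; only recency-based retrieval needs it


@dataclass
class PersonalizedExample:
    user_id: str
    task_input: str  # what the model is asked to do for this user
    target: str      # gold label (classification) or reference text (generation)
    profile: list[ProfileItem] = field(default_factory=list)  # the user's history


# Hypothetical example for a headline-generation style task.
example = PersonalizedExample(
    user_id="u42",
    task_input="Generate a headline for this article: City adds 20 km of bike lanes.",
    target="Bike lanes expand across the city",
    profile=[
        ProfileItem("Council approves new transit budget", "2021-06-01"),
        ProfileItem("Local bakery wins regional award", "2022-02-14"),
    ],
)
print(len(example.profile))
```

In this sketch, classification and generation tasks share the same shape; only the kind of `target` differs.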

Methodology and Experimentation

The paper studies two methods for integrating user profiles with LLMs: retrieval-based in-prompt augmentation (IPA), which inserts retrieved profile items directly into the prompt, and fusion-in-decoder (FiD), which encodes each retrieved item together with the input separately and lets the decoder combine them. Both were tested with several retrievers for choosing which profile items to use: term matching (BM25), semantic matching (Contriever), and a recency-based retriever that selects the user's most recent items. Comparing them highlights how much the choice of relevant or recent user data matters for generating personalized content.
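The retrieve-then-augment pipeline can be sketched in Python under several assumptions. A plain-Python Okapi BM25 over whitespace tokens stands in for the BM25 retriever, `recency_rank` sorts profile items by ISO-8601 date, and the hypothetical helpers `ipa_prompt` and `fid_inputs` show only how the two integration strategies shape the model input. The prompt template is illustrative rather than the paper's task-specific prompts, and no model is actually called.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercased whitespace tokenization (a simplification; real BM25 setups use an analyzer).
    return text.lower().split()


def bm25_rank(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[int]:
    """Return the indices of `docs`, best Okapi BM25 match for `query` first."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n if n else 0.0
    # Document frequency: in how many profile items each term occurs.
    df = Counter(t for d in tokenized for t in set(d))
    q_terms = tokenize(query)

    def score(doc: list[str]) -> float:
        tf = Counter(doc)
        total = 0.0
        for t in q_terms:
            if tf[t] == 0:
                continue
            idf = math.log(1 + (n - df[t] + 0.5) / (df[t] + 0.5))
            norm = tf[t] + k1 * (1 - b + b * len(doc) / avgdl)
            total += idf * tf[t] * (k1 + 1) / norm
        return total

    scores = [score(d) for d in tokenized]
    return sorted(range(n), key=lambda i: scores[i], reverse=True)


def recency_rank(dates: list[str]) -> list[int]:
    # ISO-8601 dates (YYYY-MM-DD) sort chronologically as strings; newest first.
    return sorted(range(len(dates)), key=lambda i: dates[i], reverse=True)


def ipa_prompt(task_input: str, profile: list[str], ranking: list[int], k: int = 2) -> str:
    # In-prompt augmentation: put the top-k retrieved items into a single prompt.
    context = "\n".join(f"- {profile[i]}" for i in ranking[:k])
    return f"Past items from this user:\n{context}\n\n{task_input}"


def fid_inputs(task_input: str, profile: list[str], ranking: list[int], k: int = 2) -> list[str]:
    # Fusion-in-decoder: one encoder input per retrieved item (input + item);
    # the decoder, not shown here, attends over all k encodings together.
    return [f"{task_input}\n{profile[i]}" for i in ranking[:k]]


profile = [
    "Dense retrieval for open-domain question answering",
    "Sourdough fermentation at home",
    "Personalized retrieval with user histories",
]
dates = ["2020-01-05", "2022-03-01", "2019-11-30"]
task = "Generate a title for a paper on personalized dense retrieval"

ranking = bm25_rank(task, profile)
print(ipa_prompt(task, profile, ranking))
print(fid_inputs(task, profile, recency_rank(dates)))
```

The structural difference shows up in the return types: IPA must fit all k items into one context, whereas FiD encodes each input-item pair independently and combines them only in the decoder.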

Key numerical results from the paper demonstrate the effectiveness of these personalization strategies. The proposed retrieval-augmented techniques substantially improve on the baseline results across the LaMP tasks: by 23.5% in the fine-tuned setting and by 12.2% in the zero-shot setting. This underscores the benefit of integrating user-profile data into LLM prompts, even in the zero-shot setting, where the model is used as-is without task-specific training.
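Figures like these are typically relative improvements over a baseline. The following Python sketch shows one plausible way to compute such a number, assuming per-task relative gains are averaged without weighting; the paper's exact aggregation is not described here, so treat this convention as an assumption. The helper names are hypothetical, and the scores in the usage are invented purely to exercise the code. Because LaMP mixes higher-is-better metrics (such as accuracy or ROUGE) with error metrics (such as MAE or RMSE for product rating), the sketch flips the sign for the latter.

```python
def relative_improvement(baseline: float, personalized: float, higher_is_better: bool = True) -> float:
    """Percent improvement of `personalized` over `baseline`.

    For error metrics (lower is better) the difference is flipped so that a
    positive result always means the personalized model did better.
    """
    if baseline == 0:
        raise ValueError("relative improvement is undefined for a zero baseline")
    delta = personalized - baseline if higher_is_better else baseline - personalized
    return 100.0 * delta / abs(baseline)


def mean_improvement(results: list[tuple[float, float, bool]]) -> float:
    # Unweighted mean of per-task relative improvements (an assumed convention).
    gains = [relative_improvement(b, p, hib) for b, p, hib in results]
    return sum(gains) / len(gains)


# Hypothetical scores for illustration only, NOT numbers from the paper:
# (baseline, personalized, higher_is_better) for an accuracy-style and an MAE-style task.
scores = [(0.50, 0.60, True), (0.40, 0.30, False)]
print(f"{mean_improvement(scores):.1f}%")
```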

Implications for Future Research

The paper argues that personalization is a pivotal step for LLMs toward more user-centric NLP applications. Its implications extend to many AI applications where personalized interaction is critical, such as virtual assistants and content recommenders. The results also point to the need for further work on efficient strategies for generating dynamic personalized prompts and on tailoring retrieval mechanisms beyond the datasets and methods examined.

Future work in this area might focus on optimizing the retrieval models and on alternative ways of integrating comprehensive user profiles without exceeding the model's limited context window. New metrics could also be developed to evaluate personalized text generation, accounting for user preferences in a more nuanced way than current metrics allow.
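The context-window constraint can be made concrete with a simple baseline: greedily keep the highest-ranked profile items until a token budget is used up. This Python sketch is hypothetical and not taken from the paper. It approximates token counts by whitespace-separated words, whereas a real system would count tokens with the model's own tokenizer.

```python
from typing import Callable


def pack_profile(
    ranked_items: list[str],
    budget: int,
    count_tokens: Callable[[str], int] = lambda s: len(s.split()),
) -> list[str]:
    """Greedily keep the highest-ranked profile items that fit within `budget` tokens."""
    packed: list[str] = []
    used = 0
    for item in ranked_items:
        cost = count_tokens(item)
        if used + cost > budget:
            continue  # too long for the remaining space; a shorter, lower-ranked item may still fit
        packed.append(item)
        used += cost
    return packed


ranked = [
    "Personalized retrieval with user histories",
    "A long survey of dense, sparse, and hybrid retrieval methods for question answering",
    "Dense retrieval basics",
]
print(pack_profile(ranked, budget=10))
```

Skipping an item that overflows, rather than stopping at it, lets shorter lower-ranked items fill the leftover space; stopping instead would keep strict rank order at the cost of unused budget.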

Conclusion

The LaMP benchmark and its accompanying experiments provide a detailed framework for evaluating the personalization capabilities of LLMs. This work is poised to guide future research and practical implementations toward more adaptable LLMs that respond effectively to individual users, helping to steer the next wave of innovation in natural language processing.