InstructUIE: Multi-task Instruction Tuning for Unified Information Extraction

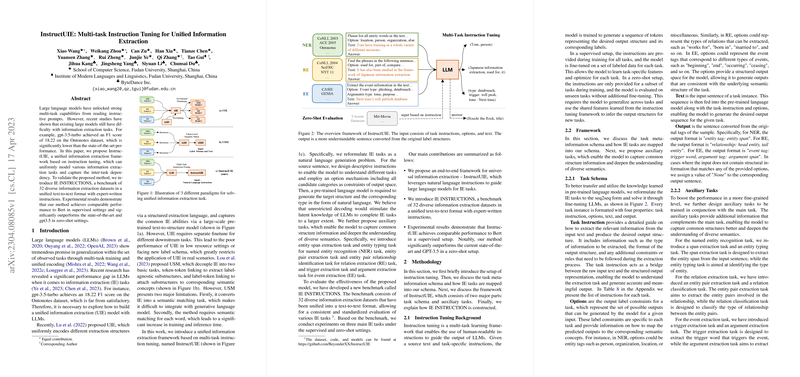

The paper presents InstructUIE, a novel framework that leverages instruction tuning to create a unified information extraction (IE) model. This model aims to address the limitations of LLMs in IE tasks, where models like gpt-3.5-turbo underperform significantly compared to state-of-the-art benchmarks. The proposed InstructUIE framework reformulates IE tasks as a text-to-text problem and integrates auxiliary tasks to enhance its structural understanding.

Methodology

InstructUIE utilizes instruction tuning, a multi-task learning framework, which provides human-readable prompts to guide LLM outputs. The framework involves:

- Task Schema: Each task instance in InstructUIE is composed of detailed task instructions, options (output constraints), input text, and required textual outputs. Tasks are transformed into sequence-to-sequence (seq2seq) formats.

- Auxiliary Tasks: Complementary tasks such as entity span extraction and entity typing for NER, and entity pair extraction for RE, improve the model’s understanding of task-specific structures.

- IE INSTRUCTIONS Benchmark: A comprehensive benchmark of 32 datasets in standardized text-to-text formats for evaluating IE tasks.

Experimental Results

The experimental evaluation of InstructUIE covered supervised and zero-shot settings on numerous datasets across NER, RE, and EE tasks.

Supervised Settings

- Named Entity Recognition (NER): InstructUIE outperformed Bert on 17 out of 20 datasets, achieving an average F1 score of 85.19%. Particularly notable was the broad twitter dataset, where it surpassed Bert by 25 points.

- Relation Extraction (RE) and Event Extraction (EE): Results indicated competitive performance, with InstructUIE achieving higher F1 scores than UIE and USM on many datasets.

Zero-shot Settings

- NER: InstructUIE excelled over USM across most datasets, with improvements ranging between 5.21% to 25.27% in Micro-F1 scores.

- RE: InstructUIE outperformed ZETT on FewRel and Wiki-ZSL datasets by a margin of over 5% in F1 scores.

Implications and Future Directions

InstructUIE demonstrates the potential for instruction-tuned LLMs in effectively handling various IE tasks with limited task-specific data. The ability of InstructUIE to utilize multi-task learning frameworks opens up new possibilities for improving generalization across unseen tasks, especially in zero-shot contexts. Future developments in AI could explore enhancing instruction sets and adapting instruction tuning for a broader spectrum of natural language processing challenges. This approach also holds promise for reducing the dependency on extensive labeled datasets, expediting the deployment of IE systems in novel domains. Overall, InstructUIE represents a substantial step forward in the pursuit of universal models for information extraction.