Responsible Reporting of AI-generated Content: The AI Usage Cards Framework

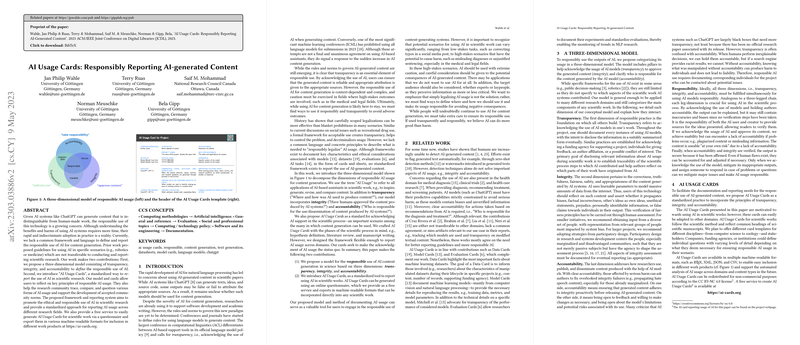

The paper "AI Usage Cards: Responsibly Reporting AI-generated Content" presents a systematic approach to the challenges posed by AI-generated content in scientific research. The authors propose a three-dimensional model for responsible AI usage and introduce AI Usage Cards, a standardized tool for reporting AI's role in producing scientific work.

Overview and Contributions

The paper identifies a gap in existing frameworks: practical guidelines are often domain-specific and do not transfer well to scientific research. To bridge this gap, the authors introduce a model based on three interconnected principles: transparency, integrity, and accountability. This model aims to guide researchers in the ethical use of AI for content generation. The paper also offers a pragmatic tool, the AI Usage Card, which documents and communicates the extent and nature of AI's involvement in research activities.

The core contribution of the paper is a structured way for researchers to reflect on the ethical aspects of their AI usage and to help develop community norms for responsible AI practices. The authors propose a standardized format for AI Usage Cards so that the cards can be integrated into research workflows across disciplines. They also provide a dedicated website where cards can be created through an interactive questionnaire, which is intended to ease broader adoption.

Analytical Model and Reporting Tool

The three-dimensional model proposed in the paper highlights:

- Transparency - It emphasizes acknowledging where and how AI is used within the research process, enabling traceability and openness concerning the contributions of AI systems.

- Integrity - This dimension involves human oversight to verify the correctness of AI-generated content and to check outputs for biases, inaccuracies, and ethical concerns.

- Accountability - Clarifying responsibility for AI-generated outputs is crucial, especially regarding complex decisions that could impact individuals and society. The authors argue for designated accountable individuals who can address potential concerns and take corrective actions if required.

The AI Usage Cards are structured around key phases of scientific work—ideation, literature review, methodology development, experimentation, writing, and presentation. This systematic breakdown allows researchers to document AI's role throughout the research lifecycle, regardless of the domain.
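To make the phase-based structure concrete, the following is a minimal sketch of how such a card might be represented and serialized. It is not the authors' schema: the class names `PhaseUsage` and `UsageCard`, every field name, and the example values are hypothetical and chosen only for illustration. The sketch ties each phase entry to the three dimensions by recording what was used (transparency), who verified it (integrity), and who is the responsible contact (accountability).

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List

# The six phases named in the text; the identifiers are illustrative.
PHASES = (
    "ideation",
    "literature_review",
    "methodology",
    "experimentation",
    "writing",
    "presentation",
)


@dataclass
class PhaseUsage:
    """AI usage in one phase (hypothetical fields, not the official format)."""
    used_ai: bool = False
    tools: List[str] = field(default_factory=list)  # transparency
    description: str = ""                           # transparency
    verified_by: str = ""                           # integrity


@dataclass
class UsageCard:
    """A simplified, hypothetical card covering all phases of a project."""
    project: str
    accountable_contact: str  # accountability
    phases: Dict[str, PhaseUsage] = field(
        default_factory=lambda: {name: PhaseUsage() for name in PHASES}
    )

    def record(self, phase: str, tools: List[str],
               description: str, verified_by: str) -> None:
        """Mark a phase as AI-assisted and store who checked the output."""
        if phase not in self.phases:
            raise ValueError(f"unknown phase: {phase}")
        self.phases[phase] = PhaseUsage(True, list(tools), description, verified_by)

    def to_json(self) -> str:
        """Serialize the whole card, including nested phase entries."""
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    # Example values are placeholders, not taken from the paper.
    card = UsageCard(project="demo-project", accountable_contact="lead@example.org")
    card.record("writing", ["example-llm"], "Paraphrased the abstract", "A. Author")
    print(card.to_json())
```

Keeping an entry for every phase, including those where no AI was used, means that a reader can distinguish "not used" from "not reported".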

Implications for the Research Community

The implications of adopting AI Usage Cards are both practical and theoretical. Practically, the cards establish a clear framework for reporting AI use and promote ethical AI use in research projects. Theoretically, they encourage a shift towards more transparent and accountable AI practices, allowing the research community to better understand the implications and limitations of AI-generated content.

The authors also discuss the importance of customizing AI Usage Cards to fit different disciplines' needs, suggesting that these cards could evolve to suit specific domains or project types, thereby ensuring their relevance and applicability over time. Furthermore, the potential for incorporating these cards into submission requirements for conferences and journals could standardize AI reporting, much like conflict of interest statements or funding acknowledgments.

Speculating on Future Developments

Future developments in AI usage reporting could entail more sophisticated methods for evaluating AI contributions in research. As AI models continue to evolve, it is plausible that frameworks like AI Usage Cards will need to accommodate more nuanced types of AI involvement. Furthermore, as machine-readable formats for these cards become more widely adopted, they may serve as valuable datasets for meta-analysis, examining trends in AI's role in research and informing policy decisions regarding AI ethics and governance.
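As a rough illustration of the meta-analysis idea, the sketch below counts how many cards in a collection report AI use in each research phase. It assumes a hypothetical JSON layout in which each card has a `phases` object whose entries carry a boolean `used_ai` flag; this layout is an assumption made for the example, not a format defined by the paper. The two sample cards are synthetic placeholders, not real data.

```python
import json
from collections import Counter
from typing import Iterable


def phase_usage_counts(card_documents: Iterable[str]) -> Counter:
    """Count, per phase, how many JSON cards report AI use.

    Assumes the hypothetical layout {"phases": {<phase>: {"used_ai": bool}}}.
    Cards or phases that lack these keys are treated as "no AI use reported".
    """
    counts: Counter = Counter()
    for raw in card_documents:
        card = json.loads(raw)
        for phase, usage in card.get("phases", {}).items():
            if usage.get("used_ai"):
                counts[phase] += 1
    return counts


if __name__ == "__main__":
    # Synthetic example cards, for illustration only.
    cards = [
        json.dumps({"phases": {"writing": {"used_ai": True},
                               "ideation": {"used_ai": False}}}),
        json.dumps({"phases": {"writing": {"used_ai": True},
                               "ideation": {"used_ai": True}}}),
    ]
    for phase, n in phase_usage_counts(cards).most_common():
        print(f"{phase}: {n} of {len(cards)} cards")
```

A real analysis would also need to handle differing card versions and missing fields more carefully than this sketch does.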

As AI systems become entrenched in research ecosystems, frameworks like AI Usage Cards may play an integral role in fostering a culture of ethical AI application, emphasizing the need for responsible contributions that enhance human creativity and scientific inquiry without compromising ethical standards.