mPLUG-2: A Modularized Multi-modal Foundation Model Across Text, Image, and Video

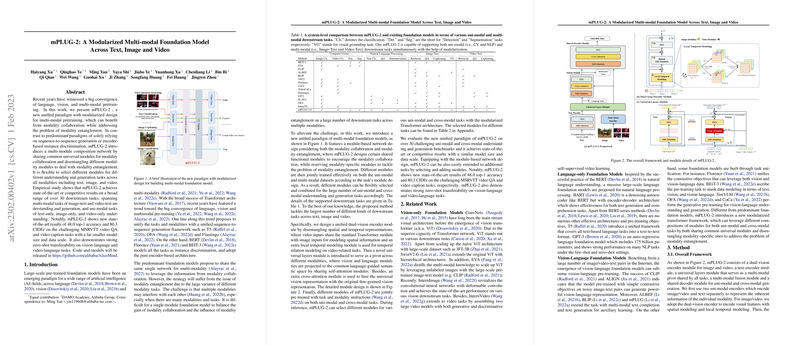

The paper introduces mPLUG-2, a multi-modal foundation model that handles text, image, and video within a unified framework. Unlike models built on monolithic architectures, mPLUG-2 is modular, which makes it easier to adapt to different tasks across modalities. Its central contribution is a modular network design intended to benefit from modality collaboration while mitigating modality entanglement.

Key Contributions

- Modular Design: mPLUG-2 combines universal modules shared across modalities with modality-specific modules. Sharing encourages collaboration between modalities, while keeping the modality-specific modules separate addresses modality entanglement, allowing the model to generalize across a wide array of tasks.

- Unified Approach: The model supports uni-modal and cross-modal applications through a shared encoder-decoder architecture, incorporating specialized components such as dual-vision encoders for images and video, and text encoders. This strengthens mPLUG-2's ability to adapt to diverse data types and objectives.

- Empirical Evaluation: Evaluated on more than 30 tasks, mPLUG-2 achieves state-of-the-art results on multiple benchmarks, including the best reported top-1 accuracy on a challenging video QA task and the best CIDEr score on a video captioning task. It does so at a smaller scale than competing models.

- Zero-shot Transferability: The paper demonstrates robust zero-shot capabilities on vision-language and video-language tasks, which suggests the model could be applied where domain-specific training data is unavailable.
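
The modular idea in the first two contributions can be illustrated with a toy sketch. It is not the authors' implementation: every name here (`text_module`, `image_module`, `video_module`, `universal_module`, `fuse`, `run`, `DIM`) is hypothetical, and the "encoders" are simple arithmetic stand-ins for neural networks. It only shows how modality-specific modules can feed one shared module, with a different subset of modules selected per task.

```python
from typing import Dict, List, Sequence

Vector = List[float]
DIM = 4  # toy feature width (an assumption for illustration only)


def text_module(tokens: Sequence[str]) -> Vector:
    """Modality-specific stand-in: bucket token lengths into DIM slots."""
    feats = [0.0] * DIM
    for i, tok in enumerate(tokens):
        feats[i % DIM] += float(len(tok))
    return feats


def image_module(pixels: Sequence[float]) -> Vector:
    """Modality-specific stand-in: pool a flat pixel list into DIM slots."""
    feats = [0.0] * DIM
    for i, p in enumerate(pixels):
        feats[i % DIM] += p
    return feats


def video_module(frames: Sequence[Sequence[float]]) -> Vector:
    """Reuse the image module per frame, then average over time."""
    per_frame = [image_module(f) for f in frames]
    return [sum(col) / len(per_frame) for col in zip(*per_frame)]


def universal_module(feats: Vector) -> Vector:
    """Shared by every modality: L1-normalise the features."""
    total = sum(abs(x) for x in feats) or 1.0
    return [x / total for x in feats]


def fuse(a: Vector, b: Vector) -> Vector:
    """Toy cross-modal fusion: element-wise mean."""
    return [(x + y) / 2.0 for x, y in zip(a, b)]


ENCODERS = {"text": text_module, "image": image_module, "video": video_module}


def run(inputs: Dict[str, object]) -> Vector:
    """Encode each supplied modality, apply the shared module, then fuse."""
    encoded = [universal_module(ENCODERS[name](value))
               for name, value in inputs.items()]
    out = encoded[0]
    for nxt in encoded[1:]:
        out = fuse(out, nxt)
    return out


# A uni-modal task uses one encoder; a cross-modal task composes two.
text_only = run({"text": ["a", "bb"]})
video_text = run({"text": ["hi"], "video": [[1.0, 2.0], [3.0, 4.0]]})
```

In this sketch the uni-modal and cross-modal calls go through the same `universal_module`, while each modality keeps its own encoder, which is the separation the paper's design aims for.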

Numerical Results and Implications

mPLUG-2's empirical results are notable: it achieves a top-1 accuracy of 48.0 on MSRVTT video QA and a CIDEr score of 80.3 on MSRVTT video captioning, both improvements over previous models. It also remains competitive on visual grounding, VQA, and image-text retrieval. Together, these results point to broad applicability at a comparatively small scale.
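
For readers unfamiliar with the metric, top-1 accuracy on a QA benchmark is the percentage of questions for which the model's single highest-ranked answer matches the reference. The following is a minimal sketch; the function name `top1_accuracy`, the sample data, and the case and whitespace normalisation are assumptions for illustration, not the benchmark's official scoring script.

```python
from typing import Sequence


def top1_accuracy(predictions: Sequence[str], answers: Sequence[str]) -> float:
    """Return the percentage of predictions that match the reference answer."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("cannot score an empty evaluation set")
    # Assumed normalisation: ignore case and surrounding whitespace.
    correct = sum(
        p.strip().lower() == a.strip().lower()
        for p, a in zip(predictions, answers)
    )
    return 100.0 * correct / len(answers)


# Made-up example: two of four answers match, so the score is 50.0.
score = top1_accuracy(["dog", "run", "two", "cat"],
                      ["dog", "walk", "two", "car"])
```

On this scale, the reported 48.0 means that just under half of the test questions were answered exactly correctly.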

Future Directions

The paper proposes further exploration into scaling mPLUG-2, expanding its modular components to handle additional modalities and tasks. Additionally, there is potential to integrate advanced attention mechanisms or incorporate external knowledge bases to enhance the contextual understanding of complex multi-modal tasks.

Conclusion

mPLUG-2 represents a significant step forward in multi-modal AI research, offering a flexible and efficient architecture that benefits from collaboration between modalities while limiting entanglement among them. By achieving state-of-the-art results on numerous tasks, it sets a precedent for future research on integrated multi-modal machine learning systems. The availability of its codebase also encourages further experimentation and validation by the research community.