Overview of "Make-An-Audio: Text-To-Audio Generation with Prompt-Enhanced Diffusion Models"

The paper, "Make-An-Audio: Text-To-Audio Generation with Prompt-Enhanced Diffusion Models," addresses text-to-audio generation with deep generative models, specifically diffusion models. It targets two main obstacles: the scarcity of large-scale, high-quality text-audio datasets, and the difficulty of modeling long, continuous audio sequences.
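To make the sequence-length problem concrete, the short Python sketch below counts how many values represent one clip at three stages: the raw waveform, a mel-spectrogram, and a compressed autoencoder latent. Every setting here (16 kHz sample rate, 10-second clip, hop size 256, 80 mel bins, and a hypothetical latent with 4 channels downsampled by a factor of 4 on each axis) is an illustrative assumption, not the paper's configuration.

```python
# Illustrative settings only; these are assumptions, not values from the paper.
SAMPLE_RATE = 16_000   # samples per second
DURATION_S = 10        # clip length in seconds
HOP_LENGTH = 256       # STFT hop size in samples
N_MELS = 80            # number of mel-frequency bins
LATENT_CHANNELS = 4    # hypothetical autoencoder latent channels
DOWNSAMPLE = 4         # hypothetical downsampling factor on each spectrogram axis


def sequence_sizes(sample_rate, duration_s, hop, n_mels, latent_ch, down):
    """Return element counts for one clip at each representation stage."""
    n_samples = sample_rate * duration_s
    # Frame count of a centered STFT (signal padded at both ends).
    n_frames = 1 + n_samples // hop
    latent_time = n_frames // down
    return {
        "waveform_samples": n_samples,
        "mel_frames": n_frames,
        "mel_values": n_mels * n_frames,
        "latent_time_steps": latent_time,
        "latent_values": latent_ch * (n_mels // down) * latent_time,
    }


sizes = sequence_sizes(SAMPLE_RATE, DURATION_S, HOP_LENGTH,
                       N_MELS, LATENT_CHANNELS, DOWNSAMPLE)
for name, value in sizes.items():
    print(f"{name:>18}: {value:,}")
```

Under these assumptions the time axis shrinks from 160,000 waveform samples to 626 mel frames and 156 latent positions, which is why predicting compressed spectral representations is far cheaper than modeling the waveform directly.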

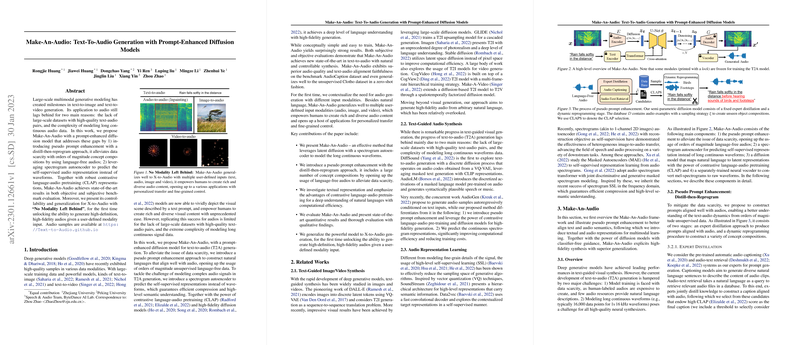

The authors propose Make-An-Audio, a prompt-enhanced diffusion model. To mitigate the lack of paired text-audio training data, it uses a pseudo prompt enhancement strategy based on a distill-then-reprogram approach that derives prompts from unlabeled audio. The model also employs a spectrogram autoencoder, so the diffusion model predicts self-supervised spectral representations instead of raw waveform data, which improves computational efficiency and helps the model capture semantic content.
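The "reprogram" half of distill-then-reprogram can be pictured as composing new training pairs from existing pseudo-labeled clips. The Python sketch below assumes the "distill" stage has already produced a caption for each unlabeled clip, then concatenates randomly chosen clips and joins their captions with sequential templates. The `reprogram` function, the template strings, and the toy pool are hypothetical illustrations, not the paper's implementation.

```python
import random

import numpy as np

# Hypothetical templates for joining captions of clips played one after another.
# They are illustrative; Make-An-Audio's exact templates are not reproduced here.
SEQUENTIAL_TEMPLATES = ["{a} before {b}", "{a}, then {b}", "{a} followed by {b}"]


def reprogram(pool, num_clips, rng):
    """Compose a new (waveform, caption) training pair from pseudo-labeled clips.

    `pool` holds (waveform, caption) pairs. The captions stand in for the output
    of the 'distill' stage (pseudo prompts for unlabeled audio); here they are
    simply given.
    """
    picks = rng.sample(pool, num_clips)                   # distinct clips, random order
    waveform = np.concatenate([wav for wav, _ in picks])  # play clips back to back
    caption = picks[0][1]
    for _, next_caption in picks[1:]:
        template = rng.choice(SEQUENTIAL_TEMPLATES)
        caption = template.format(a=caption, b=next_caption)
    return waveform, caption


# Toy pool: constant-valued arrays stand in for 16 kHz audio clips.
pool = [
    (np.zeros(16_000), "a dog barks"),
    (np.ones(8_000), "rain falls on a roof"),
    (np.full(4_000, 0.5), "a car horn honks"),
]
waveform, caption = reprogram(pool, num_clips=2, rng=random.Random(0))
print(waveform.shape, caption)
```

Because each draw picks a different subset, ordering, and template, even a small pool of pseudo-labeled clips yields many distinct compositions, which is the data-enrichment effect this strategy relies on.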

Key Contributions

- Pseudo Prompt Enhancement: The authors address data scarcity by generating pseudo prompts through a distill-then-reprogram method. This approach uses unlabeled audio clips to create enhanced prompts, greatly expanding the number of possible concept compositions and thereby enriching the training data.

- Spectrogram Autoencoder: The model predicts audio representations in the latent space of a spectrogram encoder-decoder, which simplifies the learning task by representing audio as compressed latent variables. This design aims to support both efficient signal reconstruction and high-level semantic understanding.

- Contrastive Language-Audio Pretraining (CLAP): Conditioning on CLAP representations gives the model a robust language-to-audio mapping, because CLAP is trained to align text and audio in a shared embedding space.

- Model Performance: Make-An-Audio outperforms existing models, achieving state-of-the-art results on both subjective and objective benchmarks. It synthesizes natural, semantically aligned audio clips from textual descriptions.

- Cross-Modality Generation: Make-An-Audio extends audio generation to conditions from multiple user-defined modalities (text, audio, image, and video), opening new avenues for audio content creation, including personalized generation and fine-grained control.
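CLAP places text and audio in a shared embedding space by training with a contrastive objective. The NumPy sketch below shows the symmetric InfoNCE loss that CLIP-style models such as CLAP are typically trained with: matching text-audio pairs in a batch are pulled together and every other pairing is pushed apart. It illustrates the idea only; Make-An-Audio uses CLAP representations rather than this code, and the `clap_style_loss` name, the temperature of 0.07, and the toy embeddings are assumptions.

```python
import numpy as np


def _log_softmax(x, axis):
    """Numerically stable log-softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def clap_style_loss(text_emb, audio_emb, temperature=0.07):
    """Symmetric contrastive (InfoNCE) loss over a batch of paired embeddings.

    Row i of `text_emb` is assumed to describe row i of `audio_emb`.
    """
    t = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    a = audio_emb / np.linalg.norm(audio_emb, axis=1, keepdims=True)
    logits = t @ a.T / temperature        # scaled cosine similarities, (batch, batch)
    idx = np.arange(len(t))
    text_to_audio = -_log_softmax(logits, axis=1)[idx, idx].mean()
    audio_to_text = -_log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (text_to_audio + audio_to_text)


rng = np.random.default_rng(0)
audio = rng.normal(size=(4, 8))                 # toy audio embeddings
text = audio + 0.01 * rng.normal(size=(4, 8))   # nearly matching text embeddings
aligned = clap_style_loss(text, audio)
mismatched = clap_style_loss(text[::-1], audio)  # every pair deliberately broken
print(f"aligned: {aligned:.4f}  mismatched: {mismatched:.4f}")
```

A low loss on correctly paired embeddings and a high loss on shuffled ones is exactly the property that makes such an encoder useful as a text condition for audio generation.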

Implications and Future Work

At a research level, this work adds to the study of multimodal generative models by demonstrating the effectiveness of diffusion models for audio generation. Practically, the model's ability to exploit unlabeled data points to an efficient path for deploying such systems where labeled datasets are scarce.

Future work could reduce the computational demand of diffusion models, which, although effective, are resource-intensive. Efficiency improvements could also enable real-time applications, a critical consideration for interactive media and audio-visual synchronization.
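Much of the resource cost comes from iterative sampling: a diffusion model calls its denoising network once per reverse step. The NumPy sketch below implements standard DDPM ancestral sampling with a linear noise schedule and counts those calls. The schedule endpoints, step counts, latent shape, and the `CountingDenoiser` placeholder are assumptions for illustration, not Make-An-Audio's actual sampler.

```python
import numpy as np


class CountingDenoiser:
    """Hypothetical stand-in for the text-conditioned noise-prediction network."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, t):
        self.calls += 1
        return np.zeros_like(x)  # placeholder noise estimate


def ddpm_sample(eps_model, shape, num_steps, rng, beta_start=1e-4, beta_end=0.02):
    """DDPM ancestral sampling: one eps_model evaluation per reverse step."""
    betas = np.linspace(beta_start, beta_end, num_steps)  # linear noise schedule
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)  # start from pure Gaussian noise
    for t in reversed(range(num_steps)):
        eps = eps_model(x, t)
        # Mean of p(x_{t-1} | x_t) in the noise-prediction parameterization.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        noise = rng.standard_normal(shape) if t > 0 else 0.0
        x = mean + np.sqrt(betas[t]) * noise  # variance sigma_t^2 = beta_t
    return x


for steps in (1000, 100):
    model = CountingDenoiser()
    latent = ddpm_sample(model, (4, 20, 156), steps, np.random.default_rng(0))
    print(f"{steps} steps -> {model.calls} network evaluations, shape {latent.shape}")
```

The number of network evaluations equals the number of sampling steps, so reducing the step count or the cost of each evaluation is the most direct lever for the real-time use cases mentioned above.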

Building on this research, future developments could focus on improving the stability and reliability of generated outputs across various domains, including dynamic sound environments and diverse languages. Expanding the training data with real-world recordings and exploring transfer learning from other domains could also improve the generality of the approach.

Overall, this paper is a significant step toward automated synthesis of audio from text, providing a foundation for integrating audio generation into broader AI systems and for future research and applications in multimodal AI.