Overview of "AudioLDM: Text-to-Audio Generation with Latent Diffusion Models"

The paper presents AudioLDM, a new text-to-audio (TTA) generation framework leveraging latent diffusion models (LDMs), underpinned by contrastive language-audio pretraining (CLAP). This approach aims to address challenges in synthesizing high-quality, computationally efficient audio from text descriptions, without relying extensively on paired text-audio datasets during training.

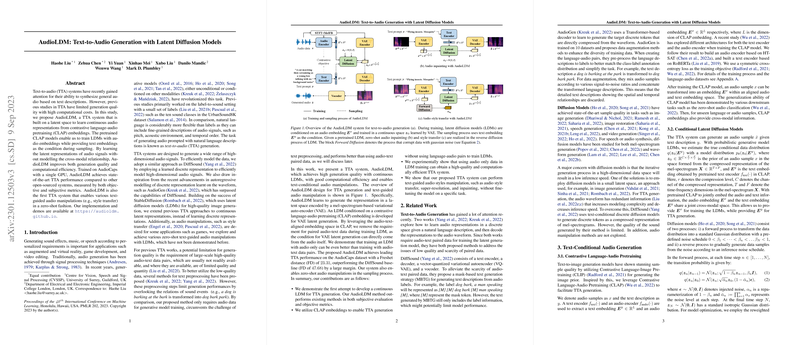

AudioLDM represents an advancement over previous TTA systems by learning continuous latent representations of audio signals, eschewing the modeling of cross-modal relationships. This is achieved by employing pre-trained CLAP models to extract audio and text embeddings. The AudioLDM framework first learns audio representations in a latent space embedded through variational autoencoders (VAEs) and subsequently utilizes LDMs conditioned on the CLAP embeddings for audio sample generation. Unlike prior models that heavily depend on large-scale, high-quality audio-text datasets—often constrained by their availability and quality—AudioLDM focuses on a more data-efficient model training process that can operate effectively even with audio-only data.

Methodology Highlights

- Contrastive Language-Audio Pretraining (CLAP): CLAP utilizes a dual encoder system to align audio and text representations in a shared latent space. This decoupling of text-audio relationships from generative model training allows AudioLDM to bypass text preprocessing and directly leverage rich semantic embeddings.

- Latent Diffusion Models (LDMs): Building on successful image-generation counterparts, LDMs are adapted for audio by generating representations in a smaller, computationally efficient latent space. Text conditions are introduced during sampling, providing explicit audio generation directives based on CLAP embeddings.

- Conditional Augmentation and Classifier-Free Guidance: Mixup strategies are employed for data augmentation without needing paired text descriptions. Additionally, classifier-free guidance improves the fidelity of conditional generation by modulating between conditional and unconditional noise predictions.

- Zero-Shot Audio Manipulation: The paper extends TTA capabilities to audio style transfer, super-resolution, and inpainting. These manipulations showcase the adaptability of AudioLDM in diverse audio content creation and modification scenarios without task-specific fine-tuning.

Experimental Validation

AudioLDM demonstrates superior performance over existing models like DiffSound and AudioGen. Notably, AudioLDM-S and AudioLDM-L, trained on AudioCaps—a smaller dataset—achieve state-of-the-art results across several metrics such as Frechet distance (FD), inception score (IS), and KL divergence. This underscores the efficacy of the latent diffusion approach combined with CLAP embeddings in realizing high-quality text-to-audio synthesis with lesser data requirement and computational resource.

Furthermore, AudioLDM's design excels in human evaluations, particularly in audio relevance and overall quality when compared against ground truth recordings. The experimental results reinforce the robustness of AudioLDM's architecture and its applicability in real-world audio content generation, making it a compelling candidate for tasks in entertainment, virtual environments, and content creation industries.

Future Implications and Developments

AudioLDM signifies a step forward in integrating LDMs with cross-modal embedding strategies like CLAP for TTA tasks. The broader implications suggest potential applications in automated content creation, enhancing accessibility technologies, and augmenting virtual experiences with synthesized audio components. Future research could further explore end-to-end training possibilities, leveraging larger and more diverse datasets, and improving sampling efficiency to unlock superior fidelity at higher sampling rates.

In summary, AudioLDM introduces a paradigm shift in TTA generation, not only by optimizing data utilization strategies but also by offering scalable, adaptable models suitable for varied audio generation paradigms. As audio synthesis continues to garner attention, frameworks like AudioLDM pave the way for more intuitive and expansive auditory AI applications.