Overview of "Tune-A-Video: One-Shot Tuning of Image Diffusion Models for Text-to-Video Generation"

The paper "Tune-A-Video: One-Shot Tuning of Image Diffusion Models for Text-to-Video Generation" presents an approach to text-to-video (T2V) generation that builds on existing text-to-image (T2I) diffusion models. Instead of training on a large-scale video dataset, Tune-A-Video fine-tunes a pre-trained T2I model on a single text-video pair (one-shot video tuning) and then generates new videos from edited versions of the prompt. Because only one video is needed for tuning, the method requires substantially less data and computation than conventional T2V training. This offers an alternative to the usual reliance on large video corpora.

Methodology

The method builds on pre-trained T2I diffusion models, specifically latent diffusion models (LDMs) such as Stable Diffusion. To extend a T2I model to video, the authors inflate its 2D convolutional layers into pseudo-3D layers that apply the original 2D kernels to each frame, and they add temporal self-attention layers. Key to this extension is a sparse spatio-temporal attention mechanism (sparse-causal attention). Instead of attending to every frame in the clip, each frame attends only to the first frame and its immediately preceding frame. This keeps the attention cost linear rather than quadratic in the number of frames, which matters when generating longer videos.
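The sparse-causal attention pattern described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, `q`, `k`, and `v` are assumed to be per-frame token features already projected by the query, key, and value matrices, and there is a single head with no batching. Following our reading of the paper, frame `i` queries keys and values drawn from the first frame and from frame `i - 1` (the first frame simply attends to itself). The hypothetical helper `attention_cost` counts query-key score entries to compare this pattern with full attention across all frames.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def sparse_causal_attention(q, k, v):
    """Sparse-causal attention over video frames (illustrative sketch).

    q, k, v: arrays of shape (frames, tokens, dim) holding per-frame latent
    tokens that have already been projected (single head assumed).
    Frame i attends to the tokens of frame 0 and frame max(i - 1, 0).
    """
    num_frames, _, dim = q.shape
    out = np.empty_like(q)
    for i in range(num_frames):
        prev = max(i - 1, 0)
        # Keys and values come from the first frame and the previous frame only.
        keys = np.concatenate([k[0], k[prev]], axis=0)    # (2 * tokens, dim)
        values = np.concatenate([v[0], v[prev]], axis=0)  # (2 * tokens, dim)
        scores = q[i] @ keys.T / np.sqrt(dim)             # (tokens, 2 * tokens)
        out[i] = softmax(scores, axis=-1) @ values
    return out


def attention_cost(num_frames, tokens):
    """Query-key score entries: full spatio-temporal vs. sparse-causal."""
    full = (num_frames * tokens) ** 2            # every token sees every token in the clip
    sparse = num_frames * tokens * 2 * tokens    # each frame sees the tokens of two frames
    return full, sparse


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q, k, v = (rng.standard_normal((8, 64, 32)) for _ in range(3))
    print(sparse_causal_attention(q, k, v).shape)  # (8, 64, 32)
    print(attention_cost(8, 64))                   # (262144, 65536)
```

With 8 frames of 64 tokens each, full attention computes 4 times as many score entries. In general the ratio is `num_frames / 2`, so the savings grow with clip length.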

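An essential element of the approach is fine-tuning the inflated model on a single text-video pair. The authors propose an attention tuning strategy that updates only selected attention parameters (the query projections in the spatio-temporal and cross-attention layers, plus the newly added temporal self-attention layers) and freezes the rest. This preserves the knowledge of the pre-trained T2I model while the model learns the motion of the source video. At inference time, structure guidance is obtained through denoising diffusion implicit model (DDIM) inversion: the latents of the source video are inverted to noise, and sampling with the edited prompt starts from this noise instead of random noise. This helps the generated frames retain the structure and motion of the source video, so the synthesized videos stay smooth and temporally coherent.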
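DDIM inversion runs the deterministic (eta = 0) DDIM update in reverse, carrying a clean latent toward noise. The NumPy sketch below illustrates the idea under explicit assumptions: the linear beta schedule, the 50-step grid, and all function names are our own choices rather than values taken from the paper, and `eps_model` is a hypothetical stand-in for the inflated, text-conditioned UNet noise predictor. As in common implementations, each inversion step evaluates the predictor at the current (less noisy) latent with the next timestep, which only approximates the exact reverse trajectory.

```python
import numpy as np


def alpha_bar_path(num_train_steps=1000, num_inference_steps=50):
    """Timesteps and cumulative alphas for a DDIM grid (assumed linear schedule)."""
    betas = np.linspace(1e-4, 0.02, num_train_steps)
    alpha_bars = np.cumprod(1.0 - betas)
    ts = np.linspace(0, num_train_steps - 1, num_inference_steps).round().astype(int)
    # Prepend alpha_bar = 1.0 to represent the clean latent before the first step.
    return ts, np.concatenate([[1.0], alpha_bars[ts]])


def ddim_step(x, a_from, a_to, eps):
    """Deterministic DDIM update (eta = 0) from noise level a_from to a_to.

    Moving toward smaller alpha_bar adds noise (inversion); moving toward
    larger alpha_bar removes it (sampling).
    """
    x0_pred = (x - np.sqrt(1.0 - a_from) * eps) / np.sqrt(a_from)
    return np.sqrt(a_to) * x0_pred + np.sqrt(1.0 - a_to) * eps


def ddim_invert(x0, eps_model, ts, path):
    """Map clean video latents x0 to structured noise x_T."""
    x = x0
    for k in range(len(ts)):
        eps = eps_model(x, ts[k])
        x = ddim_step(x, path[k], path[k + 1], eps)
    return x


def ddim_sample(x_t, eps_model, ts, path):
    """Denoise from x_T back to a clean latent on the same grid."""
    x = x_t
    for k in reversed(range(len(ts))):
        eps = eps_model(x, ts[k])
        x = ddim_step(x, path[k + 1], path[k], eps)
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    latents = rng.standard_normal((8, 4, 16, 16))  # (frames, channels, height, width)
    # Hypothetical predictor that ignores x, so the inversion is exactly reversible.
    toy_eps = lambda x, t: np.full_like(x, 0.1 * np.sin(t))
    ts, path = alpha_bar_path()
    noise = ddim_invert(latents, toy_eps, ts, path)
    print(np.allclose(ddim_sample(noise, toy_eps, ts, path), latents))  # True
```

With a real noise predictor the round trip is no longer exact, but the inverted noise still encodes the layout and motion of the source video. As we understand the method, that noise, rather than fresh Gaussian noise, is the starting point when sampling with the edited prompt.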

Results and Evaluation

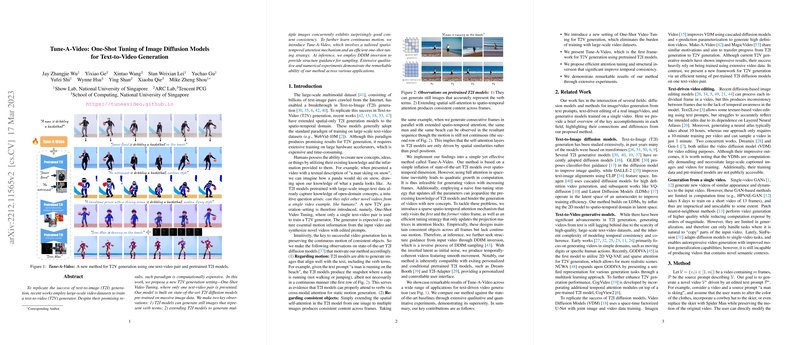

The experiments show that Tune-A-Video produces temporally coherent videos that follow their text prompts. The method outperforms several state-of-the-art baselines, including CogVideo and Text2LIVE, in qualitative comparisons and in automatic CLIP-based metrics that measure frame consistency and alignment with the text. The authors also demonstrate a range of applications, such as object editing, background change, and style transfer, illustrating the versatility of the approach.
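The CLIP-based metrics can be sketched as follows, assuming the per-frame image embeddings and the prompt's text embedding have already been computed by a CLIP model (not shown). The function names are hypothetical. Frame consistency is taken here as the mean cosine similarity over all pairs of distinct frames, and text alignment as the mean cosine similarity between each frame and the prompt. This is our reading of the usual formulation; individual papers differ in details such as comparing only consecutive frames or reporting values scaled by 100.

```python
import itertools

import numpy as np


def _unit(x):
    # L2-normalize along the last axis so dot products become cosine similarities.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def frame_consistency(frame_embs):
    """Mean cosine similarity over all pairs of distinct frame embeddings."""
    e = _unit(np.asarray(frame_embs, dtype=float))
    if len(e) < 2:
        raise ValueError("need at least two frames")
    sims = [float(e[i] @ e[j]) for i, j in itertools.combinations(range(len(e)), 2)]
    return float(np.mean(sims))


def text_alignment(frame_embs, text_emb):
    """Mean cosine similarity between each frame embedding and the prompt embedding."""
    e = _unit(np.asarray(frame_embs, dtype=float))
    t = _unit(np.asarray(text_emb, dtype=float))
    return float(np.mean(e @ t))


if __name__ == "__main__":
    frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]  # toy 2-D "embeddings"
    print(frame_consistency(frames))               # pairs: 0, 1, 0 -> about 0.333
    print(text_alignment(frames, [1.0, 0.0]))      # frames: 1, 0, 1 -> about 0.667
```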

Implications and Future Directions

The paper has both practical and conceptual implications for generative AI and multimedia. Practically, it makes video synthesis feasible with limited data and compute, which is useful for personalized content creation and media production. Conceptually, it shows that a T2I model can be carried into the video domain through targeted architectural changes and lightweight tuning rather than large-scale video training, which sets the stage for further work on multimodal generative models.

The authors also note limitations, particularly in scenes involving multiple objects and occlusions. Future work could strengthen the conditioning inputs to address these cases, for example by adding depth maps that help the model distinguish objects and handle occlusions and object interactions.

In summary, Tune-A-Video offers an efficient framework for T2V generation that extends the capabilities of pre-trained image diffusion models to video and opens up new applications in AI-driven video content creation.