An Evaluation of Retrieval-Augmented Multimodal Language Modeling

The paper under examination introduces a retrieval-augmented approach to multimodal language modeling, implemented in a model called RA-CM3. The work sits at the intersection of language and vision processing: it extends the retrieval-augmented paradigm, first established in NLP, to multimodal data. The authors propose using an external memory to improve models that generate both text and images.

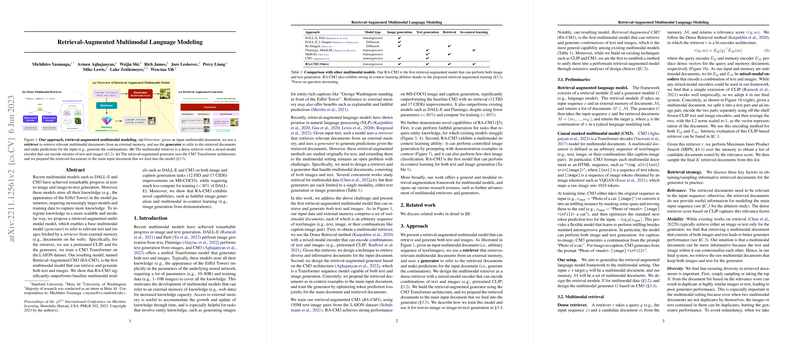

Overview of Approach

At the core of the proposed model is a retrieval-augmented multimodal language model architecture that couples a retriever with a generator. Unlike existing models such as DALL-E and CM3, which store all of their knowledge in their parameters, RA-CM3 supplements that knowledge by fetching relevant text and images from an external memory at generation time. This reduces the model's dependence on parameter count and training-data coverage, the two factors that typically constrain multimodal learners.

The multimodal retriever uses a pretrained CLIP model to retrieve relevant documents from an external dataset such as LAION. The retrieved documents then condition the generation step, which is carried out by a CM3 Transformer generator. With this architecture, RA-CM3 outperforms its baseline counterparts while keeping compute and data requirements modest.
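The retrieve-then-generate flow described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: small hand-written vectors stand in for CLIP embeddings, cosine-similarity top-k is assumed as the retrieval rule, and the names `retrieve_top_k` and `build_generator_input` as well as the `<break>` separator are hypothetical.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale vectors to unit length along the last axis."""
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norms, eps)


def retrieve_top_k(query_vec, memory_vecs, k=2):
    """Return indices and cosine scores of the k most similar memory items."""
    q = l2_normalize(np.asarray(query_vec, dtype=np.float64))
    m = l2_normalize(np.asarray(memory_vecs, dtype=np.float64))
    scores = m @ q  # cosine similarity, since both sides are unit length
    top = np.argsort(-scores, kind="stable")[:k]
    return top.tolist(), scores[top].tolist()


def build_generator_input(retrieved_docs, prompt, sep=" <break> "):
    """Prepend retrieved documents to the prompt (hypothetical format)."""
    return sep.join(list(retrieved_docs) + [prompt])


# Toy external memory: in a real system these would be encoder embeddings
# of image-text documents; here they are made-up 2-D vectors.
memory_docs = ["doc_a", "doc_b", "doc_c"]
memory_vecs = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
query_vec = np.array([0.9, 0.1])

indices, scores = retrieve_top_k(query_vec, memory_vecs, k=2)
context = build_generator_input([memory_docs[i] for i in indices], "prompt")
print(indices)  # [0, 2]
print(context)  # doc_a <break> doc_c <break> prompt
```

The generator would then consume `context` in place of the bare prompt. At the scale of a dataset like LAION, an approximate nearest-neighbor index would replace the brute-force matrix product used here.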

Numerical Results and Performance

The authors support RA-CM3's performance claims with quantitative results on standard datasets such as MS-COCO. The model lowers Fréchet Inception Distance (FID) by 12 points on image generation and improves the CIDEr score by 17 points on caption generation. These gains in both the image and text domains, measured relative to baseline models, illustrate the practical advantage of incorporating a retrieval mechanism.
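For readers less familiar with the image metric, the standard definition of FID is reproduced here as background; it is not taken from the paper's text. FID fits a Gaussian to Inception features of real images, with mean and covariance $(\mu_r, \Sigma_r)$, and another to features of generated images, $(\mu_g, \Sigma_g)$, then measures the distance between the two:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

Lower FID is better, so a 12-point reduction is an improvement. CIDEr runs the other way: higher scores indicate captions closer to the human references.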

The proposed model is also resource-efficient, requiring less than 30% of the compute used by comparably performing models such as DALL-E. This matters as datasets and model sizes in the field continue to grow.

Methodological Innovations and Implications

RA-CM3 introduces several methodological enhancements tailored to multimodal retrieval and generation. The first is a dense retriever built on a mixed-modal encoder, which can embed documents that combine text and images and so supplies the generator with more relevant context. The second is a training objective for the generator that optimizes a loss over both the retrieved documents and the primary input, which significantly improves training efficiency.
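One way to picture a loss that covers both the retrieved documents and the primary input is a weighted sum of two token-level negative log-likelihoods. The sketch below assumes that form; the weight `alpha`, the function names, and the toy probabilities are illustrative choices, not values or code from the paper.

```python
import numpy as np


def token_nll(probs):
    """Sum of negative log-probabilities the model assigned to the true tokens."""
    p = np.asarray(probs, dtype=np.float64)
    return float(-np.log(p).sum())


def joint_loss(main_probs, retrieved_probs, alpha=0.1):
    """Loss on the main input plus an alpha-weighted loss on retrieved tokens.

    ASSUMPTION: the weighted-sum form and the default alpha are illustrative.
    """
    return token_nll(main_probs) + alpha * token_nll(retrieved_probs)


# Toy per-token probabilities of the correct next token.
main_probs = [0.5, 0.5]   # tokens of the primary input
retrieved_probs = [0.25]  # tokens of the retrieved documents

loss = joint_loss(main_probs, retrieved_probs, alpha=0.5)
print(round(loss, 4))  # 2.0794
```

With `alpha=0` the objective reduces to the usual loss on the main input alone. A positive `alpha` also draws training signal from the retrieved tokens that already sit in the same context window, which is one plausible reading of the training-efficiency claim.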

The work has significant implications for future research and practical applications. By integrating retrieval with generation at multimodal scale, models can draw on up-to-date and contextually relevant knowledge. This capability is crucial for tasks that demand detailed world knowledge or entity-specific content, filling gaps left by static models whose knowledge is fixed in their parameters. Moreover, because generation is conditioned on retrieved documents that can be inspected, the retrieval-centric design can make predictions easier to explain and verify, potentially advancing the interpretability of AI outputs.

Future Prospects

Given the current trajectory of AI research, bringing retrieval augmentation into multimodal modeling points to a significant shift. It prompts new research directions, such as improving the retriever's accuracy or extending it to modalities beyond text and image. It also opens paths toward greater model robustness and contextual adaptability, bringing models closer to real-world applications where knowledge is both large-scale and dynamic.

In essence, this paper represents an important step in multimodal AI: it combines retrieval augmentation with multimodal generation and points toward more knowledge-grounded and efficient AI modeling.