Holistic Evaluation of Language Models

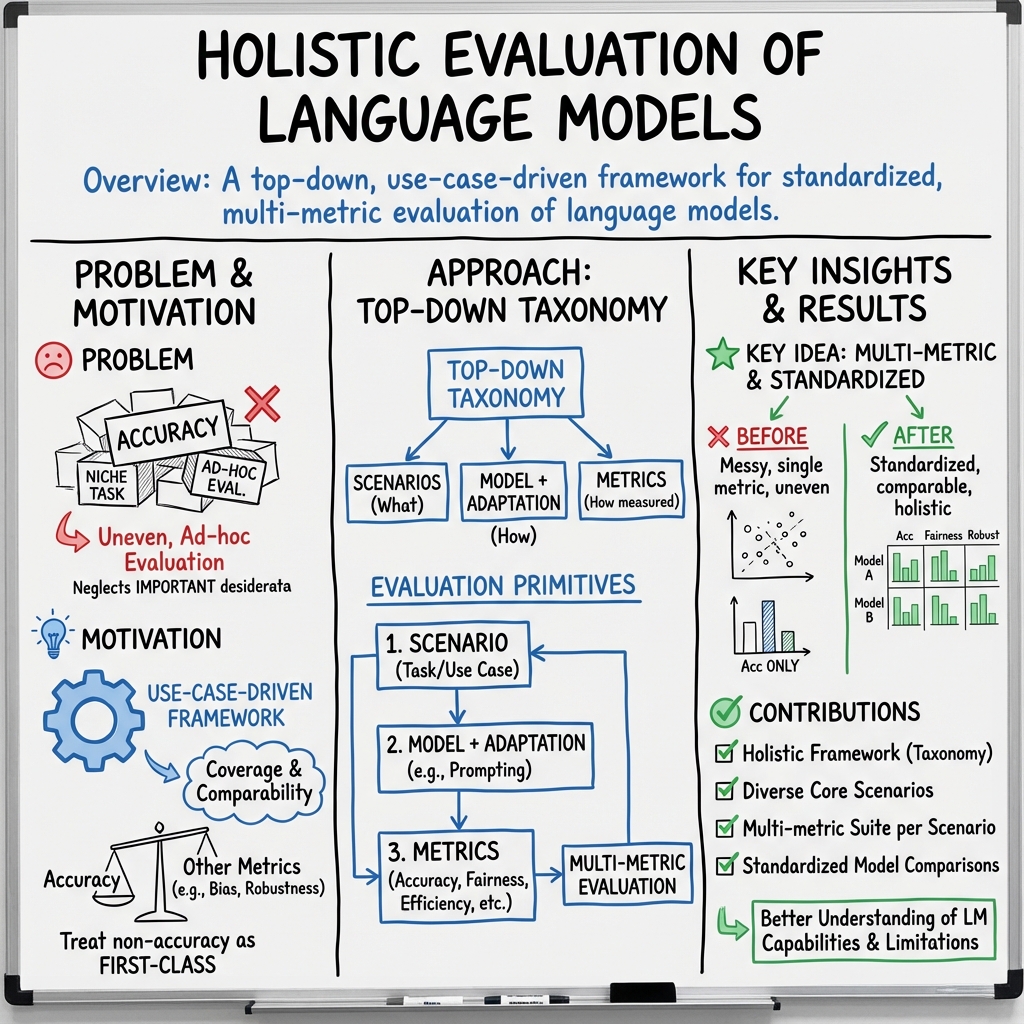

Abstract: Language models (LMs) are becoming the foundation for almost all major language technologies, but their capabilities, limitations, and risks are not well understood. We present Holistic Evaluation of Language Models (HELM) to improve the transparency of language models. First, we taxonomize the vast space of potential scenarios (i.e. use cases) and metrics (i.e. desiderata) that are of interest for LMs. Then we select a broad subset based on coverage and feasibility, noting what's missing or underrepresented (e.g. question answering for neglected English dialects, metrics for trustworthiness). Second, we adopt a multi-metric approach: we measure 7 metrics (accuracy, calibration, robustness, fairness, bias, toxicity, and efficiency) for each of 16 core scenarios when possible (87.5% of the time). This ensures metrics beyond accuracy don't fall to the wayside, and that trade-offs are clearly exposed. We also perform 7 targeted evaluations, based on 26 targeted scenarios, to analyze specific aspects (e.g. reasoning, disinformation). Third, we conduct a large-scale evaluation of 30 prominent language models (spanning open, limited-access, and closed models) on all 42 scenarios, 21 of which were not previously used in mainstream LM evaluation. Prior to HELM, models on average were evaluated on just 17.9% of the core HELM scenarios, with some prominent models not sharing a single scenario in common. We improve this to 96.0%: now all 30 models have been densely benchmarked on the same core scenarios and metrics under standardized conditions. Our evaluation surfaces 25 top-level findings. For full transparency, we release all raw model prompts and completions publicly for further analysis, as well as a general modular toolkit. We intend for HELM to be a living benchmark for the community, continuously updated with new scenarios, metrics, and models.
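The counts quoted in the abstract can be cross-checked with a few lines of arithmetic. This is only a sanity check on the stated figures; the variable names are illustrative and do not come from the paper or its toolkit.

```python
# Figures quoted in the abstract.
core_scenarios = 16
targeted_scenarios = 26
metrics = 7
measured_fraction = 0.875  # "when possible (87.5% of the time)"

# Core plus targeted scenarios should give the stated total.
total_scenarios = core_scenarios + targeted_scenarios

# Every metric paired with every core scenario.
metric_scenario_pairs = metrics * core_scenarios

# Number of (metric, core scenario) pairs that were actually measured.
measured_pairs = round(measured_fraction * metric_scenario_pairs)

print(total_scenarios)        # 42
print(metric_scenario_pairs)  # 112
print(measured_pairs)         # 98
```

Under this reading, 98 of the 112 possible metric and core-scenario pairs are measured, and 16 core plus 26 targeted scenarios account for the 42 scenarios mentioned.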

Explain it Like I'm 14

Overview: What Is This Paper About?

This paper is about finding a better, fairer way to test and compare LLMs—computer programs like chatbots that read and write text. Instead of checking only “how often they get the right answer,” the authors want to judge these models in a more complete way, considering many different qualities that matter in real-life use.

Key Questions the Paper Tries to Answer

The paper asks simple but important questions:

- What exactly should we test when we judge an LLM?

- How can we organize these tests so they make sense for different real-world uses (like answering questions, summarizing, or helping write code)?

- How do we measure many things (not just accuracy), like safety, fairness, speed, cost, and reliability?

- How can we compare different models under the same conditions so the results are fair and useful?

How They Did It (Methods, Explained Simply)

The authors use a “top-down” plan, which is like building the rules of a game before playing it. Instead of randomly picking tests, they first define a clear structure—called a taxonomy—to organize what gets tested and how.

Think of it like creating a well-labeled library:

- “Scenarios” are the shelves (the specific tasks or situations we want the model to handle, like summarizing a news article or answering a science question).

- “Metrics” are the stickers on the books (the scores we give to judge performance—accuracy, safety, fairness, speed, cost, and more).

- “Models with adaptation” is how each book is prepared for the shelf (how the model is set up or prompted to handle a scenario).

They call these the “evaluation primitives,” which just means the basic building blocks of any test:

- Scenario: what we want the model to do

- Model + adaptation: the model we use and how we prepare it for the task

- Metrics: how we score the model’s performance

With this setup, they run many models on many scenarios and score them using multiple metrics, all under the same conditions. This makes comparisons fair and consistent.
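The three building blocks can be sketched in a few lines of Python. This is a toy illustration, not the HELM toolkit: the class names, the `toy_generate` stand-in for a model, and the two metrics are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Scenario:
    """What we want the model to do: a named set of (input, reference) pairs."""
    name: str
    examples: List[Tuple[str, str]]


@dataclass
class AdaptedModel:
    """A model plus its adaptation (here, just a prompt template)."""
    name: str
    prompt_template: str
    generate: Callable[[str], str]  # hypothetical stand-in for a real model call

    def answer(self, text: str) -> str:
        return self.generate(self.prompt_template.format(input=text))


# A metric scores one prediction against one reference.
Metric = Callable[[str, str], float]


def exact_match(prediction: str, reference: str) -> float:
    return float(prediction.strip().lower() == reference.strip().lower())


def short_answer(prediction: str, reference: str) -> float:
    # Toy stand-in for an efficiency-style metric: 1.0 if at most 5 words.
    return float(len(prediction.split()) <= 5)


def evaluate(model: AdaptedModel, scenario: Scenario,
             metrics: Dict[str, Metric]) -> Dict[str, float]:
    """Run one model on one scenario and average every metric."""
    totals = {name: 0.0 for name in metrics}
    for text, reference in scenario.examples:
        prediction = model.answer(text)
        for name, metric in metrics.items():
            totals[name] += metric(prediction, reference)
    count = len(scenario.examples)
    return {name: total / count for name, total in totals.items()}


def toy_generate(prompt: str) -> str:
    # Pretend model: knows one fact, guesses wrong otherwise.
    return "4" if "2 + 2" in prompt else "London"


scenario = Scenario("toy_qa", [("What is 2 + 2?", "4"),
                               ("What is the capital of France?", "Paris")])
model = AdaptedModel("toy_model", "Question: {input}\nAnswer:", toy_generate)
results = evaluate(model, scenario,
                   {"accuracy": exact_match, "short_answer": short_answer})
print(results)  # {'accuracy': 0.5, 'short_answer': 1.0}
```

Because every model goes through the same `evaluate` function with the same scenarios and metrics, the resulting numbers are directly comparable, which is the point of running everything under the same conditions.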

Main Findings and Why They Matter

- Standardization: Before this work, models were tested unevenly. On average, each one had been evaluated on only 17.9% of the core HELM scenarios, and some prominent models did not share a single scenario. After this work, that coverage rose to 96.0%, with all 30 models tested on the same core scenarios and metrics under the same rules, so results are easier to compare.

- Multi-metric testing: Accuracy alone isn’t enough. A model might be accurate but slow, expensive, unsafe, or biased. The paper shows it’s important to judge models across multiple metrics because what matters depends on the use case. For example, a customer support bot needs to be safe, reliable, and fast—not just accurate.

- Clear taxonomy: By clearly defining scenarios and metrics, it becomes obvious what tests exist and what’s missing. This helps researchers focus on gaps, like scenarios that haven’t been tested yet or qualities (like fairness or robustness) that need better measurement.

These findings matter because they make LLM evaluation more complete, fair, and useful for people who want to pick the right model for their needs.
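To see why several metrics can change which model looks best, here is a small sketch. The scores are invented for illustration and are not results from the paper, and combining metrics with weights is an assumption of this example, not something the paper prescribes.

```python
from typing import Dict

# Invented scores for two hypothetical models (higher is better).
scores: Dict[str, Dict[str, float]] = {
    "model_a": {"accuracy": 0.90, "safety": 0.60, "speed": 0.50},
    "model_b": {"accuracy": 0.80, "safety": 0.90, "speed": 0.80},
}


def weighted_score(model_scores: Dict[str, float],
                   weights: Dict[str, float]) -> float:
    """Weighted average over only the metrics that the use case cares about."""
    total_weight = sum(weights.values())
    return sum(model_scores[m] * w for m, w in weights.items()) / total_weight


def best_model(all_scores: Dict[str, Dict[str, float]],
               weights: Dict[str, float]) -> str:
    return max(all_scores, key=lambda name: weighted_score(all_scores[name], weights))


# Use case 1: only accuracy matters.
accuracy_only = {"accuracy": 1.0}
# Use case 2: a customer support bot that must also be safe and fast.
support_bot = {"accuracy": 1.0, "safety": 2.0, "speed": 1.0}

print(best_model(scores, accuracy_only))  # model_a
print(best_model(scores, support_bot))    # model_b
```

With accuracy alone, `model_a` wins (0.90 versus 0.80). Once safety and speed count, `model_b` comes out ahead (0.85 versus 0.65), which is the kind of trade-off that a single accuracy number hides.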

What This Means for the Future

This work helps everyone who uses or builds LLMs:

- Users can choose models that fit their real needs (not just the one with the highest accuracy).

- Developers can see where their models are strong or weak and improve them (for example, making them safer or faster).

- Researchers and the community get a shared way to test models, which speeds up progress and transparency.

- As LLMs become part of everyday tools, this kind of careful evaluation helps make AI more trustworthy and responsible.

In short, the paper pushes the field toward fair, practical, and complete testing—so we understand not just whether a model is “smart,” but whether it is also safe, reliable, and right for the job.
