Overview of AfroLM: A Multilingual Pretrained LLM for African Languages

The paper "AfroLM: A Self-Active Learning-based Multilingual Pretrained LLM for 23 African Languages" presents an innovative approach towards enhancing NLP capabilities in low-resource language settings. Central to this paper is AfroLM, a multilingual LLM designed to support 23 African languages, making it the most extensive model of its kind to date. The research introduces a self-active learning framework that significantly improves the data efficiency and performance of LLMs, particularly for languages where large-scale training datasets are unavailable.

Key Contributions

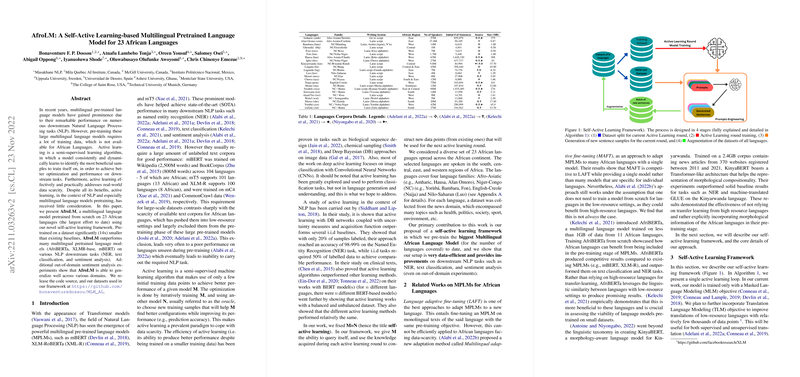

- Self-Active Learning Framework: The novel self-active learning framework allows the model to iteratively expand its training data from a small initial dataset. Unlike traditional active learning where a separate oracle model is used, AfroLM employs the same model for both learning and querying, thus simplifying the training process and reducing computational complexity.

- Robust Performance on Downstream Tasks: AfroLM demonstrated superior performance in various NLP tasks, such as named entity recognition (NER), text classification, and sentiment analysis, outperforming earlier models like AfriBERTa, XLM-RoBERTa, and mBERT. This is remarkable given that AfroLM was pretrained on a dataset 14 times smaller than used by these baselines.

- Diverse Language Coverage: The model's support for 23 African languages, including widely spoken languages like Swahili and less-resourced ones like Fon and Ghomálá', addresses the linguistic diversity of the African continent. This supports more inclusive technology development, recognizing the linguistic needs of millions of speakers.

Experimental Evaluation and Results

In terms of technical accomplishments, the model showcases its effectiveness through a series of robust experiments:

- NER Performance: AfroLM with active learning outperformed AfriBERTa and compared competitively with models trained on significantly larger datasets. Its ability to generalize across different languages and perform well in out-of-domain sentiment analysis tasks further underscores the utility of the self-active learning framework.

- Data Efficiency: The research provides empirical evidence that the self-active learning framework leads to substantial performance improvements even with limited data availability, which is a critical consideration for many low-resource languages.

Implications and Future Work

The demonstrated efficacy of AfroLM has profound implications for the development of NLP applications tailored to African languages. By enhancing data efficiency and reducing the reliance on large datasets, this framework could facilitate broader adoption and development of language technology tools for underrepresented languages globally.

For future research, the authors suggest exploring the relationship between the number of active learning rounds and model performance to further fine-tune the efficiency of the framework. Additionally, incorporating a weighted loss function and diverse sample generation techniques could further stabilize and enrich the training process.

Conclusion

This paper makes a significant stride in the field of multilingual LLMing by addressing challenges associated with low-resource settings. The AfroLM model and its underlying self-active learning framework offer a promising pathway for improving NLP tasks across diverse languages with minimal data requirements. Consequently, it represents a vital step towards more equitable access to language technologies for speakers of African languages.