Overview of "Chinese CLIP: Contrastive Vision-Language Pretraining in Chinese"

The paper "Chinese CLIP: Contrastive Vision-Language Pretraining in Chinese" adapts foundational vision-language models, specifically CLIP, to Chinese-language applications. The authors propose a two-stage pretraining strategy that addresses the difficulty of transferring cross-modal models to language-specific scenarios.

Methodology

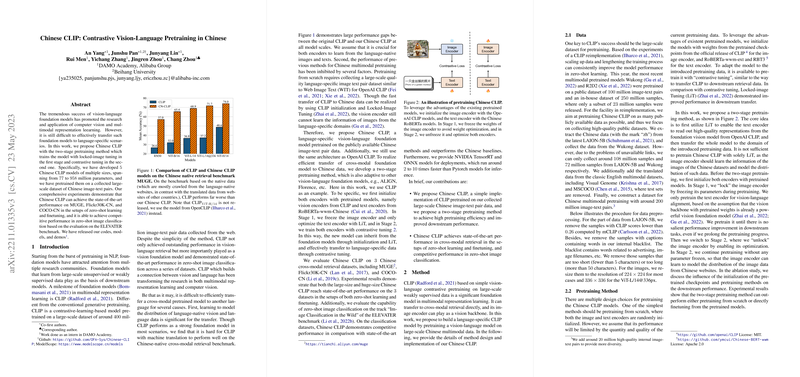

The paper introduces Chinese CLIP, a model trained with a two-stage pretraining method. The first stage uses locked-image tuning (LiT): only the text encoder is optimized while the image encoder remains frozen. The second stage is contrastive tuning, in which both encoders are optimized. The authors initialize the model from existing pretrained weights: CLIP's vision encoder for the image side and RoBERTa-wwm-Chinese for the text encoder.
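The two stages and the contrastive objective can be sketched in plain Python. This is a minimal illustration, not the authors' implementation. It assumes the standard CLIP-style symmetric cross-entropy loss over an image-text similarity matrix. The function names (`trainable_modules`, `clip_contrastive_loss`), the toy embeddings, and the temperature value of 0.07 are all illustrative assumptions.

```python
import math

def trainable_modules(stage):
    """Return which encoders receive gradient updates in a given stage (hypothetical helper)."""
    if stage == 1:
        # Stage 1, locked-image tuning: image encoder frozen, text encoder trained.
        return {"image_encoder": False, "text_encoder": True}
    if stage == 2:
        # Stage 2, contrastive tuning: both encoders trained.
        return {"image_encoder": True, "text_encoder": True}
    raise ValueError("stage must be 1 or 2")

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def log_softmax(row):
    # Subtract the row maximum before exponentiating for numerical stability.
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]

def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy over a batch; pair k is (image_embs[k], text_embs[k])."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, scaled by temperature.
    logits = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts]
              for i in imgs]
    # Image-to-text direction: the correct text for image k is column k.
    i2t = -sum(log_softmax(logits[k])[k] for k in range(n)) / n
    # Text-to-image direction: same computation on the transposed matrix.
    logits_t = [list(col) for col in zip(*logits)]
    t2i = -sum(log_softmax(logits_t[k])[k] for k in range(n)) / n
    return (i2t + t2i) / 2

# Toy batch of two pairs: matched pairs give a low loss, swapped pairs a high one.
aligned = clip_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(trainable_modules(1), aligned < swapped)
```

In a real training loop the same loss would be used in both stages. Only the set of parameters passed to the optimizer changes between stage 1 and stage 2.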

The pretraining dataset consists of approximately 200 million Chinese image-text pairs, drawn from sources such as the LAION-5B and Wukong datasets. Data at this scale is essential for effective vision-language alignment.

Experimental Results

The paper evaluates Chinese CLIP on three cross-modal retrieval benchmarks: MUGE, Flickr30K-CN, and COCO-CN. Chinese CLIP achieves state-of-the-art results in both zero-shot and finetuning setups, often outperforming existing models such as Wukong and R2D2. Zero-shot image classification is additionally validated on translated datasets from the ELEVATER benchmark, reflecting the model’s capability in handling open-domain visual tasks.
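Zero-shot image classification with a CLIP-style model is usually done by comparing an image embedding against text embeddings of the candidate labels and choosing the most similar one. The sketch below shows that idea under stated assumptions. The embeddings are hand-written toy vectors rather than encoder outputs, and `zero_shot_classify` is a hypothetical helper, not part of any released Chinese CLIP code.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)

def zero_shot_classify(image_emb, class_text_embs):
    """Return (best_label, scores) where scores maps each label to its similarity."""
    scores = {label: cosine(image_emb, emb)
              for label, emb in class_text_embs.items()}
    best = max(scores, key=scores.get)
    return best, scores

# Toy stand-ins for text-encoder outputs of two Chinese label prompts.
class_text_embs = {
    "猫": [1.0, 0.0],  # "cat"
    "狗": [0.0, 1.0],  # "dog"
}
# Toy stand-in for an image-encoder output that lies close to the "猫" direction.
image_emb = [0.9, 0.1]

label, scores = zero_shot_classify(image_emb, class_text_embs)
print(label)
```

No labeled training images are needed for the target classes, which is why translating the label sets of existing benchmarks is enough to evaluate the model in Chinese.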

Key Findings

- Model Variants: Five Chinese CLIP models were developed, ranging from 77 to 958 million parameters. Performance improved across tasks as model size increased.

- Pretraining Efficiency: The two-stage pretraining method proved crucial, outperforming training from scratch and single-stage tuning in various downstream tasks.

- Deployment: The authors provide implementations that can be adapted to ONNX and TensorRT, which speeds up inference and supports practical applications.

Implications and Future Work

The research has both practical and theoretical implications. Practically, Chinese CLIP opens new avenues for deploying vision-language models in Chinese-specific digital ecosystems, enhancing applications such as e-commerce and social media content understanding. Theoretically, the two-stage pretraining approach could inform methods for adapting foundation models to other languages or specialized domains.

The paper acknowledges limitations, notably that its training dataset is smaller than those used for large-scale models such as Florence and PaLI. Future research directions include scaling both dataset size and model parameters to further improve performance. Exploring efficient model distillation could also make these models usable in resource-constrained environments.

Conclusion

In summary, this paper represents a significant step in adapting vision-language models for Chinese applications. The proposed two-stage pretraining paradigm and the resulting benchmark performance underscore the value of such methods for bridging language-specific gaps in AI models.