Multi-modal Prompt Learning (MaPLe) for Vision-Language Models

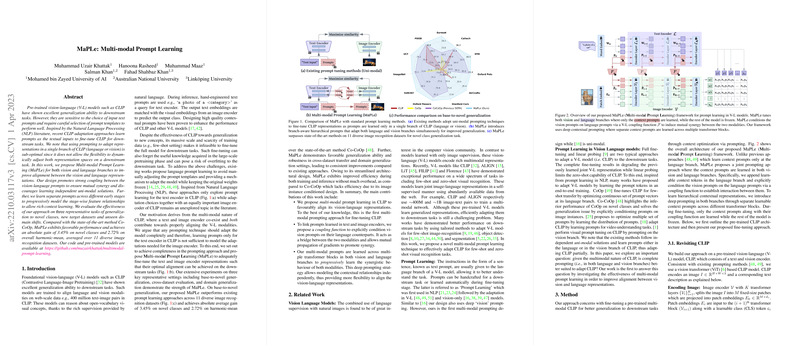

The research paper "MaPLe: Multi-modal Prompt Learning" addresses the challenge of adapting large-scale vision-language (V-L) models such as CLIP to downstream tasks. Previous approaches have primarily focused on uni-modal prompt learning, in either the vision or the language branch. The paper introduces MaPLe, a method that learns prompts in both branches jointly to improve the alignment between vision and language representations.

Background

CLIP and similar V-L models are pre-trained on extensive datasets to align the language and image modalities. These models generalize well, but their effectiveness on specific downstream tasks is often limited for two reasons: they are sensitive to the wording of the input text prompt, and they are too large to fine-tune fully on the limited data usually available for a downstream task. Earlier alternatives fall short in different ways: manually crafted prompts tend to give suboptimal adaptation, while uni-modal prompt learning methods such as CoOp and Co-CoOp adapt only one branch and can compromise generalization to novel classes.

Methodology

MaPLe introduces a comprehensive prompting strategy that incorporates prompts in both image and text encoders, fostering a dynamic adaptation of both modalities. Key features of the methodology include:

- Multi-modal Prompt Learning: Unlike previous uni-modal approaches, MaPLe utilizes prompts in both branches, ensuring complete and synergistic adaptation.

- Hierarchical and Deep Prompting: Prompts are learned at multiple transformer blocks rather than only at the input layer. Because each prompted block receives its own prompts, features at different depths are adapted separately and contextual representations are refined progressively.

- Vision-Language Coupling: A coupling function conditions the vision prompts on the language prompts, so the vision prompts are derived from their language counterparts rather than learned independently. This links the two branches and allows gradients to propagate between them, improving the synergy between the modalities.
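The three ideas above can be sketched together in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the coupling function is assumed to be one linear projection per prompted layer, the sizes (`N_CTX`, `D_LANG`, `D_VIS`, `DEPTH`) are illustrative, and the names `CoupledPrompts` and `inject` are hypothetical. Appending prompts at the end of the token sequence is also an assumption made for simplicity.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (assumptions, not values stated in this summary).
N_CTX = 2      # learnable prompt tokens per prompted layer
D_LANG = 512   # width of the text encoder
D_VIS = 768    # width of the image encoder
DEPTH = 9      # number of transformer blocks that receive prompts


class CoupledPrompts:
    """Language prompts plus one (assumed linear) coupling map per layer."""

    def __init__(self):
        # Learnable language prompts, one set per prompted layer (deep prompting).
        self.lang_prompts = [
            rng.normal(0.0, 0.02, (N_CTX, D_LANG)) for _ in range(DEPTH)
        ]
        # Hypothetical coupling parameters: weight and bias of a linear map.
        self.weights = [
            rng.normal(0.0, 0.02, (D_LANG, D_VIS)) for _ in range(DEPTH)
        ]
        self.biases = [np.zeros(D_VIS) for _ in range(DEPTH)]

    def vision_prompts(self):
        # Vision prompts are not free parameters here: each one is a
        # projection of the language prompts of the same layer.
        return [
            p @ w + b
            for p, w, b in zip(self.lang_prompts, self.weights, self.biases)
        ]


def inject(tokens, prompts, first_layer):
    """Append prompts at the first layer; swap in fresh prompts afterwards."""
    if first_layer:
        return np.concatenate([tokens, prompts], axis=0)
    # Drop the previous layer's prompt tokens, then append the new ones.
    return np.concatenate([tokens[: -len(prompts)], prompts], axis=0)


prompts = CoupledPrompts()
vis = prompts.vision_prompts()

# Stand-in for the image tokens of one sample (class token + 196 patches).
patch_tokens = rng.normal(size=(197, D_VIS))
x = inject(patch_tokens, vis[0], first_layer=True)   # before block 1
x = inject(x, vis[1], first_layer=False)             # before block 2
print(x.shape)
```

In a trainable version, the language prompts and the projection parameters would be the only learned quantities while the CLIP backbone stays frozen; because the vision prompts are computed from the language prompts, a loss on the image branch also updates the language prompts.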

Results

The paper presents extensive evaluations across 11 diverse image recognition datasets, demonstrating that MaPLe consistently outperforms existing methods in several scenarios:

- Base-to-Novel Generalization: Averaged over the 11 datasets, MaPLe achieves an absolute gain of 3.45% on novel classes and 2.72% on the harmonic mean of base-class and novel-class accuracy, compared with the state-of-the-art Co-CoOp.

- Cross-dataset Evaluation: When tested on datasets unseen during training, MaPLe achieves the highest average accuracy, highlighting its robust generalizability.

- Domain Generalization: MaPLe exhibits superior robustness against domain shifts, further validating its efficacy in diverse real-world applications.
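The harmonic mean in the generalization result combines base-class and novel-class accuracy into a single score that penalizes a large gap between the two. A short sketch of the computation follows. The four accuracy values are assumptions chosen to be consistent with the gains quoted above, and should be checked against the tables in the paper before being reused.

```python
def harmonic_mean(base_acc: float, novel_acc: float) -> float:
    """Harmonic mean of base-class and novel-class accuracy (in percent)."""
    if base_acc + novel_acc == 0:
        return 0.0
    return 2 * base_acc * novel_acc / (base_acc + novel_acc)


# Assumed average accuracies over the 11 datasets (not given in this summary).
maple = {"base": 82.28, "novel": 75.14}
cocoop = {"base": 80.47, "novel": 71.69}

hm_maple = harmonic_mean(maple["base"], maple["novel"])
hm_cocoop = harmonic_mean(cocoop["base"], cocoop["novel"])

print(f"novel-class gain:   {maple['novel'] - cocoop['novel']:.2f}")
print(f"harmonic-mean gain: {hm_maple - hm_cocoop:.2f}")
```

Under these assumed inputs, the two printed gains match the 3.45% and 2.72% figures reported above. Because the harmonic mean is dominated by the smaller of its two inputs, a method cannot score well by improving base-class accuracy alone.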

Implications

The introduction of MaPLe marks a significant step towards more effective adaptation of V-L models. Because its design adapts both branches of CLIP rather than one, the learned prompts can adjust visual and textual features together. This can improve performance on tasks involving rare or less generic categories, which suggests better handling of datasets that diverge from mainstream image collections such as ImageNet.

Future Directions

The insights from MaPLe open up several avenues for future research:

- Exploration of alternative coupling mechanisms that further enhance interaction and synergy between modalities.

- Investigation of multi-modal prompting effects on other V-L tasks outside the domain of image recognition.

- Enhancement of prompt initialization strategies and understanding their impact on model fine-tuning.

In conclusion, MaPLe presents a compelling framework that holistically adapts V-L models for specific tasks, addressing shortcomings of previous methods through comprehensive multi-modal prompt learning.