Introduction

The Multimodal Retrieval-Augmented Generator (MuRAG) is a recent advance in open-domain question answering (QA) over both images and text. It addresses a limitation of existing retrieval-augmented language models, which draw only on textual knowledge and therefore overlook the large amount of information contained in visual data. MuRAG accesses an external, non-parametric multimodal memory, so it can retrieve images as well as text passages and use them to ground its answers.
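To make the idea of a non-parametric memory concrete, here is a minimal sketch, not MuRAG's implementation. It assumes that memory entries (images or text passages) are stored as precomputed embedding vectors and retrieved by maximum inner product with a query embedding. `MEMORY`, `retrieve`, and the hand-written vectors are hypothetical placeholders standing in for real encoder outputs.

```python
# Hypothetical toy memory: each entry pairs a precomputed embedding with its
# source item. In a real system the vectors would come from the model's
# image/text encoder; here they are hand-written for illustration only.
MEMORY = [
    ([0.9, 0.1, 0.0], ("image", "photo_of_eiffel_tower.jpg")),
    ([0.1, 0.8, 0.1], ("text", "The Eiffel Tower is in Paris.")),
    ([0.0, 0.2, 0.9], ("text", "Mount Fuji is in Japan.")),
]


def dot(u, v):
    """Inner product, used as the retrieval score in this sketch."""
    return sum(a * b for a, b in zip(u, v))


def retrieve(query_emb, memory, k=2):
    """Return the k memory items with the highest inner product to the query."""
    ranked = sorted(memory, key=lambda entry: dot(query_emb, entry[0]), reverse=True)
    return [item for _, item in ranked[:k]]


# Stand-in embedding for a question such as "Where is the tower in this photo?"
query = [0.6, 0.5, 0.0]
for modality, content in retrieve(query, MEMORY):
    print(modality, content)
```

Because the knowledge lives outside the model's weights, adding an image or passage in this sketch only means encoding it and appending an entry. A large-scale system would replace the linear scan with an approximate nearest-neighbour index.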

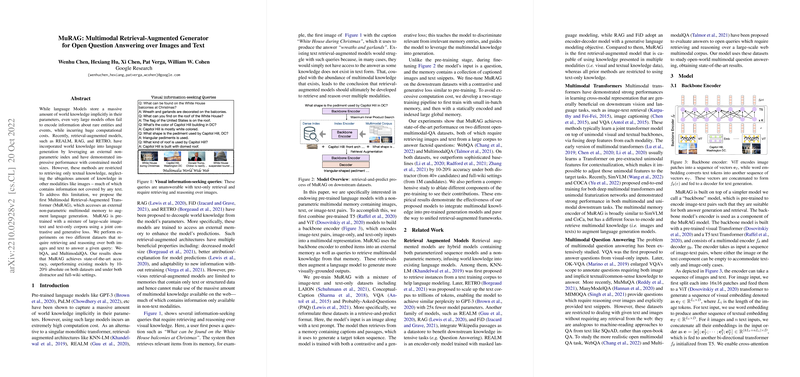

Pre-training and Model Architecture

MuRAG is built on a backbone of pre-trained T5 and ViT models, which lets it handle both text and images. It is pre-trained on a mixture of datasets, including LAION, Conceptual Captions, VQA, and PAQ, to teach the model to augment language generation with retrieved external knowledge. Pre-training uses a joint objective that combines a contrastive loss with a generative loss, so retrieval accuracy and answer generation are optimized together.
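The dual-loss idea can be sketched in plain Python. This is an illustration under stated assumptions, not the paper's code: it assumes an in-batch contrastive loss in which `memories[i]` is the positive for `queries[i]` and the other batch items act as negatives, a generative loss computed as the mean negative log-likelihood of the reference answer tokens, and an unweighted sum of the two terms. All function names are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def contrastive_loss(queries, memories):
    """In-batch contrastive loss: memories[i] is the positive for queries[i];
    every other memory in the batch serves as a negative."""
    total = 0.0
    for i, q in enumerate(queries):
        scores = [dot(q, m) for m in memories]
        # Log-sum-exp with max subtraction for numerical stability.
        top = max(scores)
        log_z = top + math.log(sum(math.exp(s - top) for s in scores))
        total += log_z - scores[i]  # negative log softmax probability of the positive
    return total / len(queries)


def generative_loss(target_token_probs):
    """Mean negative log-likelihood the decoder assigns to the reference answer tokens."""
    return -sum(math.log(p) for p in target_token_probs) / len(target_token_probs)


def joint_loss(queries, memories, target_token_probs):
    # Assumption: the two terms are summed with equal weight.
    return contrastive_loss(queries, memories) + generative_loss(target_token_probs)


queries = [[1.0, 0.0], [0.0, 1.0]]
memories = [[1.0, 0.0], [0.0, 1.0]]
loss = joint_loss(queries, memories, target_token_probs=[0.5, 0.5])
```

With these two orthogonal query/memory pairs, the contrastive term is log(1 + e^-1) ≈ 0.313, and because the decoder assigns probability 0.5 to each answer token, the generative term is log 2 ≈ 0.693. Lowering either term, by scoring the correct memory higher or by making the reference answer more likely, lowers the joint loss.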

Datasets and Fine-tuning

MuRAG was evaluated on the WebQA and MultimodalQA datasets. It was particularly effective on questions that require retrieving and understanding both text and images, where it produced accurate, visually grounded answers. Fine-tuning proceeds in two stages: the model is first trained with an in-batch memory and then trained against a statically encoded global memory, adapting it to the downstream tasks.
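The two fine-tuning stages differ mainly in where the memory comes from and when it is encoded. The sketch below assumes, following the description above, that stage 1 re-encodes only the current batch's candidates at every step, while stage 2 encodes the whole corpus once and afterwards only performs lookups against that fixed memory. `CountingEncoder`, its toy character-count embedding, and the helper functions are hypothetical stand-ins used to count encoder calls.

```python
class CountingEncoder:
    """Hypothetical stand-in for a multimodal encoder that counts its calls."""

    def __init__(self):
        self.calls = 0

    def encode(self, item):
        self.calls += 1
        # Toy embedding: [length, number of spaces]. Illustration only.
        return [len(item), item.count(" ")]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def stage1_memory(encoder, batch_candidates):
    """Stage 1: the memory is just the batch's own candidates, re-encoded at
    every step so the embeddings reflect the latest encoder weights."""
    return [(encoder.encode(c), c) for c in batch_candidates]


def build_global_memory(encoder, corpus):
    """Stage 2: encode the full corpus once; the result is reused unchanged."""
    return [(encoder.encode(c), c) for c in corpus]


def top_k(query_emb, memory, k):
    ranked = sorted(memory, key=lambda e: dot(query_emb, e[0]), reverse=True)
    return [c for _, c in ranked[:k]]


encoder = CountingEncoder()
corpus = ["a cat", "a red bus", "the big old dog"]
steps = 3

for _ in range(steps):
    stage1_memory(encoder, corpus[:2])  # re-encoded on every step
stage1_calls = encoder.calls

encoder.calls = 0
global_mem = build_global_memory(encoder, corpus)  # encoded once
for _ in range(steps):
    top_k([1.0, 0.0], global_mem, k=1)  # lookups only, no re-encoding

print(stage1_calls, encoder.calls)  # prints: 6 3
```

Stage 1 calls the encoder six times (two candidates per step over three steps), while stage 2 calls it three times in total, once per corpus item, however many steps follow. This illustrates the likely trade-off: a small memory that always matches the current encoder early in training, then a much larger fixed memory for the final tasks.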

Performance and Insights

MuRAG significantly outperforms existing baseline models, particularly in the full-wiki setting. Although it incorporates external multimodal knowledge effectively, it still struggles with queries that depend purely on image content. A human analysis of its errors groups them into categories and identifies counting and object recognition as particular weaknesses.

Conclusion

In summary, MuRAG marks an important step forward for multimodal open-domain QA. Despite its current limitations, it shows promise as a simple and extensible way to integrate multimodal knowledge into pre-trained language models. The approach broadens the knowledge available to QA systems and lets them ground answers in both textual and visual context. Future work may focus on aligning pre-training objectives more closely with downstream tasks to further improve performance.