- The paper introduces Decomposed Prompting (DecomP), a method that breaks complex tasks into modular sub-tasks to improve large language model performance.

- It pairs a decomposer with specialized sub-task handlers so that each component of a task can be assigned and optimized separately, outperforming standard Chain-of-Thought prompting.

- Experimental results on long-context QA and arithmetic reasoning tasks show significant accuracy gains and scalability across diverse applications.

Decomposed Prompting: A Modular Approach for Solving Complex Tasks

This paper introduces Decomposed Prompting (DecomP), a modular approach designed to improve the performance of large language models (LLMs) on complex tasks by decomposing them into simpler sub-tasks. The central idea is to structure prompts the way software is structured: each sub-task gets its own component that can be optimized individually, which improves the system as a whole.

Motivation and Background

Conventional few-shot prompting techniques for LLMs, such as Chain-of-Thought (CoT), face limitations when handling complex tasks. These approaches primarily rely on providing LLMs with elaborate reasoning chains within prompts, which is often inadequate for intricate tasks or those with lengthy inputs. CoT prompts have shown potential in capturing reasoning steps, but their effectiveness diminishes as task complexity escalates.

To address these shortcomings, DecomP introduces a hierarchical system where tasks are decomposed into modular sub-tasks, each handled by a specialized LLM or symbolic function. This approach allows for more focused and efficient learning and execution by leveraging specific models and prompts tailored to each sub-task.

Decomposed Prompting Framework

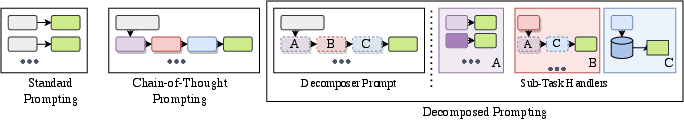

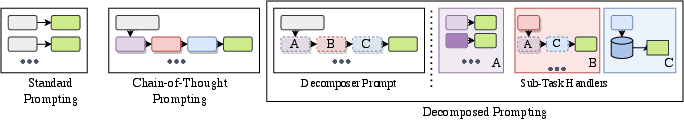

The DecomP framework employs a decomposer and several sub-task handlers, where the decomposer determines the sequence of sub-tasks required to solve a complex problem, and each sub-task is executed by a dedicated handler.

- Decomposer: It frames the overarching solution and delegates appropriate questions to sub-task handlers.

- Sub-task Handlers: These can be prompts tailored to LLMs or symbolic functions like Elasticsearch for retrieval tasks, which handle specific parts of the problem.

This modularity allows incorporating external tools and knowledge bases when a sub-task exceeds the LLM’s capabilities, for example, by using a retrieval system for document fetching (Figure 1).

Figure 1: Chain-of-Thought prompting spells out reasoning steps in a single prompt, while Decomposed Prompting uses a decomposer prompt that lays out the procedure and delegates distinct sub-tasks to separate handlers.
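The split between the decomposer's handlers can be illustrated with a small registry. This is a minimal sketch, not the paper's code: `stub_llm`, `make_prompt_handler`, `HANDLERS`, and `run_subtask` are hypothetical names, and the stub stands in for a real model call so the example runs offline.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def split_words(text: str) -> str:
    """Symbolic handler: plain string code, no model call needed."""
    return ", ".join(text.split())


def stub_llm(prompt: str) -> str:
    """Placeholder for a real model call (hypothetical).

    It 'answers' with the first character of the last question in the prompt.
    """
    last_question = prompt.rsplit("Q: ", 1)[1].split("\nA:")[0]
    return last_question[0]


def make_prompt_handler(few_shot: str, llm: Callable[[str], str]) -> Handler:
    """Prompt-based handler: wraps an LLM with a sub-task-specific prompt."""
    def handler(question: str) -> str:
        return llm(f"{few_shot}\nQ: {question}\nA:")
    return handler


# Both kinds of handler share one interface: question in, answer out.
HANDLERS: Dict[str, Handler] = {
    "split": split_words,
    "first_letter": make_prompt_handler("Q: apple\nA: a", stub_llm),
}


def run_subtask(name: str, question: str) -> str:
    """Dispatch one sub-task question to the handler registered under `name`."""
    return HANDLERS[name](question)
```

Because every handler exposes the same interface, replacing a prompt-based handler with a symbolic one (or the reverse) only changes one registry entry, which is the modularity the framework relies on.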

Implementation and Methodology

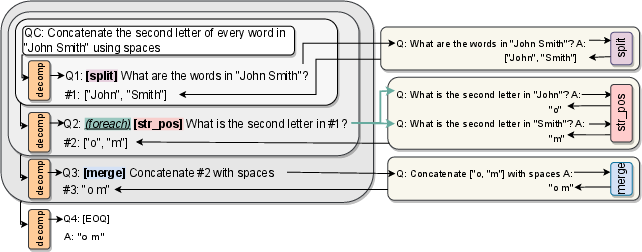

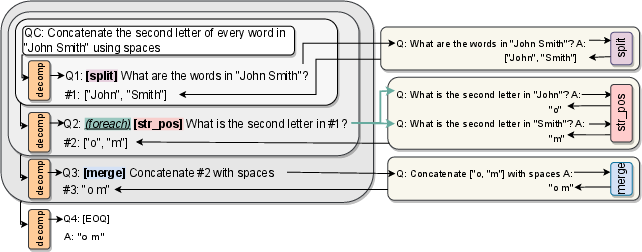

In the DecomP system, the task is first passed to a decomposer prompt, which outputs the sequence of sub-tasks (Figure 2). Each sub-task is then executed by its handler, which may be another prompt or a symbolic function. The decomposer operates iteratively: it starts from the original question, uses the history of earlier sub-task questions and answers to generate the next question, and halts when a final answer is reached (Figure 3).

Figure 2: Prompts used for decomposing tasks into sub-tasks. They include procedures for split and merge sub-tasks, enabling flexible and reusable prompt structures.

Figure 3: Inference in DecomP iterates over prompts, generating the next question and selecting its sub-task handler until a final prediction is reached.
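The iterative inference procedure can be sketched as a short control loop. This is an illustration under stated assumptions rather than the authors' implementation: `run_decomp`, `scripted_decomposer`, and the toy handlers are hypothetical, the `[EOQ]` stop marker is a convention chosen for this sketch, and the decomposer is a fixed script standing in for a prompted LLM.

```python
from typing import Callable, Dict, List, Tuple, Union

# One history entry per executed sub-task: (handler name, question, answer).
History = List[Tuple[str, str, str]]
Step = Union[str, Tuple[str, str]]

EOQ = "[EOQ]"  # stop marker; the token name is an assumption of this sketch


def run_decomp(
    task: str,
    decomposer: Callable[[str, History], Step],
    handlers: Dict[str, Callable[[str], str]],
    max_steps: int = 10,
) -> str:
    """Ask the decomposer for the next sub-task until it signals the end."""
    history: History = []
    for _ in range(max_steps):
        step = decomposer(task, history)
        if step == EOQ:
            # The last sub-task answer is taken as the final prediction.
            return history[-1][2] if history else ""
        name, question = step
        history.append((name, question, handlers[name](question)))
    raise RuntimeError("decomposer did not terminate")


def scripted_decomposer(task: str, history: History) -> Step:
    """Stand-in for a decomposer prompt: follows a fixed three-step plan."""
    plan = ["split", "first_letters", "merge"]
    if len(history) == len(plan):
        return EOQ
    previous_answer = history[-1][2] if history else task
    return plan[len(history)], previous_answer


toy_handlers: Dict[str, Callable[[str], str]] = {
    "split": lambda q: ",".join(q.split()),
    "first_letters": lambda q: ",".join(word[0] for word in q.split(",")),
    "merge": lambda q: q.replace(",", " "),
}

result = run_decomp("Jack Ryan", scripted_decomposer, toy_handlers)
```

The `max_steps` guard is a design choice of the sketch: a prompted decomposer can fail to emit the stop marker, so the loop needs an upper bound.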

Case Studies and Evaluation

DecomP was tested across several domains, including symbolic manipulation, list reversal, long-context question answering (QA), and open-domain multi-hop QA, and it improved on both CoT and least-to-most prompting. The gains were most notable on longer input sequences and in generalization to unseen compositions, and they extended to QA tasks from the HotpotQA and MuSiQue datasets.

For instance, DecomP improved open-domain QA by integrating Elasticsearch for document retrieval, which let it work over document collections beyond what an LLM can handle on its own (Figure 4).

Figure 4: The open-domain multi-hop question answering prompt uses Elasticsearch retrieval integrated into the DecomP framework.
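To show how a retrieval step can sit behind the same handler interface, the sketch below uses a toy in-memory keyword retriever as a stand-in for Elasticsearch. Everything here is hypothetical: the corpus, `retrieve`, `two_hop`, and the first-word "reader" that replaces an LLM reading-comprehension handler. A real system would issue the query to a search backend instead.

```python
import re
from typing import List

# Tiny illustrative corpus; a real setup would index documents in a search engine.
CORPUS = [
    "Paris is the capital of France.",
    "The Seine flows through Paris.",
    "Berlin is the capital of Germany.",
]


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens."""
    return re.findall(r"\w+", text.lower())


def retrieve(query: str, k: int = 1) -> List[str]:
    """Symbolic handler: rank documents by word overlap with the query."""
    query_tokens = set(tokenize(query))
    ranked = sorted(
        CORPUS,
        key=lambda doc: len(query_tokens & set(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def two_hop(first_query: str, second_template: str) -> str:
    """Two retrieval hops, where hop 2 depends on the answer to hop 1."""
    hop1_doc = retrieve(first_query)[0]
    # Stand-in for an LLM handler that would extract the bridge entity.
    bridge = hop1_doc.split()[0]
    return retrieve(second_template.format(bridge))[0]


answer_doc = two_hop("capital of France", "river flows through {}")
```

The second query cannot be formed until the first hop is answered, which is why multi-hop QA fits an iterative decomposer better than a single retrieval call.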

Furthermore, DecomP supports recursive decomposition, in which a sub-task handler calls the same procedure on smaller inputs. The list reversal experiments demonstrate this capability, and DecomP also improved arithmetic reasoning accuracy on the MultiArith dataset.
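Recursive decomposition for list reversal can be sketched as follows. The halving strategy, the `max_len` threshold, and the names `reverse_short` and `reverse_decomposed` are assumptions of this sketch; `reverse_short` stands in for a few-shot prompt that is only trusted on short lists.

```python
from typing import Callable, List


def reverse_short(items: List[str]) -> List[str]:
    """Stand-in for a prompt-based handler that reverses short lists."""
    return items[::-1]


def reverse_decomposed(
    items: List[str],
    base_handler: Callable[[List[str]], List[str]] = reverse_short,
    max_len: int = 3,
) -> List[str]:
    """Reverse a long list by recursing on halves and merging in swapped order."""
    if len(items) <= max_len:
        # Base case: short enough for the base handler to solve directly.
        return base_handler(items)
    mid = len(items) // 2
    left = reverse_decomposed(items[:mid], base_handler, max_len)
    right = reverse_decomposed(items[mid:], base_handler, max_len)
    # Reversed right half comes first, then reversed left half.
    return right + left


words = ["a", "b", "c", "d", "e", "f", "g", "h", "i", "j"]
reversed_words = reverse_decomposed(words)
```

The base handler never sees more than `max_len` items, so its reliability on short inputs carries over to lists of any length.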

Discussion and Conclusion

DecomP provides a structured, modular alternative for solving complex tasks with LLMs. Like a software library, its modular design supports scalability, maintainability, and incremental improvement of individual components without overhauling the entire system. This flexibility makes it promising for applications beyond those tested.

Future directions could include integrating more sophisticated symbolic processes into DecomP or adapting it to a wider range of tasks and model architectures. The accuracy gains DecomP shows suggest that modular decomposition could be valuable in the design and deployment of LLM-based systems.