News Summarization and Evaluation in the Era of GPT-3: An Expert Overview

The paper under examination presents a comprehensive study of the evolving landscape of text summarization, focusing on the role of large language models (LLMs) such as GPT-3 in news summarization. It evaluates how well GPT-3 generates news summaries and compares its outputs with those of fine-tuned, task-specific models. The authors conduct a two-part analysis: they assess summary quality across generic, keyword-focused, and aspect-based summarization settings, and they examine whether existing automatic evaluation metrics adequately capture the quality of GPT-3-generated summaries.

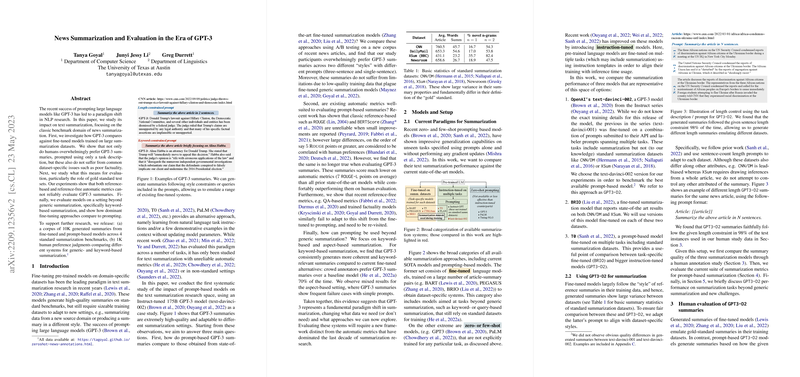

The research investigates three principal questions: the comparative quality of GPT-3-generated summaries versus state-of-the-art fine-tuned models, the suitability of current automated evaluation metrics for assessing these summaries, and the capabilities of prompt-based models beyond generic summarization tasks.

Evaluation and Comparison of Summarization Systems

The research provides empirical evidence that human evaluators consistently prefer GPT-3 summaries over those generated by fine-tuned models. The GPT-3 summaries were found to be more coherent and contextually comprehensive, and they could be tailored through the prompt, for example by varying summary length or keyword emphasis. A significant finding was the model's ability to maintain high abstraction without sacrificing factuality; poor factuality is a common issue in dataset-specific fine-tuned models.

Analysis of Automatic Evaluation Metrics

The paper also critically examines the reliability of existing evaluation metrics, such as ROUGE and BERTScore, in the context of GPT-3-generated content. The findings suggest that these metrics, traditionally used for reference-based evaluation, fail to reflect human evaluators' preferences when assessing the quality of GPT-3 summaries. The metrics misjudge GPT-3 outputs largely because the summaries deviate from the style of the reference summaries. This calls into question the current framework for automated summary evaluation and suggests a need for revised methodologies that can capture the diversity and quality of LLM-generated summaries.
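To make the reference-style problem concrete, the following is a minimal sketch of a ROUGE-1-style unigram-overlap F1 score. It is a simplification written for illustration, not the official ROUGE implementation (no stemming, a single reference, a naive tokenizer), and the example sentences are invented rather than taken from the paper. It shows how a faithful paraphrase can score far below a near-copy of the reference.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase and keep alphanumeric runs (a deliberately naive tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rouge1_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap F1 between a candidate and a single reference summary."""
    cand_counts = Counter(tokenize(candidate))
    ref_counts = Counter(tokenize(reference))
    # Clipped overlap: a token is matched at most as often as it occurs in both texts.
    overlap = sum((cand_counts & ref_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


# Hypothetical sentences, invented for illustration only.
reference = "The city council approved the new budget on Tuesday."
near_copy = "The city council approved the new budget."
paraphrase = "Local lawmakers signed off on next year's spending plan this week."

print(f"near copy:  {rouge1_f1(near_copy, reference):.3f}")
print(f"paraphrase: {rouge1_f1(paraphrase, reference):.3f}")
```

Both candidates convey roughly the same fact, yet the paraphrase shares almost no unigrams with the reference and so receives a much lower score. A summary written in a different style from the references is penalized in the same way, whatever its quality.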

Keyword and Aspect-Based Summarization

Another focus of the paper is summarization tailored to specific features like keywords and aspects of articles. The investigation of keyword-focused summarization tasks demonstrated that GPT-3 outperformed fine-tuned models such as CTRLSum. The adaptability of GPT-3, facilitated by its capability to follow prompt-based instructions, underscored its effectiveness in generating summaries that accurately capture the specified keyword context. For aspect-based summarization, however, the performance was less consistent, illustrating potential limitations when high-level thematic aspects were not explicitly present in the source text.
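The prompt-based control described above can be sketched as a small template builder. The function name `build_prompt` and the exact instruction wording are assumptions made for illustration; they are not confirmed to match the prompts used in the paper, and no model API is called here.

```python
from typing import Optional


def build_prompt(article: str,
                 num_sentences: int = 3,
                 keyword: Optional[str] = None,
                 aspect: Optional[str] = None) -> str:
    """Build a zero-shot summarization prompt (hypothetical wording).

    With neither keyword nor aspect, the prompt asks for a generic summary.
    """
    if keyword is not None and aspect is not None:
        raise ValueError("Specify at most one of keyword or aspect.")
    if num_sentences < 1:
        raise ValueError("num_sentences must be at least 1.")

    instruction = f"Summarize the above article in {num_sentences} sentences"
    focus = keyword if keyword is not None else aspect
    if focus is not None:
        # The same template serves keyword and aspect control; only the focus term differs.
        instruction += f", focusing on {focus}"
    return f"Article: {article}\n\n{instruction}."


# Placeholder article text; a real article would go here.
article_text = "<article text>"
print(build_prompt(article_text))
print(build_prompt(article_text, num_sentences=2, keyword="the mayor"))
```

The design point is that one model serves all three settings: switching from generic to keyword-focused or aspect-based summarization changes only the instruction string, whereas a fine-tuned system typically needs training data for each control type.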

Implications and Future Directions

The implications of this research are multifaceted. Practically, it supports the view that future text summarization research can leverage large pre-trained LLMs, even in domains where traditional models require significant fine-tuning on fixed datasets. This paradigm shift reduces dependency on large labeled datasets and makes it easier to scale to different summarization styles tailored to user or domain-specific needs.

Theoretically, this work challenges the foundational constructs of automatic evaluation in text summarization, advocating for the development of metrics sensitive to the inherent qualities of summaries produced by generative models. The metrics evaluated in the paper do not adequately capture these qualities, which motivates the exploration of newer evaluation frameworks that align more closely with human judgment.
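One simple way to check whether a metric aligns with human judgment is to compare how the two rank the same set of systems. The sketch below computes Kendall's tau-a from scratch. The system names and all numbers are placeholders invented for illustration, not results from the paper, and this is not presented as the authors' analysis procedure.

```python
from itertools import combinations
from typing import Sequence


def kendall_tau(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Kendall's tau-a: +1 for identical rankings, -1 for fully reversed ones.

    Tied pairs count as neither concordant nor discordant.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("Need two equal-length sequences with at least 2 items.")
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        product = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


# Placeholder values for three hypothetical systems (NOT from the paper).
systems = ["system_a", "system_b", "system_c"]
metric_scores = [0.40, 0.35, 0.30]   # e.g. an automatic metric per system
human_pref_rate = [0.20, 0.30, 0.50]  # e.g. share of annotator votes per system

print(kendall_tau(metric_scores, human_pref_rate))
```

A value near +1 would mean the metric orders systems the way annotators do, while a value near or below zero means the metric is uninformative or misleading for that comparison. In these placeholder numbers the two rankings are fully reversed.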

Finally, the research outlines future prospects for AI development in text summarization, notably how to harness the adaptability of models like GPT-3 for applications beyond conventional tasks, such as dynamic, context-aware summarization across diverse content types and languages. Future investigations should therefore focus on refining model prompts and improving evaluation methods, perhaps including learning-based evaluation paradigms that natively accommodate natural language nuances and human preferences.

In summary, the paper presents a methodical dissection of GPT-3's impact on text summarization and invites a reevaluation of established methodologies and metrics, guiding the field towards a more inclusive and adaptive framework for assessing generated text.