BEiT-3: A Multimodal Foundation Model for Vision and Vision-Language Tasks

The paper "Image as a Foreign Language: BEiT Pretraining for All Vision and Vision-Language Tasks" examines the growing convergence of language and vision pretraining. The authors introduce BEiT-3, a general-purpose multimodal foundation model that achieves state-of-the-art performance on a range of vision and vision-language tasks. The work advances this convergence along three axes: backbone architecture, pretraining task, and model scaling.

Core Contributions

- Unified Architecture: Multiway Transformers

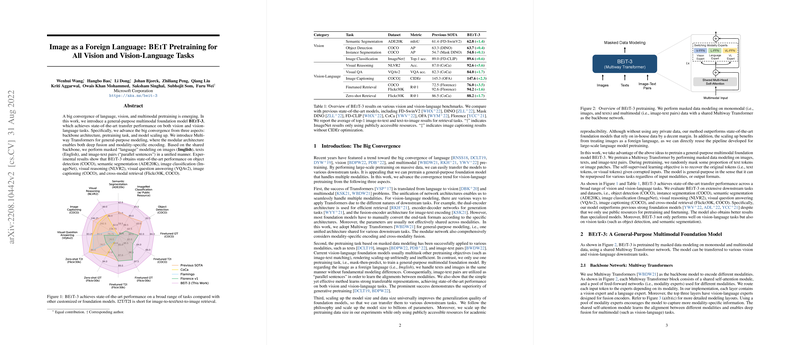

BEiT-3 employs a Multiway Transformer architecture that supports both modality-specific encoding and cross-modal fusion. Each Multiway Transformer block combines a self-attention module shared across modalities with a set of modality-specific feed-forward networks, so every token attends over the full sequence but is transformed by the feed-forward network that matches its modality. This lets one model handle vision-only inputs (images) as well as vision-language inputs (image-text pairs).
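The routing idea described above can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the class name `MultiwayBlock`, the single attention head, the random weights, the ReLU activation, and the omission of layer normalization are all simplifying assumptions made for illustration.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class MultiwayBlock:
    """Toy block (hypothetical): one shared attention module, one FFN expert per modality."""

    def __init__(self, dim, hidden, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        # Attention projections are shared by every token, whatever its modality.
        self.w_q = random_matrix(dim, dim, rng)
        self.w_k = random_matrix(dim, dim, rng)
        self.w_v = random_matrix(dim, dim, rng)
        # Each modality gets its own two-layer feed-forward expert.
        self.experts = {
            name: (random_matrix(hidden, dim, rng), random_matrix(dim, hidden, rng))
            for name in ("vision", "language")
        }

    def shared_attention(self, tokens):
        queries = [matvec(self.w_q, t) for t in tokens]
        keys = [matvec(self.w_k, t) for t in tokens]
        values = [matvec(self.w_v, t) for t in tokens]
        scale = 1.0 / math.sqrt(self.dim)
        mixed = []
        for q in queries:
            weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
            mixed.append([sum(w * v[d] for w, v in zip(weights, values)) for d in range(self.dim)])
        return mixed

    def expert_ffn(self, vector, modality):
        w_in, w_out = self.experts[modality]
        activated = [max(0.0, h) for h in matvec(w_in, vector)]  # ReLU
        return matvec(w_out, activated)

    def forward(self, tokens, modalities):
        # Residual connection around the shared attention.
        attended = [
            [t + a for t, a in zip(tok, att)]
            for tok, att in zip(tokens, self.shared_attention(tokens))
        ]
        # Residual connection around the expert chosen by each token's modality.
        return [
            [x + f for x, f in zip(vec, self.expert_ffn(vec, mod))]
            for vec, mod in zip(attended, modalities)
        ]


block = MultiwayBlock(dim=4, hidden=8)
tokens = [[0.1, 0.2, 0.3, 0.4], [0.5, -0.1, 0.0, 0.2], [-0.3, 0.4, 0.1, 0.0]]
# Two image-patch tokens followed by one text token, fused in a single sequence.
output = block.forward(tokens, ["vision", "vision", "language"])
print(len(output), len(output[0]))
```

Because attention runs over the whole sequence before routing, the text token can draw on the image tokens (and vice versa), while the per-modality experts keep separate parameters for each input type.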

- Mask-then-Predict Pretraining

The model advances pretraining with a generalized masked data modeling approach, applied uniformly to images, texts, and image-text pairs. By conceptualizing images as a foreign language, the model learns through a masked "language" modeling framework, which unifies the handling of images and texts under a common paradigm. This single-task approach enhances efficiency and scalability, contrasting with prior models that required multiple pretraining tasks.
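As a rough illustration of the mask-then-predict setup, the sketch below corrupts a token sequence and records what the model would be asked to recover. The helper `mask_tokens`, the mask ratios, and the visual token names such as `v812` are hypothetical placeholders, and uniform random masking is assumed; none of these are taken from the paper.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_ratio, rng):
    """Replace a random subset of tokens with MASK; return corrupted input and targets."""
    num_masked = min(len(tokens), max(1, round(len(tokens) * mask_ratio)))
    positions = sorted(rng.sample(range(len(tokens)), num_masked))
    position_set = set(positions)
    corrupted = [MASK if i in position_set else tok for i, tok in enumerate(tokens)]
    # The training objective is to predict the original token at each masked position.
    targets = {i: tokens[i] for i in positions}
    return corrupted, targets


rng = random.Random(0)
text_tokens = ["a", "dog", "runs", "on", "the", "beach"]
# Stand-ins for discrete visual tokens produced by an image tokenizer (hypothetical ids).
visual_tokens = ["v812", "v17", "v17", "v405", "v93", "v660", "v12", "v300", "v41", "v7"]

for name, sequence, ratio in [
    ("text", text_tokens, 0.5),
    ("image", visual_tokens, 0.4),
    ("image-text pair", visual_tokens + text_tokens, 0.4),
]:
    corrupted, targets = mask_tokens(sequence, ratio, rng)
    print(name, corrupted, targets)
```

The same function serves all three input types, which is the point of treating images as a "foreign language": once an image is a sequence of discrete tokens, the corruption and prediction steps are identical to those used for text.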

- Scalability in Model and Data

By scaling the model to roughly 1.9 billion parameters and pretraining on large public datasets, BEiT-3 generalizes well and outperforms numerous state-of-the-art models. Because the pretraining data comes only from publicly accessible sources, the results are also easier for the academic community to reproduce.

Experimental Validation

The experimental evaluation of BEiT-3 covers a wide range of vision and vision-language benchmarks:

- Vision Tasks: The model performs strongly on object detection, instance segmentation, semantic segmentation, and image classification, as shown by its results on COCO, ADE20K, and ImageNet. For instance, BEiT-3 achieves 63.7 AP for object detection on COCO, ahead of previously reported models.

- Vision-Language Tasks: BEiT-3 leads in visual reasoning, visual question answering, and image captioning, with marked improvements such as an accuracy gain of about 5.6% on the NLVR2 dataset. The model also sets new state-of-the-art results for image-text retrieval on COCO and Flickr30K, indicating robust cross-modal alignment.
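Image-text retrieval results of this kind are commonly summarized with Recall@K. The sketch below shows how that metric can be computed from a similarity matrix. It assumes a simplified one-to-one setup in which the correct candidate for query `i` is candidate `i`; real benchmarks pair each image with several captions, and the function name `recall_at_k` and the scores are made up for illustration.

```python
def recall_at_k(similarity, k):
    """Fraction of queries whose correct candidate appears among the top-k scores.

    similarity[i][j] is the score of query i against candidate j.
    Assumption: the ground-truth match for query i is candidate i.
    """
    hits = 0
    for i, row in enumerate(similarity):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(similarity)


# Toy image-to-text similarity scores (illustrative values only).
similarity = [
    [0.9, 0.1, 0.2],
    [0.8, 0.3, 0.1],
    [0.2, 0.1, 0.7],
]
print(recall_at_k(similarity, 1))
print(recall_at_k(similarity, 2))
```

In this toy matrix the second query ranks a wrong candidate first, so it counts as a miss at K=1 but as a hit at K=2.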

Potential Implications and Future Directions

The paper highlights several theoretical and practical implications:

- Generative Pretraining: BEiT-3's results show that a single generative (mask-then-predict) objective is effective across modalities, which could inform the design of future multimodal learning systems.

- Architectural Unification: Handling different modalities in one Multiway Transformer may encourage further work on modular architectures for efficient, scalable models.

- Scalability and Accessibility: Demonstrating top-tier capabilities using publicly accessible datasets suggests a shift towards more open-science approaches in AI research.

Looking ahead, the paper hints at extending BEiT-3’s architecture to accommodate more modalities, such as audio, and enhancing multilingual support. This further convergence could pioneer new developments in universal models capable of handling all data forms seamlessly, thereby driving a new wave of AI applications across diverse domains.

In conclusion, BEiT-3 represents a substantial advancement in the field of vision and vision-language tasks. Its innovative approach and impressive empirical results suggest a promising avenue for future research in multimodal AI systems.