Uni-Perceiver-MoE: Addressing Task Interference in Generalist Models

Building generalist models that efficiently handle a diverse array of tasks across multiple modalities is a prominent goal in machine learning. The paper "Uni-Perceiver-MoE: Learning Sparse Generalist Models with Conditional MoEs" makes significant strides in this direction by addressing task interference, a prevalent issue that often limits the performance of generalist models that share parameters across tasks.

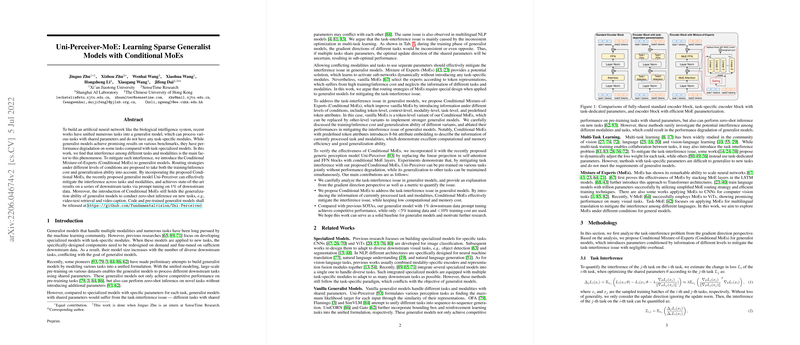

Although generalist models promise to unify tasks without task-specific modules, they frequently suffer from task interference: because parameters are shared, improving one task can degrade performance on another. The paper attributes this degradation primarily to inconsistent optimization across tasks during training, meaning that different tasks push the shared parameters in conflicting directions. To mitigate it, the authors incorporate Conditional Mixture-of-Experts (Conditional MoEs) into Uni-Perceiver, a recently proposed generalist model, and propose a novel routing strategy for these experts.
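To make "inconsistent optimization" concrete, the sketch below compares the gradients that two tasks induce on the same shared parameters. It uses a cosine-similarity check and a first-order Taylor estimate of how a step on one task changes the other task's loss. This is a common proxy for gradient conflict and is assumed here for illustration; it is not necessarily the paper's exact interference metric, and the gradient values and names (`g_caption`, `g_classify`) are hypothetical.

```python
import math


def dot(u, v):
    """Inner product of two equal-length gradient vectors."""
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity; values below zero mean the tasks pull in opposing directions."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def first_order_loss_change(g_i, g_j, lr):
    """Approximate change in task i's loss after one SGD step taken on task j.

    First-order Taylor expansion:
        L_i(theta - lr * g_j) - L_i(theta) ~= -lr * <g_i, g_j>
    A positive result means the update for task j increases (hurts) task i's loss.
    """
    return -lr * dot(g_i, g_j)


# Hypothetical gradients of two tasks w.r.t. the same shared parameters
g_caption = [0.8, -0.2, 0.5]
g_classify = [-0.6, 0.1, -0.4]

similarity = cosine(g_caption, g_classify)
harm = first_order_loss_change(g_caption, g_classify, lr=0.1)
print(f"cosine={similarity:.3f}, loss change for captioning={harm:.3f}")
```

Here the two gradients point in nearly opposite directions, so a step that helps classification is estimated to raise the captioning loss. This is the kind of conflict that routing tasks to different experts is meant to reduce.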

Conditional MoEs dynamically allocate computation among a set of experts (sub-networks), activating only a few of them for each input. This lets parts of the model specialize without any task-specific design. Whereas conventional MoEs condition expert selection only on token representations, Conditional MoEs can also condition it on additional information such as context, modality, or task. The paper compares several routing strategies: token-level, context-level, modality-level, task-level, and a new attribute-based approach. It ultimately favors attribute-based routing for its balance of efficiency, computational cost, and generalization capability.
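The difference between routing strategies comes down to what the gate sees. The following is a minimal pure-Python sketch of a top-k MoE layer, assuming random weights in place of learned ones, linear maps in place of feed-forward experts, and a single gate matrix reused for both strategies purely for brevity. The attribute set (`modality`, `role`) and all helper names are hypothetical illustrations, not the paper's implementation.

```python
import math
import random

NUM_EXPERTS = 4
TOP_K = 2
DIM = 8
rng = random.Random(0)  # fixed seed so the sketch is reproducible


def linear(dim_in, dim_out):
    """Random dim_out x dim_in matrix standing in for learned weights."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim_in)] for _ in range(dim_out)]


def matvec(w, x):
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def top_k_route(gate_input, gate_w, k=TOP_K):
    """Pick the k highest-scoring experts and renormalise their gate weights."""
    probs = softmax(matvec(gate_w, gate_input))
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in chosen)
    return chosen, [probs[i] / total for i in chosen]


# Hypothetical token attributes; the paper's actual attribute set may differ
ATTRIBUTES = {"modality": ["text", "image", "video"], "role": ["input", "target"]}
attr_tables = {name: linear(DIM, len(vals)) for name, vals in ATTRIBUTES.items()}


def attribute_embedding(attrs):
    """Sum one embedding row per attribute value; ignores token content entirely."""
    vec = [0.0] * DIM
    for name, value in attrs.items():
        row = attr_tables[name][ATTRIBUTES[name].index(value)]
        vec = [a + b for a, b in zip(vec, row)]
    return vec


gate_w = linear(DIM, NUM_EXPERTS)
experts = [linear(DIM, DIM) for _ in range(NUM_EXPERTS)]  # linear maps stand in for FFN experts


def moe_forward(x, gate_input):
    """Run only the selected experts on x and mix their outputs by gate weight."""
    chosen, weights = top_k_route(gate_input, gate_w)
    out = [0.0] * DIM
    for idx, w in zip(chosen, weights):
        out = [o + w * e for o, e in zip(out, matvec(experts[idx], x))]
    return out


tok_a = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
tok_b = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
image_input = {"modality": "image", "role": "input"}

# Token-level routing: the gate sees each token's own representation
y_a_token = moe_forward(tok_a, gate_input=tok_a)
# Attribute-level routing: the gate sees only the token's attributes, so every
# image-input token gets the same experts and the choice can be computed once
route_image_input = top_k_route(attribute_embedding(image_input), gate_w)
y_a_attr = moe_forward(tok_a, gate_input=attribute_embedding(image_input))
y_b_attr = moe_forward(tok_b, gate_input=attribute_embedding(image_input))
```

Because the attribute gate never looks at token content, a new combination such as a video target token is still routed by the same learned tables. This illustrates, under the assumptions above, why attribute-based routing can transfer to tasks that were not seen during training.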

The inclusion of Conditional MoEs in generalist models offers several advantages:

- Improved Task Performance: Conditional MoEs alleviate the task interference inherent in shared-parameter models, leading to better performance across diverse benchmarks, as demonstrated by Uni-Perceiver-MoE.

- Enhanced Generalization: Attribute-based routing gives Uni-Perceiver-MoE robust zero-shot generalization. The model performs well not only on the tasks seen during pre-training but also on unseen tasks such as video-text retrieval and video captioning.

- Efficient Training and Inference: Because only a few experts are activated per token, Conditional MoEs substantially reduce computational and memory costs during training and inference compared with dense models of comparable parameter count, which improves scalability.
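The efficiency argument is easiest to see with back-of-envelope arithmetic. The sketch below counts multiply-accumulates (MACs) for the expert feed-forward layers of a single token, comparing top-k routing with a dense layer that would run every expert. The sizes (768/3072, 8 experts, top-2) are hypothetical, not the paper's configuration, and the count ignores attention, biases, and any expert-parallel communication.

```python
def ffn_macs(d_model, d_ff):
    """MACs for one two-layer feed-forward expert (d_model -> d_ff -> d_model), biases ignored."""
    return 2 * d_model * d_ff


def moe_macs_per_token(d_model, d_ff, num_experts, top_k):
    """Gate projection plus the top_k experts that actually run for one token."""
    gate = d_model * num_experts
    return gate + top_k * ffn_macs(d_model, d_ff)


def dense_equivalent_macs(d_model, d_ff, num_experts):
    """A dense layer holding the same parameters as all experts would run all of them."""
    return num_experts * ffn_macs(d_model, d_ff)


sparse = moe_macs_per_token(768, 3072, num_experts=8, top_k=2)
dense = dense_equivalent_macs(768, 3072, num_experts=8)
ratio = sparse / dense
print(sparse, dense, f"{ratio:.3f}")  # 9443328 37748736 0.250
```

Per-token compute scales with `top_k` rather than with the number of experts, so adding experts grows capacity far faster than cost. The stored parameters still grow with every expert added; the savings come from activating only a few of them.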

Empirical evaluations show that integrating Conditional MoEs allows Uni-Perceiver to achieve state-of-the-art results across various modalities with minimal downstream data. These results were obtained using only 1% of the downstream data, which significantly reduces training costs compared to existing methods.

Looking forward, this paper's contributions pave the way for future work on optimizing routing mechanisms and extending these ideas to more complex tasks across richer modality spaces. Efficient, scalable, and less resource-intensive generalist models remain a compelling direction for artificial intelligence research, with potential applications ranging from multilingual translation to large-scale perception systems.

In conclusion, "Uni-Perceiver-MoE: Learning Sparse Generalist Models with Conditional MoEs" effectively addresses a critical challenge in the development of generalist AI models. By mitigating task interference through conditional expert selection and improving performance across a spectrum of tasks, it represents a significant step toward adaptive, efficient, and versatile AI systems.