Overview of CoCa: Contrastive Captioners as Image-Text Foundation Models

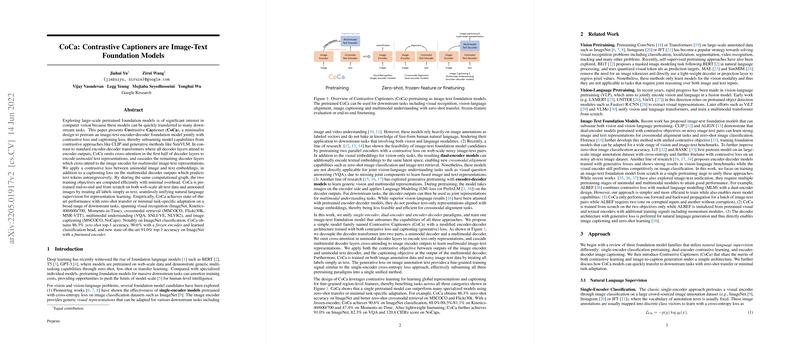

The paper "CoCa: Contrastive Captioners are Image-Text Foundation Models" introduces CoCa (Contrastive Captioners), a framework for pretraining image-text foundation models that jointly optimizes a contrastive objective and a generative (captioning) objective. With a minimalist design, CoCa unifies the capabilities of the single-encoder, dual-encoder, and encoder-decoder paradigms, so one pretrained model can serve both purely visual tasks and vision-language tasks.

Technical Approach

CoCa trains a single image-text encoder-decoder with two objectives: a contrastive loss that aligns image and text embeddings across the image-text pairs in a batch, and a captioning loss that trains the decoder to predict text tokens autoregressively. This combines the strengths of contrastive models such as CLIP with those of generative models such as SimVLM. Unlike a standard encoder-decoder transformer, CoCa omits cross-attention in the first half of the decoder layers, so these layers build unimodal text representations before the remaining layers cross-attend to the image encoder to build multimodal image-text representations.
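To make the two objectives concrete, here is a minimal NumPy sketch; it is not the paper's code. It assumes the image and text embeddings for a batch of N matched pairs and the decoder's per-token logits are already computed. The contrastive term uses a symmetric InfoNCE form with a fixed temperature (0.07 is an illustrative value), and the captioning term is teacher-forced cross-entropy averaged over tokens. The function names and the loss weights `lambda_con` and `lambda_cap` are hypothetical.

```python
import numpy as np

def log_softmax(x, axis=-1):
    # Subtract the max before exponentiating for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def contrastive_loss(img_emb, txt_emb, temperature=0.07):
    """Symmetric InfoNCE over N matched image-text pairs (row i <-> row i)."""
    img = img_emb / np.linalg.norm(img_emb, axis=-1, keepdims=True)
    txt = txt_emb / np.linalg.norm(txt_emb, axis=-1, keepdims=True)
    logits = img @ txt.T / temperature                    # (N, N), diagonal = positives
    idx = np.arange(logits.shape[0])
    i2t = -log_softmax(logits, axis=1)[idx, idx].mean()   # each image vs. all texts
    t2i = -log_softmax(logits, axis=0)[idx, idx].mean()   # each text vs. all images
    return i2t + t2i

def captioning_loss(token_logits, target_ids):
    """Teacher-forced cross-entropy: token_logits (T, V), target_ids (T,)."""
    logp = log_softmax(token_logits, axis=-1)
    return -logp[np.arange(len(target_ids)), target_ids].mean()

def coca_loss(img_emb, txt_emb, token_logits, target_ids,
              lambda_con=1.0, lambda_cap=1.0):
    # Weighted sum of the two objectives; the default weights are illustrative.
    return (lambda_con * contrastive_loss(img_emb, txt_emb)
            + lambda_cap * captioning_loss(token_logits, target_ids))
```

Because the total objective is a weighted sum, both terms can be optimized in the same training step rather than in separate pretraining stages.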

Specifically, the architecture involves:

- Modified Decoder Design: Dividing the decoder into unimodal and multimodal sections. The unimodal section processes text-only representations without cross-attention, while the multimodal section cross-attends to the image encoder to form combined image-text representations.

- Shared Computational Graph: Both the contrastive and captioning losses are computed from a single forward pass through a shared computational graph, so adding the second objective costs little extra computation.
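The decoder split and the shared graph can be sketched with single-head attention in NumPy. This is a toy under stated assumptions: random untrained weights, two layers per half, no feed-forward blocks or layer norm, and the last text position standing in for a [CLS]-style summary token whose unimodal output serves as the text embedding. `decoder_forward`, `UNI`, and `MULTI` are hypothetical names.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 16  # hypothetical model width

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attend(q_in, kv_in, w, causal=False):
    """Single-head attention; w = (Wq, Wk, Wv) projection matrices."""
    wq, wk, wv = w
    q, k, v = q_in @ wq, kv_in @ wk, kv_in @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    if causal:
        # Position t may only attend to positions <= t.
        mask = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(mask, -1e9, scores)
    return softmax(scores) @ v

def make_layer():
    return tuple(rng.normal(scale=D ** -0.5, size=(D, D)) for _ in range(3))

UNI = [make_layer() for _ in range(2)]                    # causal self-attention only
MULTI = [(make_layer(), make_layer()) for _ in range(2)]  # self-attention + cross-attention

def decoder_forward(text_tokens, image_tokens):
    """One pass yields both the unimodal text embedding and multimodal states."""
    h = text_tokens
    for w in UNI:                       # unimodal half: never sees the image
        h = h + attend(h, h, w, causal=True)
    text_embedding = h[-1]              # assumption: last position acts as [CLS]
    for w_self, w_cross in MULTI:       # multimodal half: cross-attends to the image
        h = h + attend(h, h, w_self, causal=True)
        h = h + attend(h, image_tokens, w_cross)
    return text_embedding, h            # h would feed a vocabulary head for captioning
```

Because the unimodal half never sees the image, `text_embedding` can feed the contrastive loss, while the multimodal states from the same pass feed the captioning loss.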

Empirical Evaluation

The paper's extensive empirical evaluation shows CoCa reaching state-of-the-art or competitive performance across a broad array of tasks:

- Image Recognition: On ImageNet, CoCa achieves 86.3% zero-shot top-1 accuracy, 90.6% top-1 accuracy with a frozen encoder and a learned classification head, and 91.0% top-1 accuracy with a fine-tuned encoder.

- Crossmodal Retrieval: On image-text retrieval benchmarks such as MSCOCO and Flickr30K, CoCa achieves superior retrieval metrics while remaining parameter-efficient.

- Multimodal Understanding: On benchmarks such as VQA, SNLI-VE, and NLVR2, CoCa outperforms strong vision-language models, setting new performance standards.

- Image Captioning: CoCa excels in image captioning, achieving competitive scores on MSCOCO and new state-of-the-art results on NoCaps.
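The zero-shot recognition result relies on the aligned embeddings: each class name is encoded as text, and each image is assigned the class whose text embedding is most similar. The sketch below assumes those embeddings are already computed; in practice the class texts would be prompts passed through the unimodal text decoder, and the function names here are hypothetical.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def zero_shot_classify(image_embs, class_text_embs):
    """For each image, pick the class whose text embedding has the highest cosine similarity."""
    sims = l2_normalize(image_embs) @ l2_normalize(class_text_embs).T  # (N_images, N_classes)
    return sims.argmax(axis=1)

def top1_accuracy(pred, labels):
    return float((pred == labels).mean())

# Toy usage: three classes with orthogonal text embeddings.
classes = np.eye(3)
images = np.array([[0.1, 0.0, 0.9], [0.8, 0.1, 0.0], [0.0, 1.0, 0.2]])
print(zero_shot_classify(images, classes))  # [2 0 1]
```

No classifier is trained here: the label set is defined entirely by the class texts, which is what makes the evaluation zero-shot.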

Design and Implementation Efficiencies

The split decoder and the shared computational graph let CoCa handle varied tasks efficiently. For zero-shot evaluation, CoCa directly uses its aligned unimodal image and text embeddings, which removes the need for the downstream fusion adaptations seen in other methods. CoCa also applies attentional pooling to adapt encoder features to different tasks, which benefits both the pretraining objectives and downstream task evaluation.
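Attentional pooling can be sketched as a single attention layer whose queries are learned parameters rather than tokens from the input. The paper describes a multi-head version with one query for the contrastive embedding and 256 queries for the captioning decoder; this NumPy sketch uses a single head with random, untrained weights, and `AttentionalPooler` is a hypothetical name.

```python
import numpy as np

rng = np.random.default_rng(1)

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

class AttentionalPooler:
    """Single-head pooling: n_query learned queries attend over encoder tokens."""

    def __init__(self, n_query, dim):
        scale = dim ** -0.5
        self.queries = rng.normal(scale=scale, size=(n_query, dim))  # trained in practice
        self.wk = rng.normal(scale=scale, size=(dim, dim))
        self.wv = rng.normal(scale=scale, size=(dim, dim))

    def __call__(self, tokens):
        k, v = tokens @ self.wk, tokens @ self.wv
        weights = softmax(self.queries @ k.T / np.sqrt(k.shape[-1]))  # (n_query, n_tokens)
        return weights @ v                                             # (n_query, dim)

image_tokens = rng.normal(size=(196, 64))  # e.g. a 14x14 grid of patch features
con_pool = AttentionalPooler(n_query=1, dim=64)
cap_pool = AttentionalPooler(n_query=256, dim=64)
print(con_pool(image_tokens).shape, cap_pool(image_tokens).shape)  # (1, 64) (256, 64)
```

The number of queries, not the number of image tokens, sets the output size, so the same encoder output can be pooled to a single vector for contrastive alignment or to a longer sequence for the decoder to cross-attend to.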

Implications and Future Directions

From a practical standpoint, CoCa's unified approach reduces pretraining complexity and resource demands by removing the need for multi-stage pretraining on different objectives and datasets. Successfully training contrastive and generative losses together in a shared architecture has broad implications for foundation models that integrate diverse datasets and learn from them with a single training recipe.

Looking forward, CoCa’s framework presents several avenues for future advancements:

- Refinement of Architectural Components: Further optimizing how the decoder is split into unimodal and multimodal layers could improve efficiency and performance.

- Expansion to Other Modalities: Applying the CoCa architecture to new modalities, such as audio-text integration, could enable robust multimodal learning scenarios.

- Extended Crossmodal Retrieval: Detailed evaluation of CoCa for additional crossmodal retrieval tasks, especially those involving video and real-time interaction, can further validate its versatility.

Conclusion

The introduction of CoCa marks a significant step toward unifying previously separate model paradigms in a single streamlined architecture that addresses diverse vision and vision-language tasks. By combining contrastive and captioning losses within an efficient computational framework, CoCa sets a high bar for subsequent foundation models in the field and paves the way for more versatile and efficient image-text processing systems.